Blog

-

Llama 2-13B now available on Amazon Bedrock

Meta’s Llama 2 Chat model (13B) is now available on Amazon Bedrock! Amazon Bedrock is the first public cloud service to offer a fully managed API for Llama 2, Meta’s advanced LLM. Organizations of all sizes can now use the Llama 2 Chat models through Amazon Bedrock, eliminating…

-

Speed Up Inference on Llama 2

This blog post explores ways to speed up inference for the Llama 2 family of models using PyTorch’s native optimizations: fast native kernels, torch.compile’s graph transformations, and tensor parallelism for distributed inference. We’ve achieved a latency of 29 milliseconds per token for individual requests on the 70B Llama model, tested on eight…

-

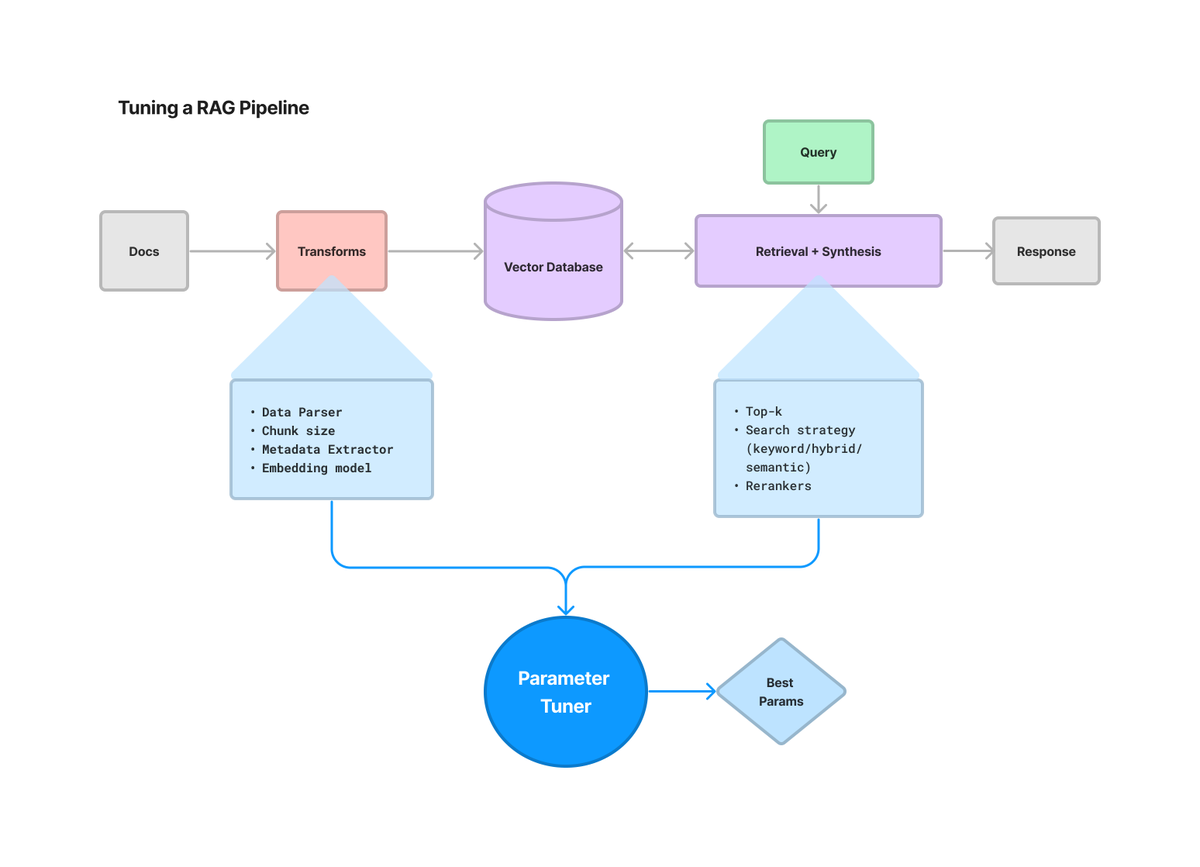

Hyperparameter Tuning for RAG

A HUGE issue with building LLM apps is that there are way too many parameters to tune, and it extends way beyond prompts: chunking, retrieval strategy, and metadata, just to name a few. LlamaIndex has a full notebook guide showing you how to optimize a sample RAG pipeline w/ 1) chunk size, and 2) top-k. Try it out…
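To make the idea concrete, here is a minimal sketch of a grid search over chunk size and top-k. It is not the LlamaIndex notebook's code: `grid_search` and `toy_evaluate` are hypothetical names, and the scoring function is a placeholder you would swap for a real evaluation of your RAG pipeline.

```python
from itertools import product


def grid_search(evaluate, chunk_sizes, top_ks):
    """Score every (chunk_size, top_k) pair; return the best pair and all results."""
    results = []
    for chunk_size, top_k in product(chunk_sizes, top_ks):
        score = evaluate(chunk_size=chunk_size, top_k=top_k)
        results.append({"chunk_size": chunk_size, "top_k": top_k, "score": score})
    best = max(results, key=lambda r: r["score"])
    return best, results


def toy_evaluate(chunk_size, top_k):
    # Placeholder metric (an assumption, NOT a real RAG evaluation).
    # It simply peaks at chunk_size=512 and top_k=2 so the search has something to find.
    return -abs(chunk_size - 512) / 512 - abs(top_k - 2) / 2


best, results = grid_search(
    toy_evaluate,
    chunk_sizes=[256, 512, 1024],
    top_ks=[1, 2, 5],
)
print(best["chunk_size"], best["top_k"])
```

In practice, `evaluate` would rebuild the index with the given chunk size, run a fixed set of questions with the given top-k, and return an answer-quality score, so each grid point can be expensive to compute.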

-

Llama 2 for Enterprise

Dell has expanded its hardware offerings by adding support for the Llama 2 models to its Dell Validated Design for Generative AI and on-site generative AI solutions. Meta introduced Llama 2 in July, and it has since gained support from several cloud services, including Microsoft Azure, AWS, and Google Cloud. However, Dell’s initiative stands out as it…

-

Getting started with Llama-2

This manual offers guidance and tools to assist in setting up Llama, covering access to the model, hosting, instructional guides, and integration methods. It also includes additional resources to support your work with Llama-2. Acquiring the Models. Hosting Options. Amazon Web Services (AWS): AWS offers various hosting methods for Llama models, such as SageMaker JumpStart,…

-

Open LLMs on GKE – Llama 2 and Beyond

Are you looking to take your GenAI platform game to the next level? Llama 2 is a powerful open source language model that can be fine-tuned on your own custom dataset to perform a variety of tasks, such as text generation, translation, and summarization. Combined with Google Kubernetes Engine (GKE), the most scalable Kubernetes platform for running…

-

How to install LLaVA LLM Locally

You can install LLaVA LLM on your local host. It’s free, open-source, and includes a vision feature. Let us show you how. I found LLaVA last week and decided to try it out. I tested it in different ways and was really impressed with it. I saw many people asking, “How can I…

-

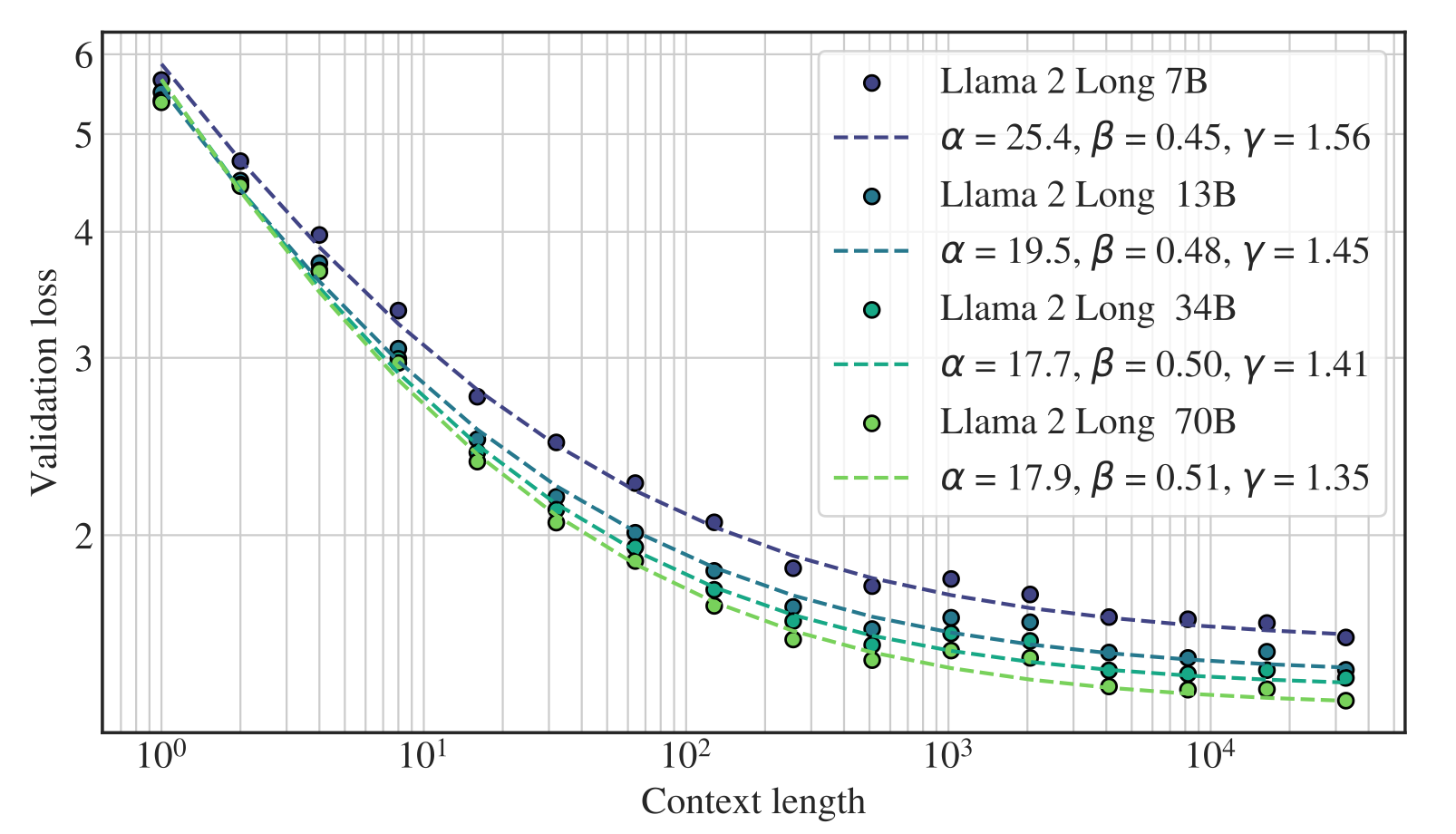

Llama-2 Keeps Getting Better

We present a series of long-context LLMs that support effective context windows of up to 32,768 tokens. Our model series is built through continual pretraining from Llama 2 with longer training sequences and on a dataset where long texts are upsampled. We perform extensive evaluation on language modeling, synthetic context probing tasks,…

-

Llemma LLM

Llemma LLM: a language model for mathematics. The model was initialized with weights from Code Llama 7B and trained on Proof-Pile-2 for 200B tokens. There’s also a 34B-parameter variant, Llemma 34B. Performance insights: Llemma models excel in sequential mathematical thinking and are adept at…