You can install the LLaVA LLM on your local host. It’s free, open-source, and includes a vision feature. Let us show you how.

I found LLaVA last week and decided to try it out. I tested it in different ways and was really impressed with it.

I saw many people asking, “How can I download the LLaVA LLM, then install and use it on my local machine?” It’s a good question because you can’t use it if you don’t download it.

Quick steps to follow:

- Visit their GitHub repository.

- Two versions are offered: LLaVA 7B 8-bit and LLaVA 7B 16-bit.

- The 8-bit version is compatible with the free Google Colab, while the 16-bit requires Google Colab Pro for additional RAM.
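To see why the 16-bit version needs more memory, here is a rough back-of-the-envelope sketch. It assumes a nominal 7 billion parameters and counts the weights only; the vision encoder, activations, and runtime overhead come on top, so treat the numbers as a lower bound rather than a measurement.

```python
def weight_memory_gib(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed just to hold the model weights, in GiB."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1024**3


# Assumption: "7B" is taken as exactly 7e9 parameters for illustration.
PARAMS_7B = 7e9

for bits in (8, 16):
    gib = weight_memory_gib(PARAMS_7B, bits)
    print(f"{bits}-bit weights: about {gib:.1f} GiB")
```

Halving the precision halves the weight footprint, which is consistent with the 8-bit version fitting the free tier while the 16-bit version needs the extra memory of Colab Pro.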

Using Google Colab for LLaVA

• Save a copy of the notebook to your Drive (which is a common step).

• Change the runtime type to ‘T4 GPU’.

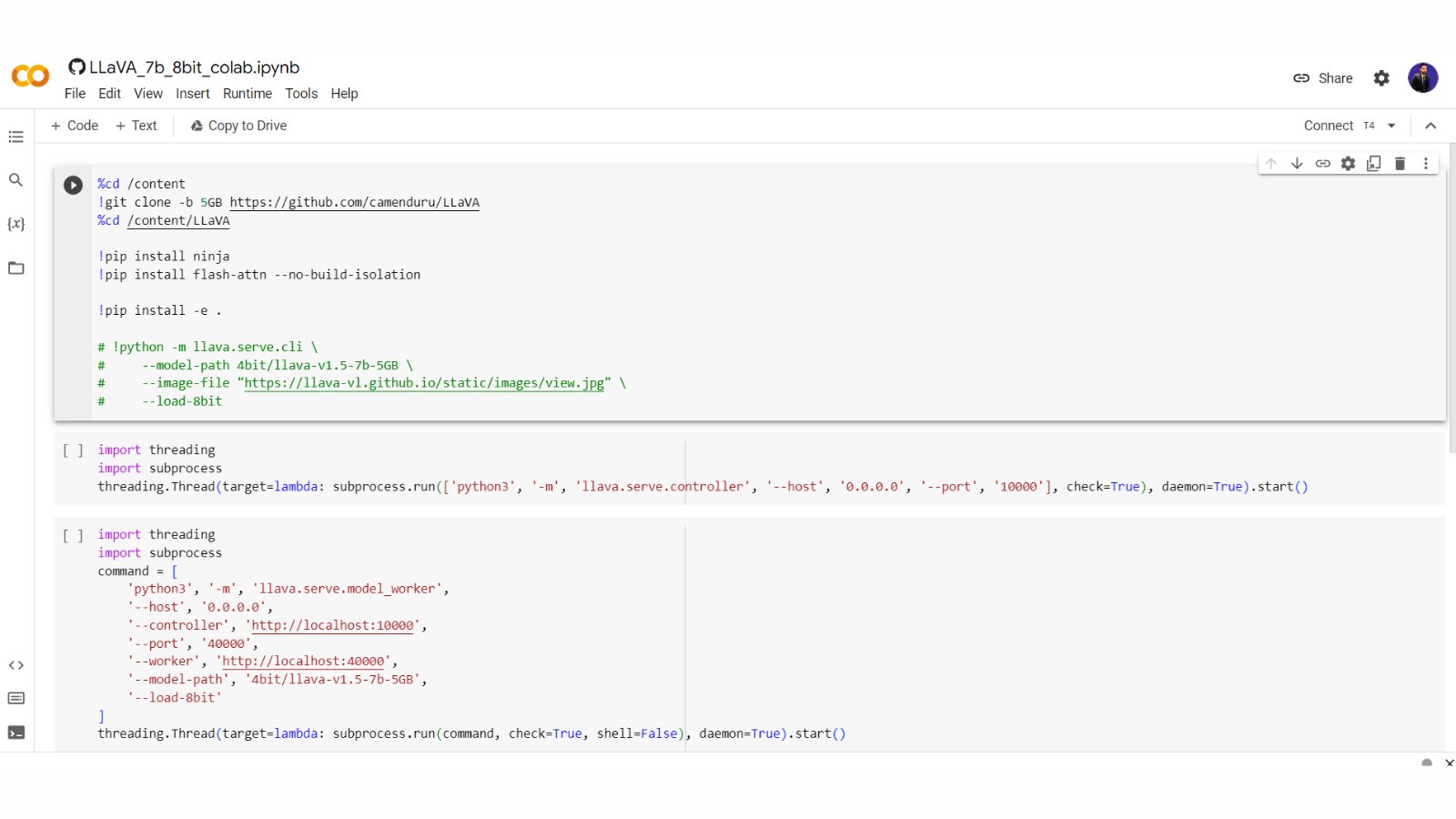

• Run the code:

– Clone the “LLaVA” GitHub repository

– Use the Python subprocess module to run the LLaVA controller

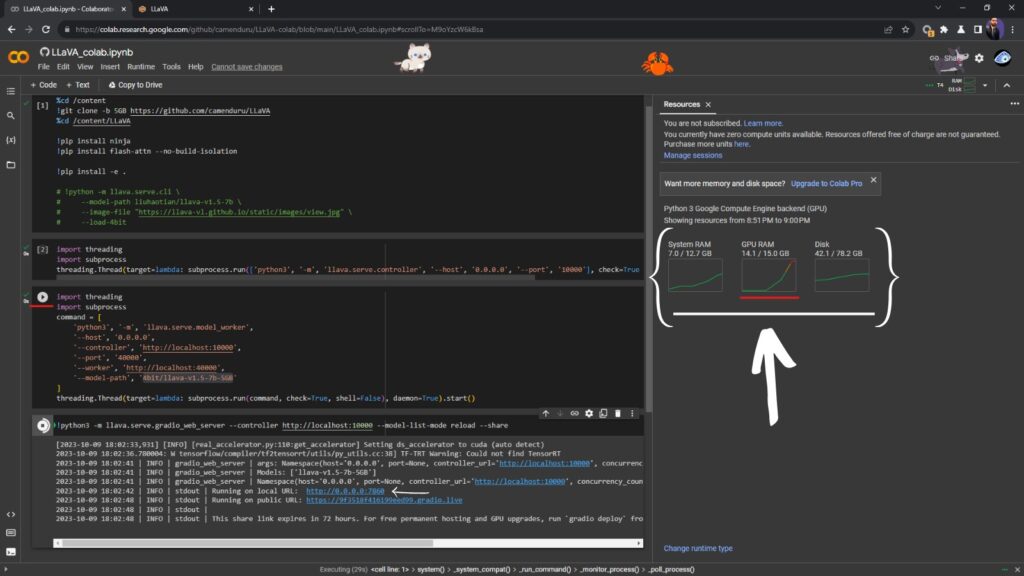

• Keep an eye on RAM and GPU usage during installation.

• Start a Gradio web server:

– The model runs on a specific port

– A public IP address is provided for access

After the installation process, you should see this:

That’s it! You’ll find the localhost link there after installation. Click it and enjoy!
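For readers who want to see what the "Run the code" step roughly does, here is a minimal sketch of cloning the repository and starting the controller, a model worker, and the Gradio web server with Python's subprocess module. The repository URL, the checkpoint name, the ports, and the `llava.serve.*` module names and flags are assumptions based on the upstream LLaVA README, not taken from the notebook itself; they may differ between versions, so check them against the repository you cloned.

```python
import subprocess
import sys
import time

# Assumptions (not stated in this article): upstream repo, checkpoint, ports.
REPO_URL = "https://github.com/haotian-liu/LLaVA.git"
MODEL_PATH = "liuhaotian/llava-v1.5-7b"
CONTROLLER_URL = "http://localhost:10000"
WORKER_URL = "http://localhost:40000"


def build_commands(load_8bit=True):
    """Return the command lines for each step, without running anything."""
    worker = [
        sys.executable, "-m", "llava.serve.model_worker",
        "--host", "0.0.0.0",
        "--controller", CONTROLLER_URL,
        "--port", "40000",
        "--worker", WORKER_URL,
        "--model-path", MODEL_PATH,
    ]
    if load_8bit:
        # Assumed flag for 8-bit loading (the variant suited to free Colab).
        worker.append("--load-8bit")
    return {
        "clone": ["git", "clone", REPO_URL],
        "controller": [
            sys.executable, "-m", "llava.serve.controller",
            "--host", "0.0.0.0", "--port", "10000",
        ],
        "worker": worker,
        "web": [
            sys.executable, "-m", "llava.serve.gradio_web_server",
            "--controller", CONTROLLER_URL,
            "--model-list-mode", "reload",
            "--share",  # asks Gradio for a public link
        ],
    }


def launch(load_8bit=True, repo_dir="LLaVA"):
    """Clone, install, and start the three processes; returns the Popen handles."""
    cmds = build_commands(load_8bit)
    subprocess.run(cmds["clone"], check=True)
    subprocess.run([sys.executable, "-m", "pip", "install", "-e", "."],
                   cwd=repo_dir, check=True)
    procs = []
    for name in ("controller", "worker", "web"):
        procs.append(subprocess.Popen(cmds[name], cwd=repo_dir))
        time.sleep(10)  # crude wait so each service is up before the next starts
    return procs
```

Calling `launch()` in a Colab cell would start everything in the background; `launch(load_8bit=False)` corresponds to the 16-bit variant. The fixed sleep is a simplification, and a more careful version would poll the controller until it responds.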

Credits: Haider.
