Blog

-

Llama-3 LLM

Meta’s Llama 3 appears to be coming our way in July, and Meta seems to be thinking about it differently this time. More details about Llama 3 have emerged over the last couple of months, and Meta CEO Mark Zuckerberg has talked about it a little more. Some of the things that…

-

LlamaParse

The LlamaIndex team is excited to officially launch LlamaParse, the first genAI-native document parsing solution. Not only is it better at parsing out images, tables, and charts than virtually every other parser, it is now steerable through natural language instructions: output the document in whatever format you desire! It is also the only parsing solution that seamlessly allows…

-

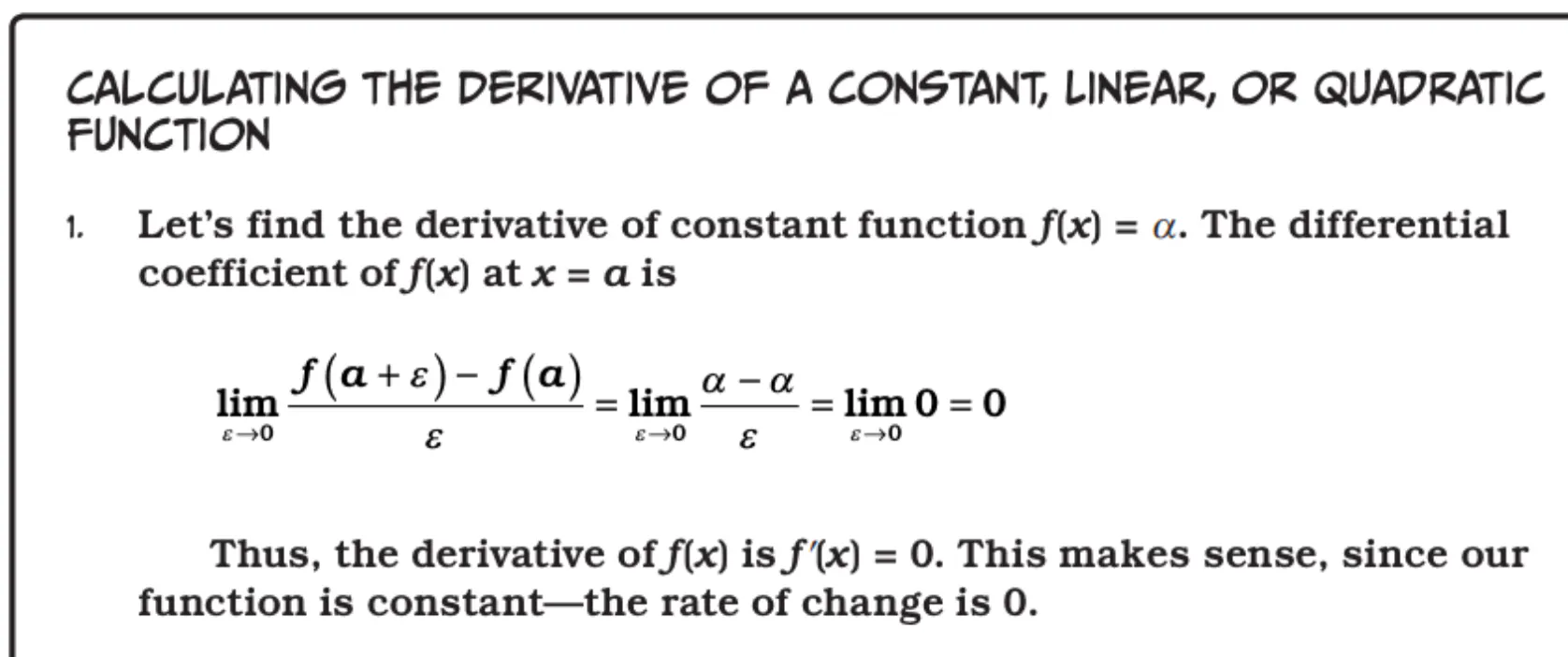

How to Customize LlamaIndex RAG Application

Here’s a minimal implementation of a Document Chat RAG application, all in just 6 lines of code! Now let’s customize it step by step. I want to parse my documents into smaller chunks. I want to use a different vector store: First, you can install the vector store you want to use. For example, to…
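To make the "smaller chunks" step concrete, here is a rough, library-free sketch of what chunking with overlap does. This is not the LlamaIndex API: the function name `chunk_text` and its parameters `chunk_size` and `overlap` are hypothetical, and the sketch splits on whitespace-separated words rather than tokens or sentences.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 20) -> list[str]:
    """Split text into word-based chunks; neighbouring chunks share `overlap` words.

    Hypothetical helper for illustration only, not part of LlamaIndex.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in the range [0, chunk_size)")

    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current window has reached the end of the text,
        # so we do not emit a trailing chunk made only of overlap words.
        if start + chunk_size >= len(words):
            break
    return chunks


if __name__ == "__main__":
    sample = "a b c d e f g h i j"
    for chunk in chunk_text(sample, chunk_size=4, overlap=1):
        print(chunk)
```

Smaller chunks tend to make retrieval more precise, while the overlap keeps a sentence that straddles a boundary from losing its context. In a real application you would most likely rely on the chunking options your framework provides instead of a hand-rolled splitter like this one.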

-

How Does Llama-3 Outwit GPT-4

Revolutionizing our digital experiences with groundbreaking multimodal capabilities, the answer is yes. Thanks to Meta’s ambitious introduction of Llama 2 in July, a new standard in AI development has been set. In today’s article, we’ll dive deep into how Meta’s Llama 3 is reshaping the future of AI, outwitting its competitors and even human intelligence…

-

Run Llama-2 on Groq

Groq is insanely fast, and we’re excited to feature an official integration with LlamaIndex. The @GroqInc LPU is specially designed for LLM generation and currently supports Llama 2 and Mixtral models. About Groq: Welcome to Groq! 🚀 Here at Groq, we are proud to introduce the world’s inaugural Language Processing Unit™, or LPU. This groundbreaking LPU…

-

LlamaParse

Today marks a significant milestone in the LlamaIndex ecosystem with the unveiling of LlamaCloud, the latest offering in managed parsing, ingestion, and retrieval services. This innovation is tailored to enhance the capabilities of your LLM and RAG applications by providing them with production-level context-augmentation. LlamaCloud enables enterprise AI engineers to concentrate on developing business logic…

-

LLaMA Code Assistant

Coding assistants have revolutionized how developers work globally, offering a unique blend of convenience and efficiency. However, a common limitation has been their reliance on an internet connection, posing a challenge in scenarios like flights or areas without internet access. Enter an innovative solution that addresses this issue head-on: the ability to utilize a coding…

-

CodeLlama 70B

CodeLlama-70B-Instruct achieves 67.8 on HumanEval, making it one of the highest performing open models available today. CodeLlama-70B is the most performant base for fine-tuning code generation models and we’re excited for the community to build on this work. Code Llama 70B models are available under the same license as Llama 2 and previous Code Llama…

-

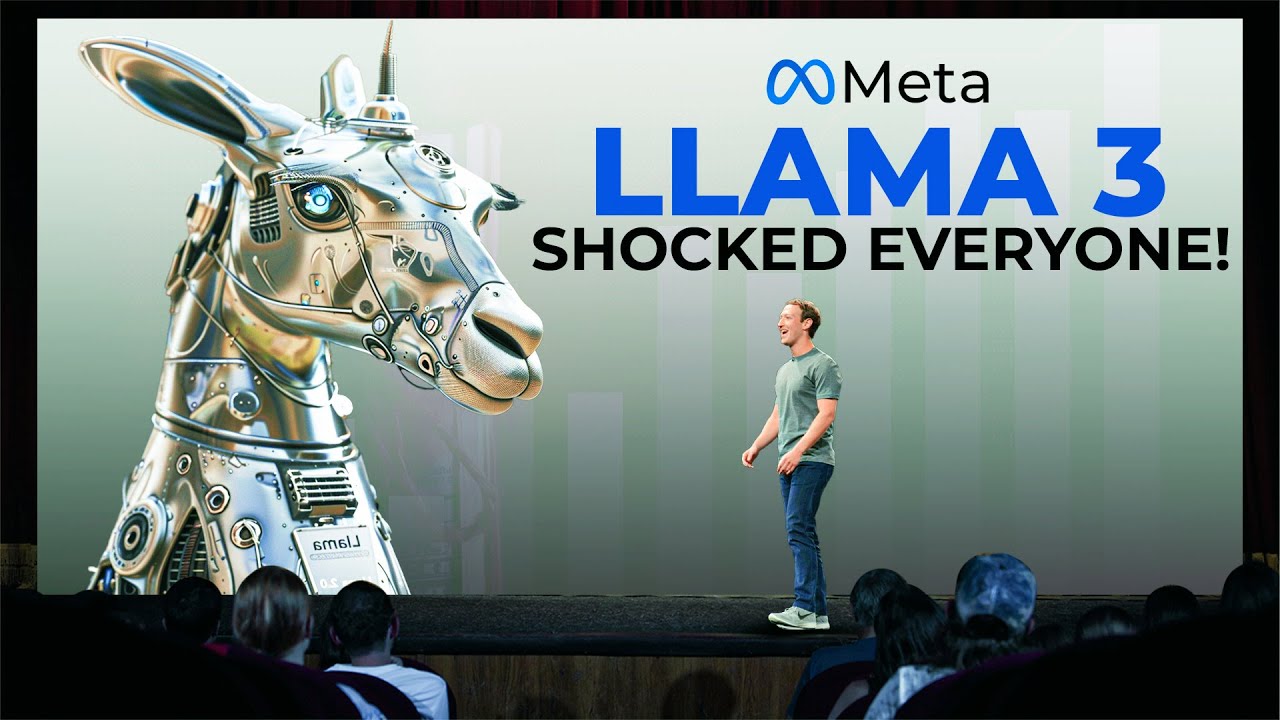

Llama Index Roadmap

We have big plans in 2024 to make the: Stay on top of the AI Ecosystem. This is a living document (last updated: today) and will change month by month. Check it out on our GitHub discussions page: https://github.com/run-llama/llama_index/discussions/9888. Let us know your feedback and thoughts, and check out our contributing guide if…