It’s been approximately seven months since Meta released Llama 1 and just a few months since the introduction of Llama 2, which was soon followed by the launch of Code Llama. The response from the community has been overwhelming. There has been significant momentum and innovation, with over 30 million downloads of Llama-based models via Hugging Face, more than 10 million of them in the past 30 days. Like PyTorch, Llama has become a platform on which developers around the world build, and the excitement is palpable.

Impact to Date

The growth of the Llama community is underscored by several notable developments:

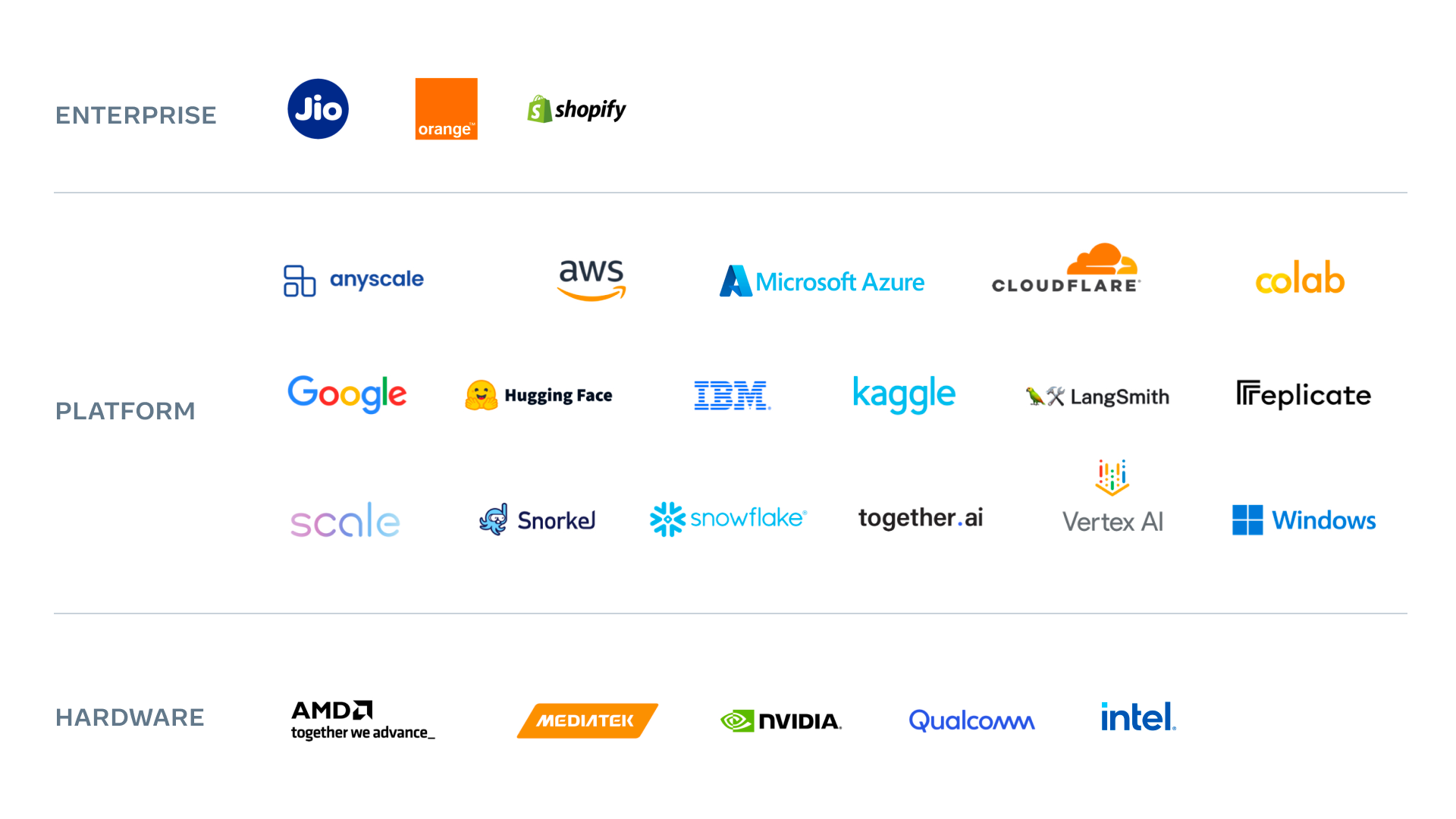

- Cloud usage: Leading platforms like AWS, Google Cloud, and Microsoft Azure have integrated Llama models, and Llama 2's cloud presence continues to grow. AWS was recently announced as the first managed API partner for Llama 2, which means organizations can use Llama 2 models on Amazon Bedrock without managing the underlying infrastructure. Adoption on Google Cloud and AWS has been impressive, with over 3,500 enterprise projects started using Llama 2 models.

- Innovators: Numerous startups and innovators are building their generative AI products on top of Llama. Companies such as Anyscale, Replicate, Snowflake, LangSmith, Scale AI, and many others have adopted Llama 2, and companies like DoorDash are testing it extensively before launching new LLM-driven features.

- Crowd-sourced optimization: The open-source community has warmly welcomed Meta’s models. So far, over 7,000 fine-tuned derivatives have been released on Hugging Face. These derivatives have improved performance on common benchmarks by nearly 10% on average, with gains of up to 46% on datasets like TruthfulQA.

- Developer community: There are currently over 7,000 GitHub projects built on or mentioning Llama. The community is creating new tools, libraries, and evaluation methods, as well as compact versions of Llama that extend its reach to edge devices and other platforms. It has also broadened Llama’s capabilities, for example by adding support for larger context windows and more languages.

- Hardware support: Major hardware platforms like AMD, Intel, Nvidia, and Google have optimized Llama 2’s performance through both hardware and software enhancements.

The Llama ecosystem is thriving, with stakeholders at every level, from hardware providers to cloud platforms and startups.

When Code Llama launched, it too was quickly made available on many platforms, further accelerating the community’s pace.

Origins in Research

In recent years, large language models (LLMs) have demonstrated capabilities such as generating creative text, solving mathematical problems, and predicting protein structures. These examples illustrate the vast potential of AI.

The initial project, LLaMA (Llama 1), was created at FAIR by a team primarily focused on formal mathematics. The team recognized that a smaller model, trained in line with the right scaling laws on carefully curated data, could serve as a robust foundation for research applications. The resulting first Llama release sparked innovation around the world: within days, academic researchers had fine-tuned Llama 1 to extend its capabilities, which in turn spurred the community to innovate in many other directions.

Meta’s goal was to make this technology more widely accessible, leading to the development of Llama 2.

Reason for Model Release

Meta strongly believes in the power of the open-source community. It holds that cutting-edge AI technology is safer and better when it is open to all.

For Meta, the value of releasing their models can be summarized in three main areas:

- Research: Releasing the models lets Meta quickly integrate new techniques, tools, and methods developed by the research community, especially in safety.

- Enterprise and commercialization: As more businesses and startups use the models, Meta gains insight into use cases, safe deployment, and potential opportunities.

- Developer ecosystem: LLMs have revolutionized AI development, with new tools and methods emerging daily. Having a common language with the community enables Meta to swiftly adopt these technologies.

This approach is consistent with Meta’s history and philosophy.

The Path Forward

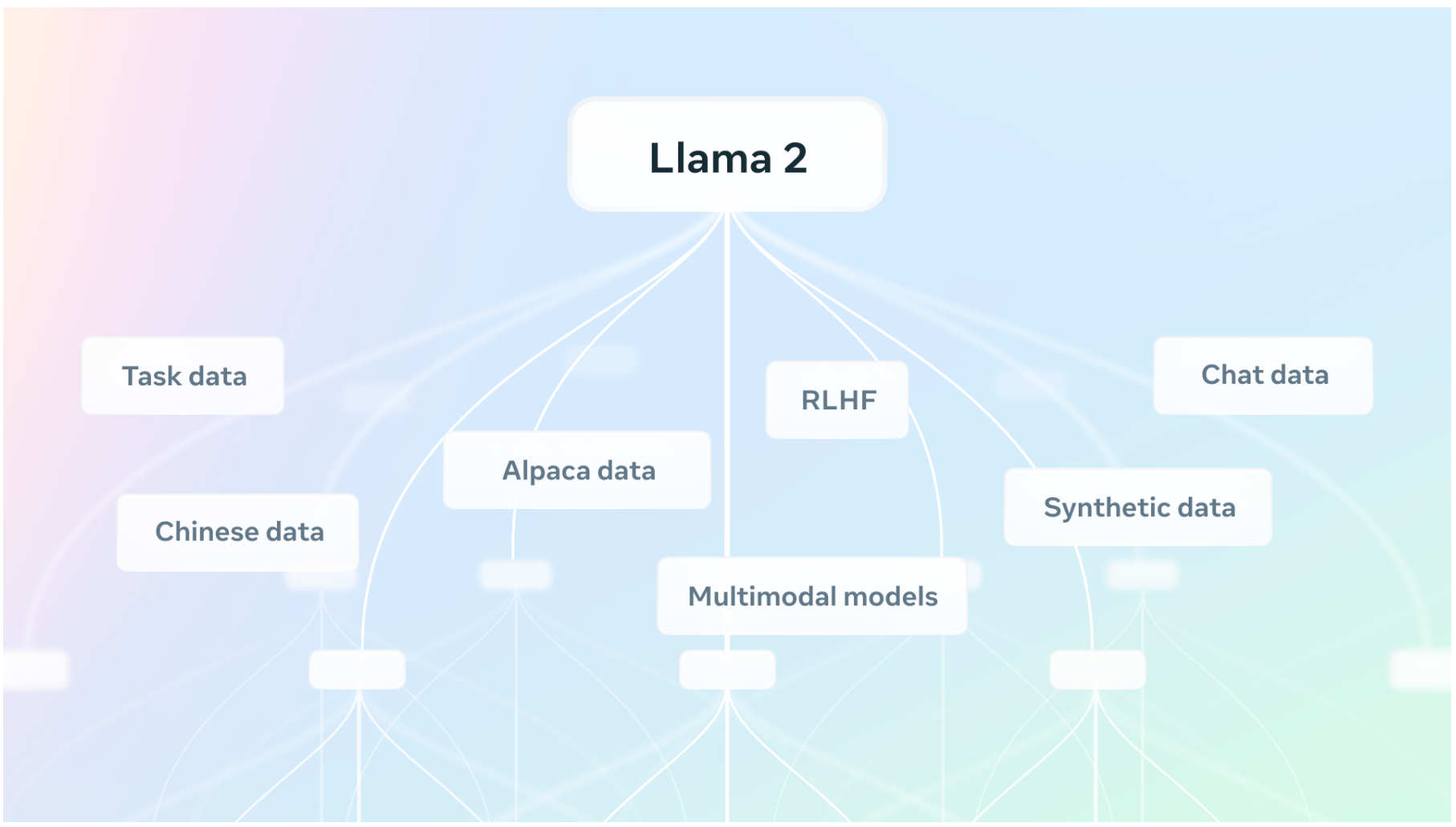

The generative AI domain is evolving rapidly, and everyone is discovering the potential and applications of this technology together. Meta remains dedicated to an open approach for modern AI. Their focus areas include:

- Multimodal: AI should embrace various modalities for more immersive generative experiences.

- Safety and responsibility: Emphasis on safety and responsibility will grow, with the development of new tools and partnerships.

- Community focus: Like PyTorch, Meta views this as a developer community with a voice. They aim to offer platforms for showcasing work, contributing, and sharing stories.

Exploring the Llama Family Further

During the Meta Connect keynote, Meta discussed its Llama models and the future of open access at length, and shared its latest advancements.

For those interested in delving deeper:

- Download and interact with Llama 2.

- Attend Connect Sessions and workshops focused on Llama models.

- Visit ai.meta.com/llama to access papers, guidelines, policies, and learn about partners supporting the Llama ecosystem.
