Meta has released Code Llama, a family of large language models for code based on Llama 2. It provides state-of-the-art performance among open models, infilling capabilities, support for large input contexts, and zero-shot instruction-following ability for programming tasks. Meta provides multiple flavors to cover a wide range of applications: foundation models (Code Llama), Python specializations (Code Llama – Python), and instruction-following models (Code Llama – Instruct), with 7B, 13B and 34B parameters each. All models are trained on sequences of 16k tokens and show improvements on inputs with up to 100k tokens. The 7B and 13B Code Llama and Code Llama – Instruct variants support infilling based on surrounding content.

Code Llama reaches state-of-the-art performance among open models on several code benchmarks, with scores of up to 53% and 55% on HumanEval and MBPP, respectively. Notably, Code Llama – Python 7B outperforms Llama 2 70B on HumanEval and MBPP, and all Code Llama models outperform every other publicly available model on MultiPL-E. Meta has released Code Llama under a permissive license that allows for both research and commercial use.

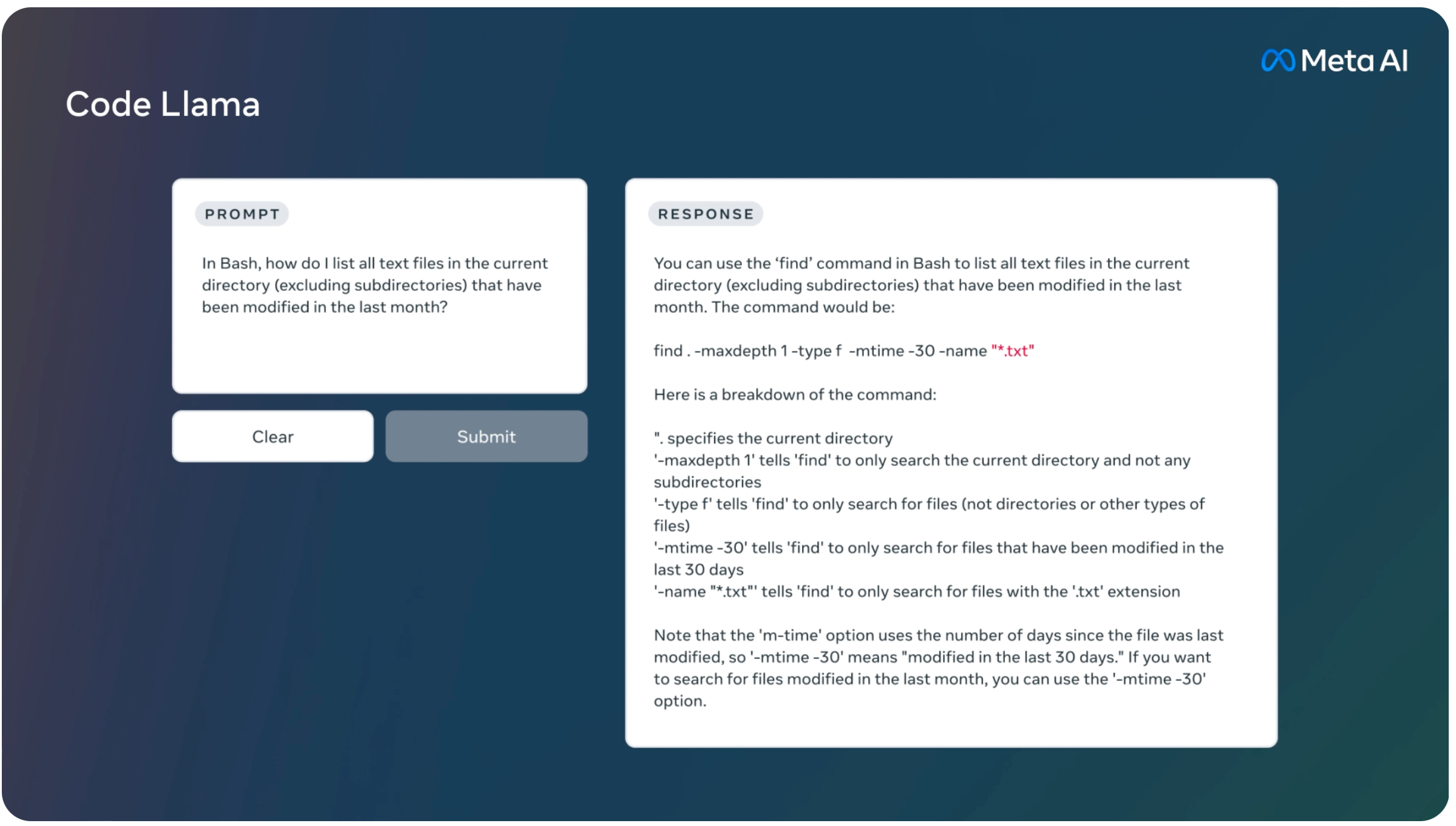

Below is an excerpt from the Code Llama model card document.

The Code Llama models family

Code Llama

The Code Llama models constitute foundation models for code generation. They come in three model sizes: 7B, 13B and 34B parameters. The 7B and 13B models are trained using an infilling objective (Section 2.3) and are suited, for example, to completing code in the middle of a file in an IDE. The 34B model was trained without the infilling objective. All Code Llama models are initialized with Llama 2 model weights and trained on 500B tokens from a code-heavy dataset (see Section 2.2 for more details). They are all fine-tuned to handle long contexts as detailed in Section 2.4.
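To make the idea of an infilling objective more concrete, here is a minimal sketch. The excerpt above does not spell out the training format, so this assumes a common fill-in-the-middle formulation: a document is split into a prefix, a middle and a suffix, and the pieces are rearranged so that the model sees the prefix and suffix before predicting the middle. The sentinel strings and the function names `split_document` and `format_psm` are placeholders for illustration, not the actual tokens or code used for Code Llama.

```python
import random

# Placeholder sentinel strings (assumption); a real tokenizer would use
# dedicated special tokens rather than plain text markers.
PRE, SUF, MID, EOT = "<PRE>", "<SUF>", "<MID>", "<EOT>"


def split_document(document, rng):
    """Split a document into (prefix, middle, suffix) at two random character positions."""
    a, b = sorted(rng.randint(0, len(document)) for _ in range(2))
    return document[:a], document[a:b], document[b:]


def format_psm(prefix, middle, suffix):
    """Arrange the pieces in prefix-suffix-middle order, so the middle is predicted last."""
    return f"{PRE}{prefix}{SUF}{suffix}{MID}{middle}{EOT}"


rng = random.Random(0)
source = "def add(a, b):\n    return a + b\n"
prefix, middle, suffix = split_document(source, rng)
training_example = format_psm(prefix, middle, suffix)
```

At inference time, an IDE plugin would supply the text before and after the cursor as prefix and suffix and let the model generate the middle.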

Code Llama – Python

The Code Llama – Python models are specialized for Python code generation and also come in sizes of 7B, 13B, and 34B parameters. They are designed to study the performance of models tailored to a single programming language, compared to general-purpose code generation models. Initialized from Llama 2 models and trained on 500B tokens from the Code Llama dataset, Code Llama – Python models are further specialized on 100B tokens using a Python-heavy dataset. All Code Llama – Python models are trained without infilling and subsequently fine-tuned to handle long contexts.

Code Llama – Instruct

The Code Llama – Instruct models are based on Code Llama and fine-tuned with an additional approx. 5B tokens to better follow human instructions. More details on Code Llama – Instruct can be found in Section 2.5.

Dataset

We train Code Llama on 500B tokens during the initial phase, starting from the 7B, 13B, and 34B versions of Llama 2. As shown in Table 1, Code Llama is trained predominantly on a near-deduplicated dataset of publicly available code. We also source 8% of our samples from natural language datasets related to code. This dataset contains many discussions about code and code snippets included in natural language questions or answers.

To help the model retain natural language understanding skills, we also sample a small proportion of our batches from a natural language dataset. Data is tokenized via byte pair encoding, employing the same tokenizer as Llama and Llama 2. Preliminary experiments suggested that adding batches sampled from our natural language dataset improves the performance of our models on MBPP.

Training details

Optimization

Our optimizer is AdamW (Loshchilov & Hutter, 2019) with β1 and β2 values of 0.9 and 0.95. We use a cosine schedule with 1000 warm-up steps, and set the final learning rate to be 1/30th of the peak learning rate. We use a batch size of 4M tokens, which are presented as sequences of 4,096 tokens each. Despite the standard practice of using lower learning rates in fine-tuning stages than in pre-training stages, we obtained best results when retaining the original learning rate of the Llama 2 base model. We carry these findings to the 13B and 34B models, and set their learning rates to 3e−4 and 1.5e−4, respectively. For Python fine-tuning, we set the initial learning rate to 1e−4 instead. For Code Llama – Instruct, we train with a batch size of 524,288 tokens and on approx. 5B tokens in total.
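The schedule described above can be sketched as follows. This is an illustration under stated assumptions, not the training code: the warm-up is assumed to be linear (the text only gives its length), "4M tokens" is read as 4 × 2^20 tokens, and the function name `lr_at_step` is hypothetical.

```python
import math


def lr_at_step(step, peak_lr, total_steps, warmup_steps=1000, final_ratio=1 / 30):
    """Linear warm-up (assumed) followed by cosine decay to peak_lr * final_ratio."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    # Fraction of the decay phase completed, clamped to [0, 1].
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    min_lr = peak_lr * final_ratio
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return min_lr + (peak_lr - min_lr) * cosine


# Batch arithmetic, assuming "4M tokens" means 4 * 2**20 tokens.
tokens_per_batch = 4 * 2**20
sequences_per_batch = tokens_per_batch // 4096
# Approximate number of optimizer steps needed to see 500B tokens.
total_steps = 500 * 10**9 // tokens_per_batch

# Example: the 13B peak learning rate of 3e-4 at a few points in training.
for step in (0, 999, total_steps // 2, total_steps):
    print(step, lr_at_step(step, 3e-4, total_steps))
```

With a peak of 3e−4, the rate decays to 1e−5 by the final step, which is the 1/30 ratio quoted in the text.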

Long context fine-tuning

For long context fine-tuning (LCFT), we use a learning rate of 2e−5, a sequence length of 16,384, and reset RoPE frequencies with a base value of θ = 10^6. The batch size is set to 2M tokens for model sizes 7B and 13B and to 1M tokens for model size 34B. Training lasts for 10,000 gradient steps by default. We observed instabilities in downstream performance for certain configurations, and hence set the number of gradient steps to 11,000 for the 34B models and to 3,000 for Code Llama 7B.
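To illustrate what changing the RoPE base does, the sketch below computes rotary frequencies using the standard RoPE formula, frequency_i = base^(−2i/d) for i = 0 … d/2 − 1. The head dimension of 128 and the comparison base of 10,000 are assumptions for illustration (they are the values commonly used in Llama 2 style models, not figures from the text above), and `rope_frequencies` is a hypothetical helper name.

```python
import math


def rope_frequencies(head_dim, base):
    """Per-pair rotation frequencies: base ** (-2i / head_dim) for i in [0, head_dim / 2)."""
    return [base ** (-2.0 * i / head_dim) for i in range(head_dim // 2)]


def longest_wavelength(head_dim, base):
    """Number of positions needed for the slowest-rotating pair to complete one full turn."""
    return 2 * math.pi / rope_frequencies(head_dim, base)[-1]


head_dim = 128  # assumed attention head dimension
default_freqs = rope_frequencies(head_dim, 10_000.0)       # assumed original base
long_ctx_freqs = rope_frequencies(head_dim, 1_000_000.0)   # base used for LCFT

print(longest_wavelength(head_dim, 10_000.0))
print(longest_wavelength(head_dim, 1_000_000.0))
```

A larger base slows the rotation of every pair except the first, so positions that are far apart remain distinguishable over much longer sequences, which is consistent with the goal of fine-tuning on 16,384-token inputs.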
