Navigating large language models like Llama 2 requires both creativity and technical know-how. This article explores the intricacies of Llama 2 and sheds light on how best to structure chat prompts. We will cover prompting Llama 2, the role of system prompts, selecting the right Llama model, choosing between Llama, ChatGPT and Claude AI, and other essential tips.

Mastering Llama 2 is an ongoing process, but by the end of this guide, you’ll be on your way to becoming a Llama expert.

System Prompts

A system prompt is a piece of text attached to the start of a prompt, often used to guide or limit the model’s responses.

Imagine wanting a chatbot with a pirate’s voice. One approach would be to add “you are a pirate” before every prompt, but that quickly becomes repetitive. Instead, set “You are a pirate” as the system prompt once, and the model keeps that persona without constant reminders.

System prompts can also steer Llama toward more professional responses; a phrase such as “Respond as if you’re addressing technical queries” works well. Llama 2’s responsiveness to system prompts isn’t a coincidence, though.

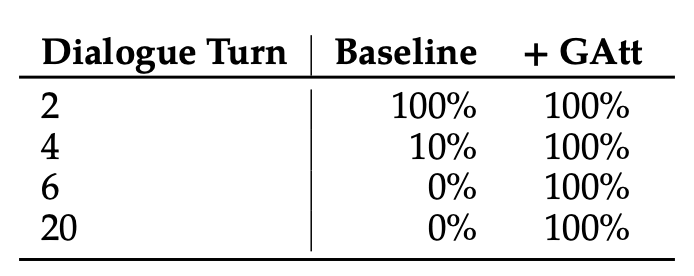

The Llama 2 paper reported that early versions of the chat model often forgot the initial instruction after a few turns of dialogue. To tackle this, Meta introduced a training technique called Ghost Attention (GAtt). Thanks to GAtt, Llama 2’s adherence to system prompts has improved significantly.

Even so, GAtt has limits: the paper reports that adherence holds for 20 or more turns, but only until the conversation reaches the end of the context window.

Why is it relevant?

In chatbot applications, controlling the model’s behavior is crucial. System prompts are your key to this control, defining Llama 2’s persona or the boundaries of its responses. Keep them concise, as they count toward the context window. Writing system prompts is more art than science, so feel free to experiment. Remember: the world is as limitless as a Llama’s imagination.
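As a sketch of where a system prompt actually goes: in the prompt format used by Meta’s reference code for the Llama 2 chat model, the system prompt is placed inside the first [INST] block, wrapped in <<SYS>> and <</SYS>> markers. The helper below (build_prompt is a hypothetical name, not an official API) illustrates that layout for a single turn only; check the template your serving stack expects before relying on it.

```python
# Markers used by Meta's Llama 2 reference code for the chat format.
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_prompt(system_prompt: str, user_message: str) -> str:
    """Return a single-turn Llama 2 chat prompt that includes a system prompt.

    The system prompt is not a separate message: it is placed inside the
    first [INST] block, wrapped in <<SYS>> markers, ahead of the user's text.
    """
    system_block = f"{B_SYS}{system_prompt.strip()}{E_SYS}"
    return f"{B_INST} {system_block}{user_message.strip()} {E_INST}"


if __name__ == "__main__":
    print(build_prompt("You are a pirate.", "How is the weather today?"))
```

Because the system prompt lives inside the prompt text, it consumes tokens from the context window on every request, which is another reason to keep it short.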

Formatting Chat Prompts

Signify user input using [INST] [/INST] tags.

When designing a chat with Llama, wrap each user input in [INST] and [/INST]. Model replies remain untagged.

For instance:

[INST] Hi! [/INST] Hello! How are you? [INST] I'm well, can you help with something? [/INST]

What if you used “User:” and “Assistant:” labels instead of [INST] tags? Results may look fine at first, but as the conversation grows, Llama starts appending “Assistant:” to its responses, because the chat model was fine-tuned to recognize [INST] tags, not these labels.
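Assembling this format by hand gets error-prone as a conversation grows, so here is a minimal sketch in Python (format_chat is a hypothetical name). It wraps each user turn in [INST] tags and leaves replies untagged, as described above. Meta’s reference implementation also wraps each completed exchange in beginning- and end-of-sequence tokens (often written <s> and </s>); they are omitted here to keep the output in the same plain-text shape as the example, so add them if your serving stack expects them.

```python
from typing import List, Tuple

B_INST, E_INST = "[INST]", "[/INST]"


def format_chat(history: List[Tuple[str, str]], next_user_message: str) -> str:
    """Flatten a conversation into Llama 2's [INST] chat format.

    history holds completed (user, assistant) exchanges, oldest first.
    User turns are wrapped in [INST] ... [/INST]; assistant replies stay untagged.
    """
    parts = []
    for user_msg, assistant_msg in history:
        parts.append(f"{B_INST} {user_msg.strip()} {E_INST} {assistant_msg.strip()}")
    # End with an open user turn so the model writes the next reply.
    parts.append(f"{B_INST} {next_user_message.strip()} {E_INST}")
    return " ".join(parts)


if __name__ == "__main__":
    history = [("Hi!", "Hello! How are you?")]
    print(format_chat(history, "I'm well, can you help with something?"))
```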

Understanding Context Windows

Tokens are the units of text that models read. Where humans see words, models see tokens; as a rule of thumb, one token is roughly three-quarters of an English word.

A context window is the maximum number of tokens a model can handle at once, much like its short-term memory.

With a 4096-token context window, Llama 2 can handle roughly 3,000 words (4096 × 0.75 ≈ 3,072), and that budget covers both your prompt and the model’s reply. Exceed it, and you’ll hit a wall: older parts of the conversation must be truncated.
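The arithmetic above can be turned into a quick budget check. The sketch below is an approximation built on stated assumptions: it estimates tokens from word counts using the three-quarters rule instead of running Llama 2’s actual tokenizer, reserves an arbitrary 512 tokens for the reply, and truncates by dropping the oldest messages first. The function names are hypothetical.

```python
import math
from typing import List

CONTEXT_WINDOW = 4096   # Llama 2's context length, in tokens
WORDS_PER_TOKEN = 0.75  # rule of thumb: one token is about 3/4 of an English word


def estimate_tokens(text: str) -> int:
    """Rough token count derived from the word count (not a real tokenizer)."""
    words = len(text.split())
    return math.ceil(words / WORDS_PER_TOKEN)


def fit_to_window(messages: List[str], reserve_for_reply: int = 512) -> List[str]:
    """Drop the oldest messages until the remaining ones fit the context window.

    reserve_for_reply keeps room for the model's answer, since the prompt
    and the response share the same window.
    """
    budget = CONTEXT_WINDOW - reserve_for_reply
    kept: List[str] = []
    used = 0
    # Walk from newest to oldest so the most recent turns survive.
    for message in reversed(messages):
        cost = estimate_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    print(int(CONTEXT_WINDOW * WORDS_PER_TOKEN))  # approximate word capacity
    history = ["word " * 1500, "word " * 1000, "word " * 500]
    print([estimate_tokens(m) for m in fit_to_window(history)])
```

Dropping the oldest turns is only one strategy; if you use a system prompt, you would likely want to keep it and trim only the turns after it. For exact counts, use the model’s own tokenizer.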

Weight Differences: 7b vs. 13b vs. 70b

As Llama 2’s parameter count grows, it becomes more knowledgeable but slower, not unlike real llamas.

- Llama 2 7b: Quick but basic. Ideal for summaries.

- Llama 2 13b: Balances speed and comprehension. Great for creative endeavors.

- Llama 2 70b: The most informed variant. Perfect for in-depth tasks.

We’ve explained the differences in detail in our Llama 2 model sizes (7B, 13B, 70B) article.

Chat vs. Base Variants

Meta released two sets of Llama 2 weights: chat and base. The chat model is fine-tuned for dialogue, while the base model is not; however, the base model has its merits, for example as a starting point for your own fine-tuning.

Is Llama 2 better than ChatGPT and Claude AI?

Llama 2 offers advantages that don’t show up in a head-to-head comparison with ChatGPT or Claude AI. First, it’s open-source, giving you full control over the model’s weights and code. Because no one can update the model behind your back, its behavior stays consistent over time.

Additionally, when you host Llama 2 yourself, your data isn’t transmitted to or retained on OpenAI’s servers. And because Llama 2 can run on local devices, you can keep the same model in development and production. You can even use Llama without an internet connection!

Useful Tips

- Tweak the temperature to adjust randomness.

- Tell Llama about tools it can use; the results may surprise you.

- Llama 2 shines in some areas where even ChatGPT falters.
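To see what the temperature setting does, here is a minimal sketch of temperature-scaled softmax, the standard way samplers turn a model’s raw next-token scores (logits) into probabilities. It illustrates the general technique, not the exact sampling code of any particular Llama 2 deployment, and the candidate tokens and scores are made up.

```python
import math
from typing import Dict


def softmax_with_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Turn raw next-token scores into probabilities, scaled by temperature.

    Dividing by a temperature below 1 sharpens the distribution (more
    predictable output); above 1 flattens it (more random output).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


if __name__ == "__main__":
    # Hypothetical scores for three candidate next tokens.
    logits = {"Ahoy": 2.0, "Hello": 1.0, "Greetings": 0.5}
    for t in (0.2, 1.0, 2.0):
        probs = softmax_with_temperature(logits, t)
        print(t, {tok: round(p, 3) for tok, p in probs.items()})
```

At 0.2 nearly all the probability lands on the top token, while at 2.0 the alternatives become much more likely, which is why higher temperatures produce more varied, and sometimes less coherent, text.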

In a nutshell:

- Structure chat prompts with [INST] [/INST].

- Truncate prompts exceeding the context window.

- Direct Llama with system prompts.

- For factual questions, Llama 2 70b trumps GPT-3.5. Plus, its open-source nature brings extra advantages.

- Vary the temperature and familiarize Llama with tools.

- Dive in and explore Llama 2, and share your experiences.
