Recent developments in generative AI have unveiled its capability to transform our daily lives and professional environments. At the forefront of this change is Meta’s creation, Llama 2. As a testament to its potential, Microsoft extended its collaboration with Meta to support the Llama 2 family of models on platforms such as Azure and Windows.

Llama 2 represents Meta’s next evolutionary step in large language models. This model offers developers and companies a robust platform to craft generative AI-driven tools and solutions. In this partnership, Microsoft stands as Meta’s chosen partner and ensures the seamless integration of the latest Llama 2 variant for commercial customers.

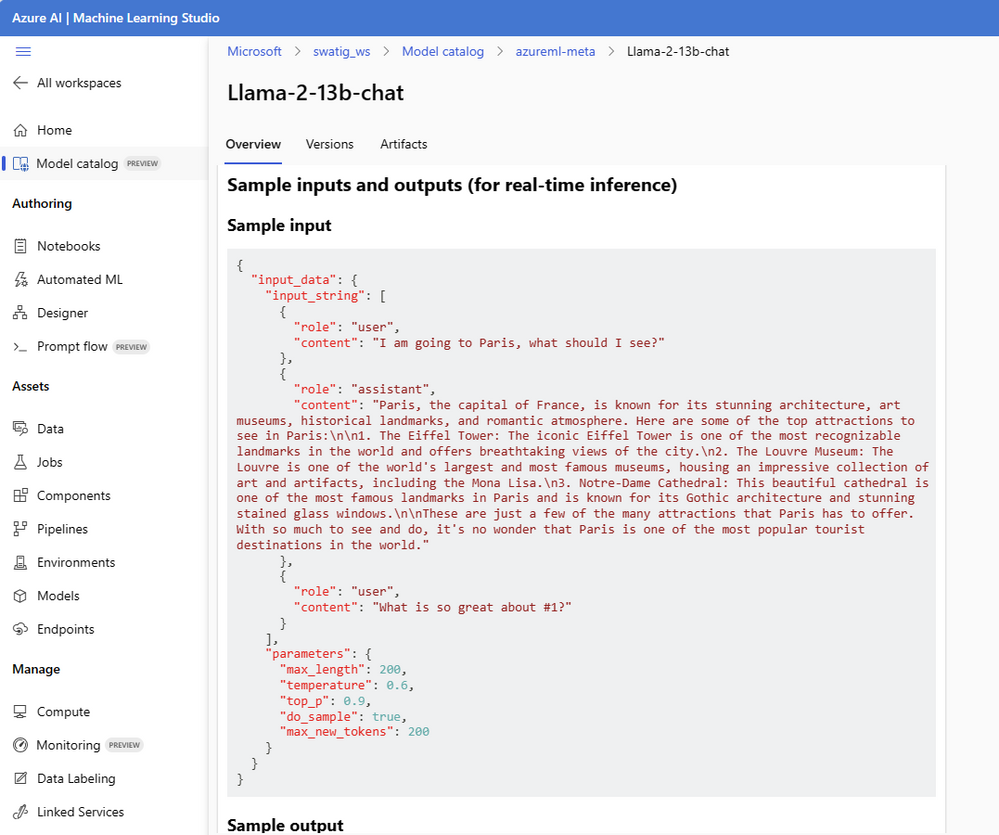

Now, enthusiasts and professionals can access Llama 2 in Azure Machine Learning’s model catalog. This catalog, still in public preview, serves as a central hub for foundation models, giving users a straightforward path to discover, customize, and deploy large foundation models. Adding Llama 2 to the catalog lets users work with the model without worrying about infrastructure or compatibility.

This support covers fine-tuning and evaluation of the models and incorporates optimization techniques such as DeepSpeed and ORT (ONNX Runtime). Users also benefit from LoRA (Low-Rank Adaptation of Large Language Models), which significantly reduces the memory and compute needed for fine-tuning. Any deployment of Llama 2 on Azure includes Azure AI Content Safety by default, reinforcing responsible and secure AI practice.
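To give a rough sense of why LoRA reduces memory and compute, the sketch below compares the trainable parameters of one full weight matrix with those of its two low-rank factors. The matrix size (4096 x 4096) and the rank (8) are illustrative assumptions, not values taken from Azure's fine-tuning defaults, and the helper names are hypothetical.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole d_out x d_in weight matrix is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters in LoRA's two factors: A (rank x d_in) and B (d_out x rank)."""
    return rank * d_in + d_out * rank


# Illustrative assumption: a single square projection matrix and a small rank.
d_model = 4096
rank = 8

full = full_params(d_model, d_model)
lora = lora_params(d_model, d_model, rank)

print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {rank}): {lora:,} trainable parameters")
print(f"fraction trained: {lora / full:.4%}")
```

Because only the small factors receive gradients and optimizer state, the savings grow as the rank shrinks relative to the matrix dimensions.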

Starting with Llama 2 on Azure

Navigate to the model catalog, where models are organized into collections. By selecting the ‘Introducing Llama 2’ tile or filtering by the ‘Meta’ collection, users can explore Llama 2. This collection contains pretrained and fine-tuned versions of the Llama 2 text generation models. For dialogue-driven applications, the fine-tuned Llama-2-chat variants are the intended choice.

The model card is a comprehensive resource detailing training data, capabilities, limitations, and the mitigations Meta has already built in. This card helps users judge whether the model suits a specific project. To shape outputs, users can adjust parameters such as ‘temperature’, ‘top_p’, and ‘max_new_tokens’, which control how random the sampling is, how much of the probability mass is considered, and how long a response may be.
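As a rough illustration of what these three parameters usually do during text generation, here is a minimal sketch of temperature scaling and top-p (nucleus) sampling in plain Python. It is not the Azure or Llama 2 implementation; `next_logits` and `toy_logits` are hypothetical stand-ins for a real model.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the smallest set of most-likely tokens whose total probability reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}  # renormalized over the kept tokens


def generate(next_logits, temperature, top_p, max_new_tokens, seed=0):
    """Sample max_new_tokens token ids (this toy has no stop token)."""
    rng = random.Random(seed)
    tokens = []
    for _ in range(max_new_tokens):
        probs = softmax_with_temperature(next_logits(tokens), temperature)
        candidates = top_p_filter(probs, top_p)
        ids = list(candidates)
        weights = [candidates[i] for i in ids]
        tokens.append(rng.choices(ids, weights=weights, k=1)[0])
    return tokens


def toy_logits(tokens):
    """Hypothetical model: always returns the same scores over a 4-token vocabulary."""
    return [2.0, 1.0, 0.5, -1.0]


sample = generate(toy_logits, temperature=0.7, top_p=0.9, max_new_tokens=5)
print(sample)
```

Lowering `temperature` or `top_p` makes the output more predictable, while `max_new_tokens` simply caps how many tokens are generated.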

It’s crucial to recognize that Llama 2 models require specific compute: GPU SKUs based on V100 or A100. The compatible SKUs for each model are listed beside the compute selection in the respective wizards.

Azure ML offers flexibility in fine-tuning, with options to modify parameters like epochs, learning rate, and batch size. Users can further employ optimizations like LoRA, DeepSpeed, and ORT to speed up training and conserve resources.
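To make the interplay of these settings concrete, the sketch below shows how epochs and batch size determine the number of optimizer steps in a fine-tuning run. `FineTuneConfig` is a hypothetical container for illustration only, not an Azure ML class, and the values are arbitrary examples.

```python
import math
from dataclasses import dataclass


@dataclass
class FineTuneConfig:
    """Hypothetical bundle of the hyperparameters named above."""
    epochs: int
    learning_rate: float
    batch_size: int

    def total_steps(self, num_examples: int) -> int:
        """Optimizer steps: batches per epoch (rounded up) times the number of epochs."""
        return math.ceil(num_examples / self.batch_size) * self.epochs


# Arbitrary example values, not recommended defaults.
config = FineTuneConfig(epochs=3, learning_rate=2e-5, batch_size=8)
steps = config.total_steps(num_examples=1000)
print(f"{steps} optimizer steps")
```

Doubling the batch size halves the step count (at the cost of more memory per step), which is one reason these settings are typically tuned together.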

Safety is paramount when deploying AI solutions. Microsoft incorporates an added AI-driven safety layer, Azure AI Content Safety, to offer an extra tier of protection against undesirable outputs. By default, deploying any Llama 2 model will invoke the Azure AI Content Safety feature to curate content for users.

Prompt flow in Azure Machine Learning exemplifies the significance of prompt engineering in the generative AI realm. By integrating Llama 2, users can maximize the model’s capabilities and conceive powerful AI solutions tailored for specific needs.

Conclusion

In sum, by incorporating Llama 2 models into Azure Machine Learning’s model catalog, Microsoft reaffirms its dedication to democratizing AI via top-tier LLMs. The company hopes to usher in a new era where every organization, regardless of size or expertise, can tap into the power of generative AI, and it eagerly awaits the innovations built with Llama 2.

For those eager to begin, here’s how:

- Dive into Llama 2 via Azure AI. Sign up for Azure AI for free and explore Llama 2.

- Further insights into the Meta and Microsoft collaboration are available here.

- Comprehensive guides on the model catalog and prompt flow within Azure Machine Learning can be explored here.