Coding assistants have changed how developers work, but most of them depend on an internet connection, which makes them unusable on flights or anywhere else without access. One way around this limitation is to run the coding assistant entirely offline. This guide walks through setting up such a system with a local Code Llama model, so that suggestions are generated on your own machine rather than on a remote server.

The model in question is Code Llama, an openly available family of code models from Meta built on Llama 2, which brings autocompletion and coding assistance to your local machine. Paired with Cody, it integrates with your development environment and provides coding suggestions without an internet connection. This guide focuses on the 7 billion parameter version of Code Llama, which is small enough to respond almost instantly on capable hardware such as a MacBook Pro M2 Max.

How to use Cody AI with Code Llama

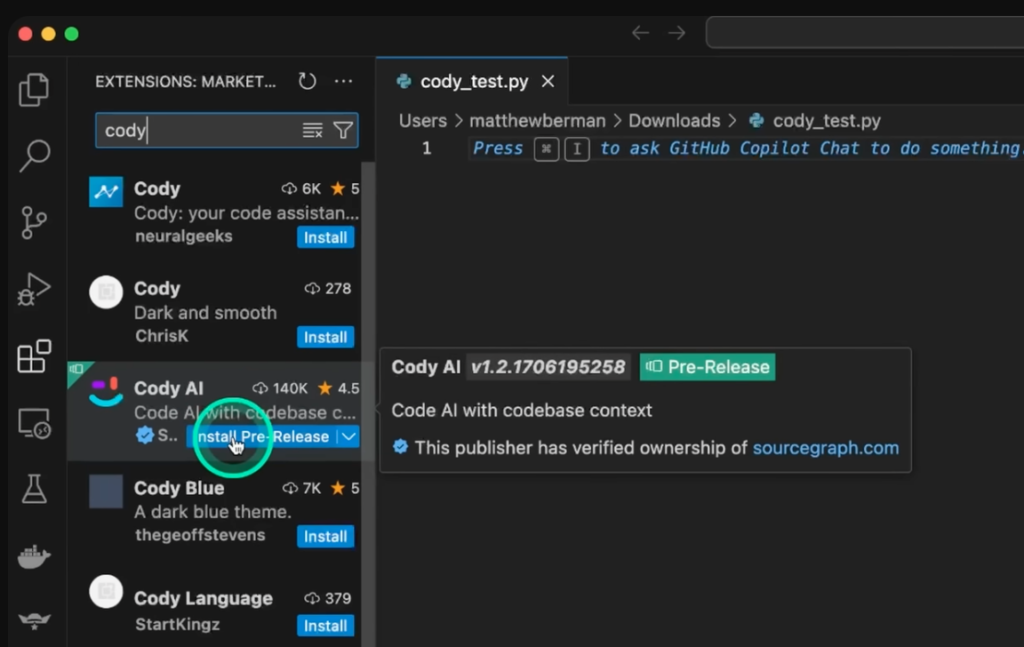

Cody, available as an extension for Visual Studio Code (VS Code), is your gateway to using Code Llama inside the editor. After downloading and installing VS Code from the official website, search for Cody AI in the VS Code extensions marketplace and install it, including the pre-release version where needed.

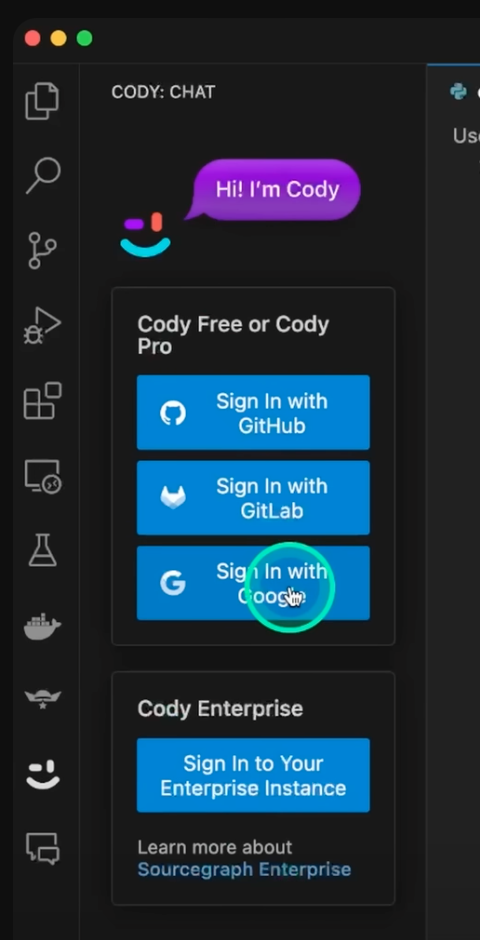

Once installed, a straightforward sign-in process using your preferred method, such as GitHub or Google, unlocks access to the extension.

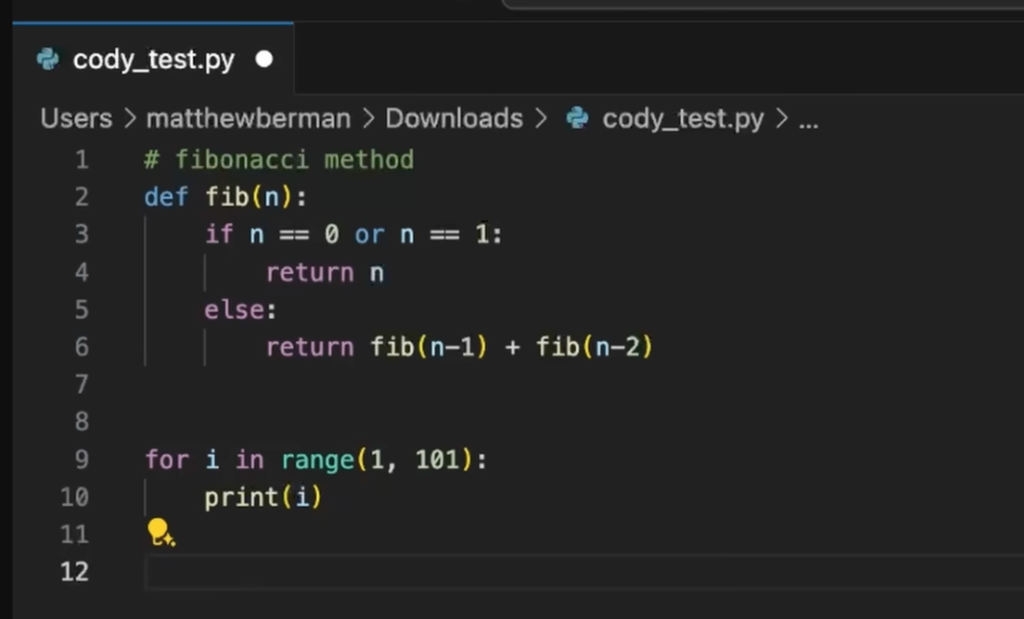

The heart of this setup is the local model, Code Llama 7B, which you download with Ollama, a tool for running models on your own machine. This step ensures that all autocomplete features run locally, without pinging external servers. After configuring Cody to use the local Code Llama model for autocompletion, you’re ready to test it. Simple tasks such as writing a Fibonacci method or printing the numbers 1 to 100 are a quick way to judge the model’s speed and accuracy.
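Before relying on the editor integration, it can help to confirm that the local model answers requests on its own. The following is a minimal sketch, assuming the model is served by Ollama at its default address `http://localhost:11434` and was pulled under the tag `codellama:7b-code`; both values are assumptions, so adjust them to your installation. The `<PRE>`/`<SUF>`/`<MID>` layout is Code Llama’s fill-in-the-middle prompt format, and the helper names are hypothetical. This is not how Cody builds its own requests, only a way to check the model from outside the editor.

```python
import json
import urllib.request

# Assumed values: Ollama's default local address and the 7B code-completion tag.
OLLAMA_URL = "http://localhost:11434"
MODEL = "codellama:7b-code"


def build_infill_prompt(prefix: str, suffix: str) -> str:
    """Format a fill-in-the-middle prompt in Code Llama's <PRE>/<SUF>/<MID> style."""
    return f"<PRE> {prefix} <SUF>{suffix} <MID>"


def build_request(prefix: str, suffix: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": MODEL,
        "prompt": build_infill_prompt(prefix, suffix),
        "stream": False,  # ask for one JSON object instead of a token stream
    }
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prefix: str, suffix: str, timeout: float = 60.0) -> str:
    """Send the request to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prefix, suffix), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    # Requires a running local Ollama server with the model already downloaded.
    print(complete("def fibonacci(n):\n    ", "\n    return result"))
```

If this script prints a completion while the network is disconnected, the model is running locally and the same server should be reachable from the editor.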

Cody isn’t just about autocompletion; it’s a comprehensive tool that aids in understanding and interacting with your code.

From adding documentation based on context to editing code with specific instructions, Cody enhances your coding experience. Its ability to generate unit tests, a task often seen as tedious by developers, underscores the practical benefits of incorporating Cody into your workflow.
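To make the unit-test use case concrete, here is a hand-written illustration of the kind of result one might expect when asking an assistant to test a Fibonacci function. It is not actual Cody output; the function, the test names, and the choice of `unittest` are assumptions made for the example.

```python
import unittest


def fibonacci(n: int) -> int:
    """Return the n-th Fibonacci number, with fibonacci(0) == 0."""
    if n < 0:
        raise ValueError("n must be non-negative")
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


class FibonacciTest(unittest.TestCase):
    def test_base_cases(self):
        self.assertEqual(fibonacci(0), 0)
        self.assertEqual(fibonacci(1), 1)

    def test_known_values(self):
        self.assertEqual(fibonacci(10), 55)
        self.assertEqual(fibonacci(20), 6765)

    def test_negative_input_raises(self):
        with self.assertRaises(ValueError):
            fibonacci(-1)


if __name__ == "__main__":
    unittest.main()
```

Generated tests still deserve a review: check that the edge cases match what your function is meant to do before committing them.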

Compared with other coding assistants such as GitHub Copilot, Cody stands out for its broader range of features and its support for local models. Beyond the technical setup, the main takeaway is that developers can stay productive regardless of their internet connectivity.

Conclusion

Tools like Code Llama are a significant step forward for coding assistants. An offline, locally powered setup gives developers more flexibility and control over their coding environment, and this guide aims to help developers put these tools to work in their own coding practices and projects.
