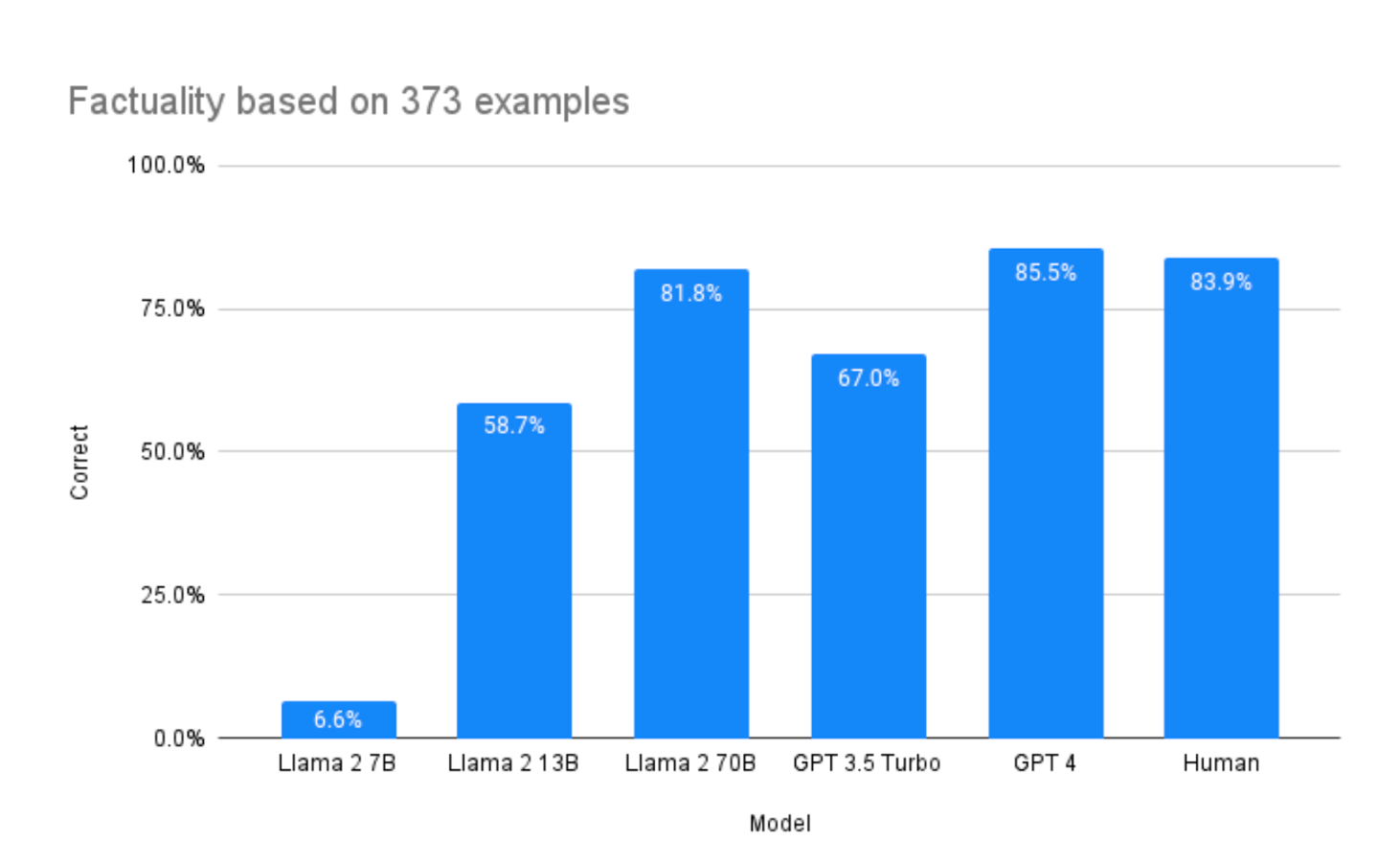

Llama 2 provides summaries with factual accuracy comparable to GPT-4’s, at roughly 1/30th of the cost. In this experiment, the Anyscale team found that Llama-2-70b is almost as strong as gpt-4 at factuality, and considerably better than gpt-3.5-turbo.

The Anyscale team used Anyscale Endpoints to compare the chat-hf fine-tuned versions of Llama 2 7b, 13b, and 70b against OpenAI’s gpt-3.5-turbo and gpt-4. They used a 3-way verified, hand-labeled set of 373 news report statements and, for each statement, presented one correct and one incorrect summary. Each LLM had to decide which of the two was the factually correct summary.
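A minimal sketch of how one test item might be turned into a two-choice prompt. The `LabeledItem` class, `build_prompt` function, and prompt wording are all hypothetical, written for illustration; the team’s actual prompt is not given here. The `correct_first` flag controls which slot (A or B) holds the correct summary, so the same item can be asked in either order.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class LabeledItem:
    """One hand-labeled example: a source news report plus two candidate summaries."""
    source: str
    correct_summary: str
    incorrect_summary: str


# Hypothetical prompt wording for illustration; not the team's actual prompt.
PROMPT_TEMPLATE = (
    "Read the news report below and decide which summary is factually consistent with it.\n\n"
    "Report:\n{source}\n\n"
    "Summary A:\n{summary_a}\n\n"
    "Summary B:\n{summary_b}\n\n"
    "Answer with a single letter: A or B."
)


def build_prompt(item: LabeledItem, correct_first: bool = True) -> Tuple[str, str]:
    """Return (prompt, expected_letter), placing the correct summary in slot A or B."""
    if correct_first:
        summary_a, summary_b, expected = item.correct_summary, item.incorrect_summary, "A"
    else:
        summary_a, summary_b, expected = item.incorrect_summary, item.correct_summary, "B"
    prompt = PROMPT_TEMPLATE.format(source=item.source, summary_a=summary_a, summary_b=summary_b)
    return prompt, expected


# Toy item invented for illustration; not from the team's dataset.
item = LabeledItem(
    source="The city council approved the new budget on Tuesday.",
    correct_summary="The council passed the budget.",
    incorrect_summary="The council rejected the budget.",
)
prompt, expected = build_prompt(item, correct_first=False)
```

Returning the expected letter alongside the prompt keeps the ground truth attached to each ordering, which makes scoring straightforward later.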

Findings

The team identified two significant challenges during the experiment:

- Instruction Compliance: Larger models followed the instructions more reliably. To interpret the smaller models’ free-form responses, the team used another LLM to determine whether each answer meant ‘A’ or ‘B’.

- Ordering Bias: The order in which the two summaries are presented can push a model toward whichever one appears first. By swapping the positions of ‘A’ and ‘B’ and asking again, the team could measure this bias directly.
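Responses that are not a bare letter have to be mapped to ‘A’ or ‘B’ before scoring. A minimal sketch, assuming a strict regex pass for compliant answers and a fallback for everything else: `normalize_answer` is hypothetical, and `judge` is a hypothetical callable standing in for the second LLM, not a specific API.

```python
import re
from typing import Callable, Optional

# Accepts only answers that are essentially a bare letter, e.g. "A", "(b)",
# "Answer: B.", "Summary A". Anything longer is treated as ambiguous.
_STRICT_ANSWER = re.compile(r"^\W*(?:(?:answer|summary)\W*)?([AB])\b\W*$", re.IGNORECASE)


def normalize_answer(raw: str, judge: Optional[Callable[[str], str]] = None) -> Optional[str]:
    """Map a model response to 'A', 'B', or None if it cannot be resolved.

    `judge` is a hypothetical stand-in for a second LLM that reads an
    ambiguous response and replies with a single letter.
    """
    match = _STRICT_ANSWER.match(raw.strip())
    if match:
        return match.group(1).upper()
    if judge is not None:
        # Ambiguous response: defer to the (hypothetical) judge model.
        verdict = judge(raw).strip().upper()
        if verdict in ("A", "B"):
            return verdict
    return None
```

The strict pattern deliberately rejects sentences such as "A careful reading favors the second summary", where a capitalized article could be mistaken for an answer; those go to the judge instead.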

After accounting for these challenges, the results were:

- Human Accuracy: 84% (based on prior research)

- gpt-3.5-turbo: 67.0% (severe ordering bias)

- gpt-4: 85.5%

- Llama-2-7b: Severe ordering bias, resulting in below-chance accuracy (under 50% on this two-choice task)

- Llama-2-13b: 58.9%

- Llama-2-70b: 81.7%
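The percentage-point gaps behind "slightly above" and "slightly below" the human baseline follow directly from the reported figures. This is plain arithmetic on the numbers listed above; the names `REPORTED` and `gap_to_human` are illustrative, and Llama-2-7b is omitted because only "below chance" is reported for it.

```python
HUMAN_BASELINE = 84.0  # % accuracy, from the prior research cited above

# Accuracies (%) as reported above; Llama-2-7b omitted (no exact figure given).
REPORTED = {
    "gpt-4": 85.5,
    "Llama-2-70b": 81.7,
    "gpt-3.5-turbo": 67.0,
    "Llama-2-13b": 58.9,
}


def gap_to_human(accuracy: float, baseline: float = HUMAN_BASELINE) -> float:
    """Percentage-point difference from the human baseline (positive = better)."""
    return round(accuracy - baseline, 1)


# Print models from most to least accurate with their gap to the human baseline.
for model, acc in sorted(REPORTED.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{model:<14} {acc:5.1f}%  {gap_to_human(acc):+5.1f} pts vs. human")
```

gpt-4 sits 1.5 points above the human baseline and Llama-2-70b 2.3 points below it, so the two models are 3.8 points apart.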

For near-human factual accuracy in summarization, use either Llama-2-70b or gpt-4. gpt-4 slightly exceeded the human baseline (85.5% vs. 84%), while Llama-2-70b fell slightly short (81.7%). Llama-2-7b and Llama-2-13b struggled to follow the task’s instructions, and even after another LLM was used to interpret their responses, they showed ordering bias. The smaller Llama models and gpt-3.5-turbo are best avoided for this kind of task.

Additional observations included:

- Better instruction following in gpt-4 and gpt-3.5-turbo than in their open-source counterparts.

- Pronounced ordering bias in gpt-3.5-turbo.

- On cost, Llama 2’s tokenizer produces about 19% more tokens than ChatGPT’s for the same text. Even so, Llama 2 was about 30 times cheaper than GPT-4 for comparable factual summarization.
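The 19% tokenization overhead eats into Llama 2’s per-token price advantage. A minimal sketch of that arithmetic, assuming a single blended per-token price for each model (real pricing often differs for input and output tokens) and that GPT-4 tokenizes text the same way as ChatGPT. The prices below are placeholders in arbitrary units, not actual list prices.

```python
def effective_cost_ratio(
    gpt4_price_per_token: float,
    llama_price_per_token: float,
    llama_token_overhead: float = 1.19,
) -> float:
    """Return how many times cheaper Llama 2 is than GPT-4 for the same input text.

    Cost = tokens * price per token. Text that GPT-4 encodes as T tokens takes
    about T * llama_token_overhead tokens for Llama 2, so T cancels out.
    """
    if llama_price_per_token <= 0 or llama_token_overhead <= 0:
        raise ValueError("prices and overhead must be positive")
    return gpt4_price_per_token / (llama_price_per_token * llama_token_overhead)


# Placeholder prices (arbitrary units), NOT actual list prices.
ratio = effective_cost_ratio(gpt4_price_per_token=35.7, llama_price_per_token=1.0)
print(round(ratio, 2))
```

Under these placeholder numbers, a per-token price gap of 35.7x shrinks to about 30x once the extra tokens are counted; equivalently, a 30x effective saving requires roughly a 35.7x per-token gap.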

Methodology

The evaluations ran quickly on Anyscale Endpoints, and combining Pandas with Ray (particularly Ray Data) kept the experimentation simple: the entire experiment took only about 30 lines of code and roughly 15 minutes. Detailed findings are available in the linked IPython notebook.
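The main speedup comes from sending many independent prompts to hosted models concurrently. The team used Pandas with Ray Data for this; to stay dependency-free, this sketch swaps in the standard library’s `ThreadPoolExecutor` to show the same fan-out pattern. `evaluate_models` and `query_model` are hypothetical names, and `fake_query` is an offline stand-in for a real endpoint call.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def evaluate_models(
    prompts: List[str],
    models: List[str],
    query_model: Callable[[str, str], str],
    max_workers: int = 16,
) -> Dict[str, List[str]]:
    """Send every prompt to every model concurrently and collect the raw responses.

    query_model(model, prompt) is a hypothetical placeholder for a call to a
    hosted model endpoint. results[model][i] is the response to prompts[i].
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Submit all (model, prompt) pairs up front so requests overlap across models.
        futures = {m: [pool.submit(query_model, m, p) for p in prompts] for m in models}
        # .result() blocks until each call finishes and re-raises any exception.
        return {m: [f.result() for f in fs] for m, fs in futures.items()}


def fake_query(model: str, prompt: str) -> str:
    """Offline stand-in so the sketch runs without network access."""
    return f"{model}:{prompt}"


responses = evaluate_models(["p1", "p2"], ["llama-2-70b", "gpt-4"], fake_query)
```

Threads suit this workload because each call mostly waits on the network. With Ray Data, the analogous step would build a dataset with `ray.data.from_pandas` and apply the query function with `map_batches`, which also lets the work spread across a cluster.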

Contributions

The team proposed a method to quantify pairwise ordering bias and suggested order swapping as a potential remedy.
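One way to quantify pairwise ordering bias, sketched here as an assumption rather than the team’s exact metric: ask each question twice, once with the correct summary in slot A and once in slot B, then compare the two runs. `swap_report` and `SwapReport` are hypothetical names. A model whose choice depends only on content answers one A and one B per item, so its `first_position_rate` is 0.5 and its `same_letter_rate` is 0; a purely position-driven model scores 1.0 on both.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class SwapReport:
    accuracy_correct_first: float   # correct summary shown in slot A
    accuracy_correct_second: float  # correct summary shown in slot B
    order_gap: float                # first minus second; near 0 if order-insensitive
    accuracy_both_orders: float     # right in both orderings (strict scoring)
    first_position_rate: float      # share of parsed answers that chose A
    same_letter_rate: float         # same letter in both orderings, i.e. position-driven


def swap_report(pairs: List[Tuple[Optional[str], Optional[str]]]) -> SwapReport:
    """pairs[i] = (answer when correct summary is A, answer when correct summary is B).

    Unparseable answers (None) count as wrong and are excluded from the position rate.
    """
    if not pairs:
        raise ValueError("need at least one answer pair")
    n = len(pairs)
    first_ok = sum(a == "A" for a, _ in pairs)
    second_ok = sum(b == "B" for _, b in pairs)
    both_ok = sum(a == "A" and b == "B" for a, b in pairs)
    same_letter = sum(a is not None and a == b for a, b in pairs)
    parsed = [x for pair in pairs for x in pair if x is not None]
    first_rate = sum(x == "A" for x in parsed) / len(parsed) if parsed else 0.0
    return SwapReport(
        accuracy_correct_first=first_ok / n,
        accuracy_correct_second=second_ok / n,
        order_gap=(first_ok - second_ok) / n,
        accuracy_both_orders=both_ok / n,
        first_position_rate=first_rate,
        same_letter_rate=same_letter / n,
    )


# Toy answers for four items, invented for illustration.
report = swap_report([("A", "B"), ("A", "A"), ("A", "A"), ("B", "B")])
```

Scoring an item as correct only when the model is right in both orderings is one possible rule; it removes credit a model would otherwise earn simply by always picking the first option.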

Recommendations:

- Watch for ordering bias when prompting LLMs to choose between options.

- Anyscale Endpoints works well: serverless Llama 2 greatly streamlines experimentation.

- Pandas is an effective tool for LLM experiments.

- To speed up experiments, consider adding Ray.

Human-Like Accuracy

Llama-2-70b and gpt-4 both reached factuality levels comparable to humans. In this experiment, gpt-4 and Llama-2-70b scored close to each other (85.5% vs. 81.7%), suggesting that the quality gap between open-source and proprietary LLMs is narrowing. Notably, Llama-2-70b outperformed gpt-3.5-turbo.

Can Llama 2 be trusted for factual accuracy? Based on this study, at least for Llama-2-70b, the answer is yes: its performance is close to the human baseline.

Bias in Sequencing

Significant ordering bias appeared in Llama-2-7b and gpt-3.5-turbo and, to a lesser degree, in Llama-2-13b. The larger models, Llama-2-70b and gpt-4, did not show it. Consequently, the affected models may not be suitable for summarization tasks where human-level factuality is essential.

Future work will focus on mitigating ordering bias through careful prompt design.

Final Conclusions

In this study, the team compared the factuality of open-source and proprietary LLMs. Llama-2-70b outperformed gpt-3.5-turbo and came close to matching both humans and gpt-4, which makes it a genuine contender against proprietary LLMs such as OpenAI’s. The findings suggest that Llama-2-70b or gpt-4 offers a high likelihood of human-comparable factual accuracy.

A key takeaway is the importance of examining the data closely to uncover pitfalls such as the ordering bias observed in gpt-3.5-turbo: a model that tends to pick the first option looks accurate whenever the correct summary happens to be listed first. The adage “if it sounds too good to be true, it probably is” applies to LLMs too.

Finally, on cost: Llama 2’s factuality in summaries comes close to GPT-4’s at roughly one-thirtieth of the price.
