Tag: Models

-

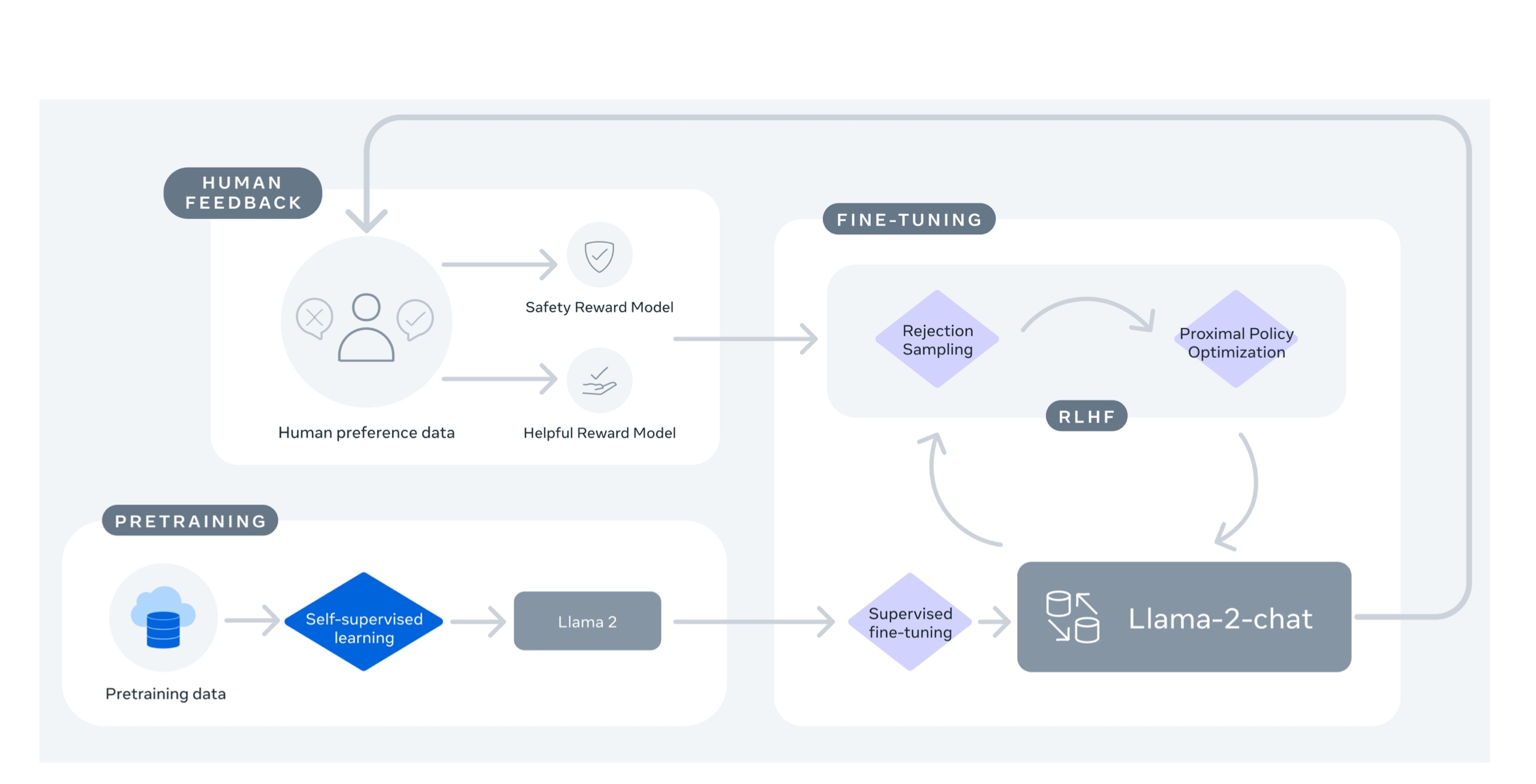

Llama 2 Training

Meta unveiled Llama 2, a series of pretrained and fine-tuned large language models (LLMs) with parameter counts ranging from 7 billion to 70 billion. The fine-tuned variants, termed Llama 2-Chat, are tailored for dialogue applications. These models surpass most open-source chat models on several of the benchmarks Meta assessed. In this article we…

-

Llama 2 Model Card

Meta introduced the Llama 2 series of large language models, with sizes ranging from 7 billion to 70 billion parameters. The fine-tuned variants, Llama 2-Chat, are designed specifically for dialogue applications. Llama 2-Chat outperforms open-source chat models on many of the benchmarks Meta assessed. When gauged for helpfulness and safety in human reviews, they…

-

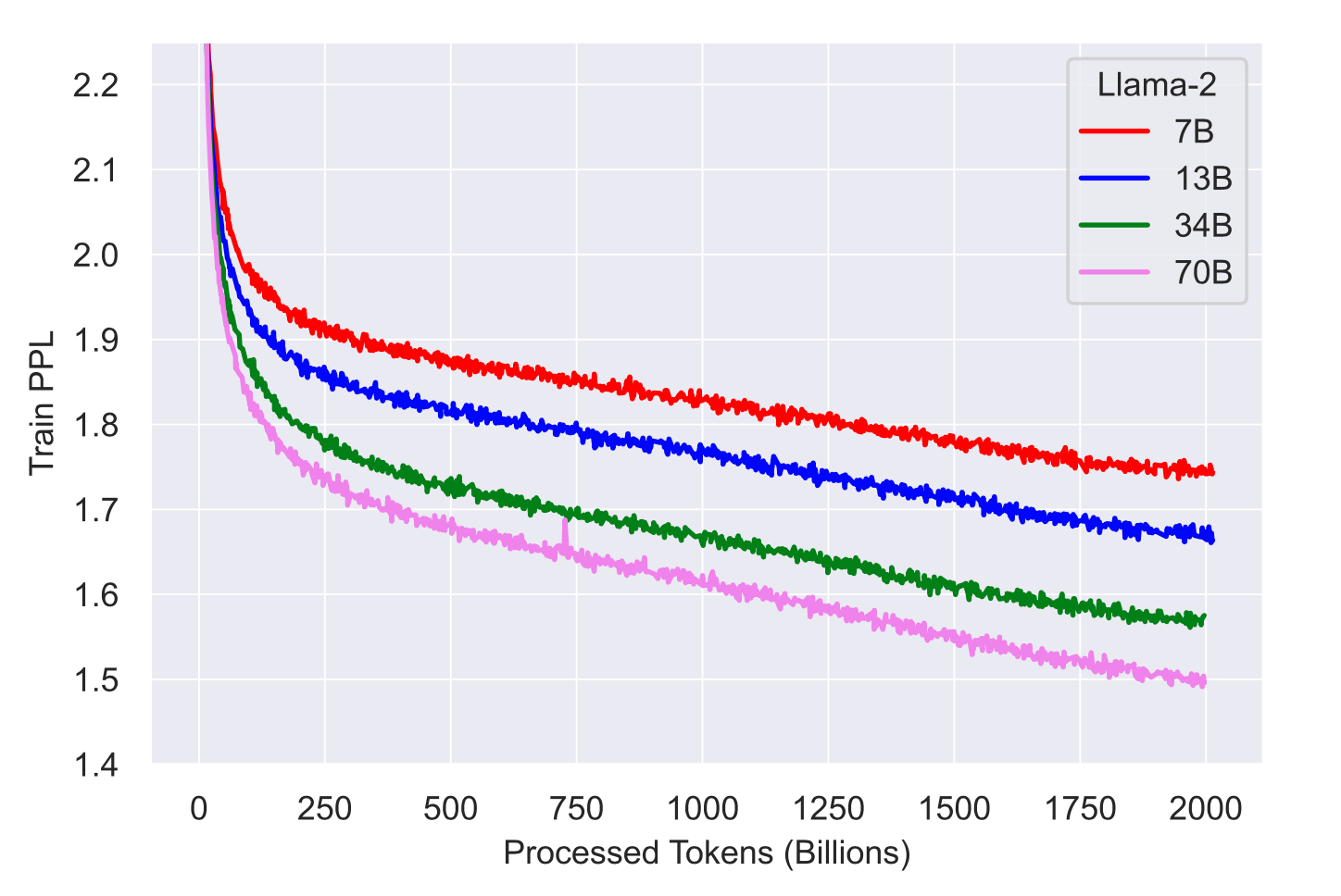

Llama 2 model sizes (7B, 13B, 70B)

All three currently available Llama 2 model sizes (7B, 13B, 70B) are trained on 2 trillion tokens and have double the context length of Llama 1. Llama 2 encompasses a series of generative text models that have been pretrained and fine-tuned, varying in size from 7 billion to 70 billion parameters. Meta’s specially fine-tuned models…