In the race to build powerful yet efficient AI models, a new contender has emerged from the small-model camp. Microsoft’s Phi-3, a tiny model by industry standards, has been posting impressive results, including beating Meta’s larger Llama 3 8B model on several benchmarks. This suggests a shift in the AI landscape, where size might not be everything. Let’s take a closer look at what this means for the future of artificial intelligence.

Microsoft’s Phi-3: A Closer Look

Recently, Meta introduced a new AI model called Llama 3. Its weights are openly available, so anyone can download, use, and modify the model for free under Meta’s license. Llama 3 has proven very capable: in Meta’s published comparisons it even outscores another well-known model, Google’s Gemini 1.5 Pro, on several benchmarks. This was a major achievement for Meta and showcased its progress in AI technology.

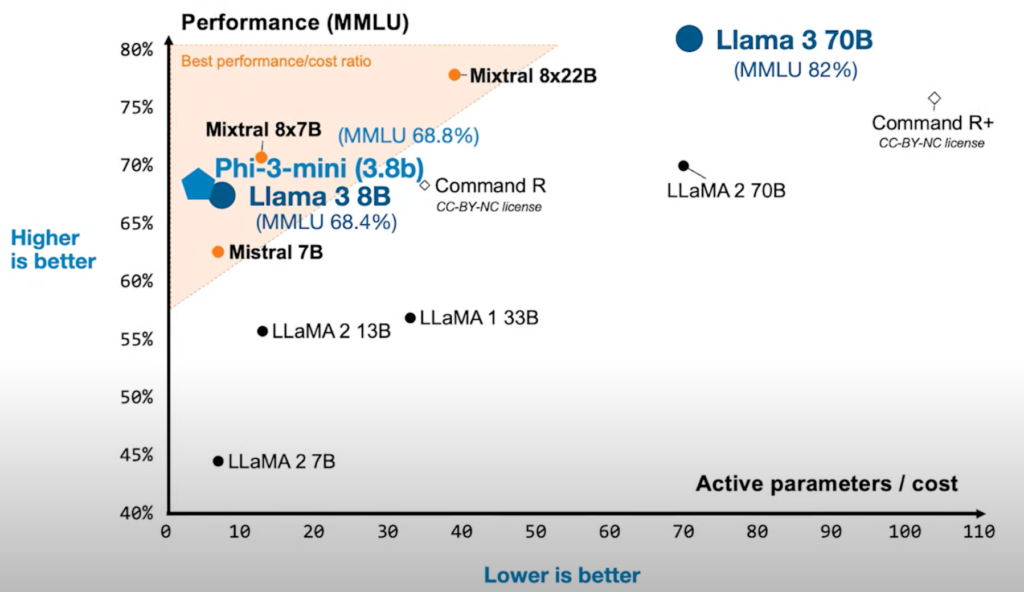

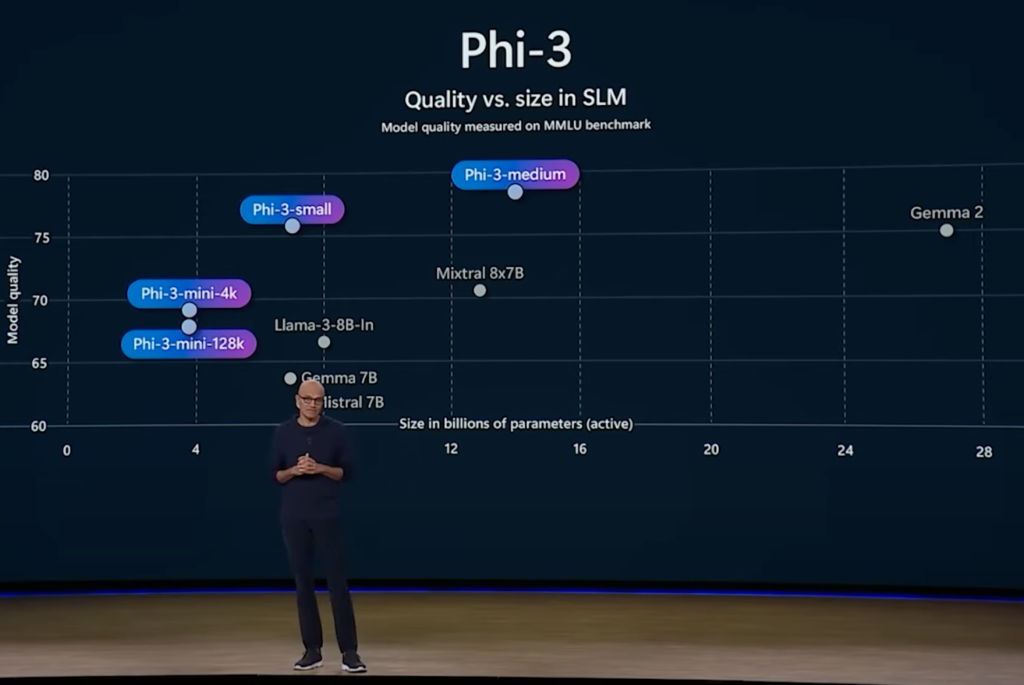

However, Microsoft has introduced an arguably more impressive model named Phi-3. Despite its small size (the Phi-3 mini variant has 3.8 billion parameters), Phi-3 outperforms Meta’s Llama 3 8B on a number of the benchmark tests used to measure the performance of AI models.

These benchmarks compare how well AI models understand and generate text, solve problems, and perform other tasks.

Advantages of Smaller AI Models

One of the main benefits of smaller AI models like Phi-3 is that they are cheaper to operate. Large models require huge computing power, which translates to higher costs for running them. In contrast, smaller models use less computing power, making them more cost-effective. This is particularly advantageous for companies looking to integrate AI into their operations without incurring high expenses.

Companies can now achieve high-quality AI performance without extensive hardware or significant financial investment. This affordability could make AI accessible to far more organizations, helping them improve their operations and stay competitive.

Another significant advantage is that smaller models perform better on personal devices such as phones and laptops. Large models typically need powerful servers to run efficiently, but smaller models can operate smoothly on everyday devices. This makes advanced AI capabilities more accessible to individual users and small businesses.

Smaller AI models like Phi-3 are more suitable for companies with specific needs, often working with internal datasets that are relatively small. Smaller models can process these datasets more effectively, providing faster and more relevant results.

Phi-3’s Design and Capabilities

Microsoft’s work with Phi-2 and Phi-3 suggests that the future of AI might not be about making bigger models but about making smarter, more efficient ones. Phi-3 was built with a block structure and tokenizer that closely resemble those of Meta’s Llama models.

This design choice aims to make Phi-3 easy for the open-source community to adopt. Software libraries, algorithms, and other resources developed for Llama models can be readily applied to Phi-3, saving time and effort when exploring new applications and capabilities.

One of the standout features of Phi-3 models is the quality of their outputs: the answers and solutions they provide tend to be detailed, accurate, and useful. Another notable aspect is context length. The long-context variant of Phi-3 mini accepts up to 128,000 tokens, matching GPT-4 Turbo.

A longer context length lets the model take in more information at once, such as a long document or an extended conversation, and respond to complex queries in greater detail. A large context window does not by itself make a model smarter, but it does let Phi-3 work with inputs that would not fit in a shorter window.
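To get a feel for what a 128,000-token window means in practice, here is a minimal sketch that estimates whether a piece of text would fit. The 4-characters-per-token ratio is only a common rule of thumb for English text and is an assumption here; the real count depends on the tokenizer. The function names and the output reserve are likewise illustrative.

```python
# Rough check of whether a text fits in a model's context window.
# ASSUMPTION: about 4 characters per token, a rule of thumb for English text.
# Real token counts depend on the actual tokenizer and the language used.
CONTEXT_TOKENS = 128_000   # context length quoted in the article
CHARS_PER_TOKEN = 4        # hypothetical heuristic, not a Phi-3 property


def estimate_tokens(text: str) -> int:
    """Estimate the token count of `text` using the characters-per-token heuristic."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(text: str, reserved_for_output: int = 1_000,
                    limit: int = CONTEXT_TOKENS) -> bool:
    """Return True if the estimated prompt plus an output reserve fits in the window."""
    return estimate_tokens(text) + reserved_for_output <= limit


if __name__ == "__main__":
    report = "word " * 80_000  # 400,000 characters of filler text
    print(estimate_tokens(report), fits_in_context(report))
```

Under this heuristic, a 400,000-character document comes to roughly 100,000 tokens and would fit with room to spare for the reply, while a 600,000-character one would not.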

Practical Applications and Future Prospects

Unlike bigger language models, Phi-3 mini is small enough to run on consumer devices like smartphones. It can run locally, without constant internet connectivity or powerful external servers, which makes it a practical choice for everyday users. It is also very efficient in terms of memory usage: when its weights are quantized to 4 bits, the model occupies only about 1.8 GB of memory, making it possible to run directly on smartphones and other consumer electronics.
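The 1.8 GB figure can be sanity-checked with back-of-the-envelope arithmetic: memory for the weights is roughly the number of parameters times the bits per weight. The sketch below assumes a parameter count of 3.8 billion for Phi-3 mini and counts only the weights, ignoring activations, the attention cache, and any quantization overhead, so treat the results as rough estimates.

```python
# Back-of-the-envelope estimate of the memory needed to hold a model's weights.
# ASSUMPTION: Phi-3 mini has about 3.8 billion parameters.
# Only weights are counted; runtime overhead (activations, caches) is ignored.
PHI3_MINI_PARAMS = 3.8e9


def weight_memory_bytes(num_params: float, bits_per_weight: int) -> float:
    """Bytes needed to store `num_params` weights at `bits_per_weight` bits each."""
    return num_params * bits_per_weight / 8


if __name__ == "__main__":
    for bits in (16, 8, 4):
        size = weight_memory_bytes(PHI3_MINI_PARAMS, bits)
        print(f"{bits:>2}-bit weights: {size / 1e9:.2f} GB ({size / 2**30:.2f} GiB)")
```

At 4 bits per weight this works out to about 1.9 GB (roughly 1.8 GiB), in line with the figure above, while the same model at 16 bits would need about four times as much.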

Despite its small size, Phi-3 is powerful. For instance, on an iPhone 14 equipped with an A16 chip, Phi-3 mini can generate more than 12 tokens per second. This makes it suitable for a wide range of applications, including personal assistants on smartphones, real-time language translation apps, and apps requiring natural language understanding like note-taking apps, chatbots, and email clients.
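To see what 12 tokens per second means for a user, the sketch below converts a reply length into an approximate wait time. The reply lengths are illustrative assumptions, and the calculation ignores the time spent reading the prompt, so real latency would be somewhat higher.

```python
# Convert a generation speed into an approximate wait time for a reply.
# The 12 tokens/second figure is the one quoted in the article; the reply
# lengths below are illustrative assumptions.
TOKENS_PER_SECOND = 12.0


def generation_seconds(num_tokens: int,
                       tokens_per_second: float = TOKENS_PER_SECOND) -> float:
    """Seconds needed to generate `num_tokens` at a steady generation speed."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    for label, tokens in (("short answer", 30), ("long answer", 300)):
        print(f"{label}: {tokens} tokens in about {generation_seconds(tokens):.1f} s")
```

At that speed a 30-token answer takes about 2.5 seconds and a 300-token answer about 25 seconds, which is workable for short assistant-style replies but noticeably slow for long ones.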

Microsoft believes the success of Phi-3 is thanks to the data it was trained on. Phi-1 focused on learning to code, while Phi-2 started to understand reasoning. Phi-3 combines the strengths of both, excelling in coding and reasoning. The training approach involved filtering out irrelevant data and using a curriculum that gradually introduced more complex topics.

Conclusion

In the battle of AI models, Microsoft’s Phi-3 has shown that smaller can be mightier. With its impressive performance, cost-effectiveness, and versatility, Phi-3 is paving the way for a future where advanced AI capabilities are more accessible and widely used.

The AI community’s focus will likely be on making these models smarter and more efficient, maximizing their potential while keeping them small. This trend promises a future where AI serves a broad range of applications and users across industries.


Download Phi-3 mini on HuggingFace:

microsoft/Phi-3-mini-128k-instruct
