It turns out that Llama-3, right out of the box, is not heavily censored. In the release blog post, Meta indicated that we should expect fewer prompt refusals, and this appears to be accurate.

For example, if you ask the Llama-3 70B model to tell you a joke about women or men, it responds with a joke rather than a refusal. Previous models would decline such requests on ethical grounds. Llama-3 instead handles them with humor and respect.

Asking Llama-2 to tell a joke:

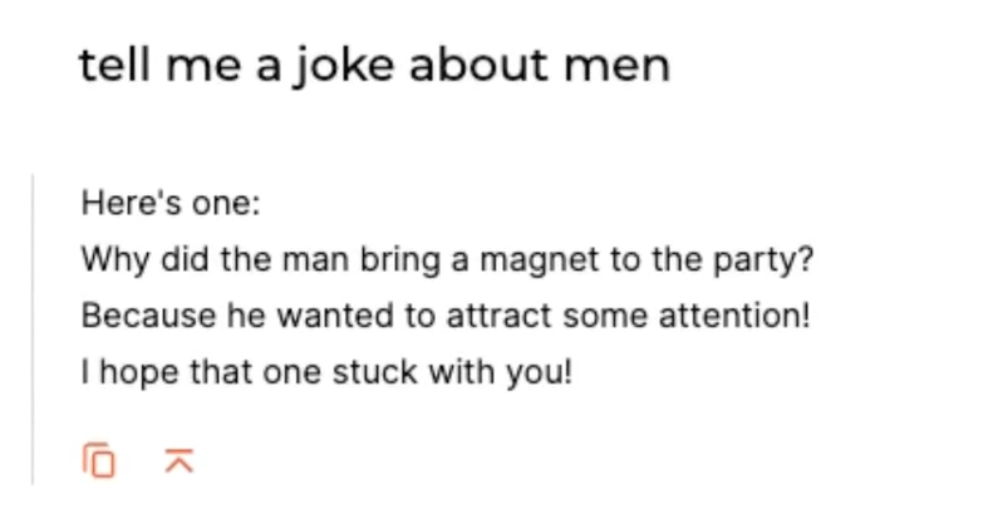

Asking Llama-3 to tell a joke:

Comparing Responses Across Different Platforms

Interestingly, the Meta AI platform returns exactly the same jokes. This suggests that the model is tuned to produce consistent, respectful content for this kind of request. The consistency is notable: the content is clearly controlled, yet it remains engaging and humorous.

This shift points to a more flexible approach to sensitive content, with responses that are both considerate and consistent with ethical guidelines.

Llama-3 also shows a significant improvement in handling more complex and potentially controversial content. For instance, when asked to write a poem criticizing or praising a political figure such as Donald Trump or Joe Biden, Llama-3 writes the poem. Earlier models, by contrast, would refuse to create content that could be seen as promoting or glorifying any individual or group.

Practical Applications in Sensitive Areas

Llama-3's ability to discuss sensitive topics responsibly is particularly valuable in academic and research contexts. For example, previous models would refuse, on ethical grounds, to answer a question about the destructive potential of all the world's uranium if it were fashioned into nuclear weapons.

Llama-3, however, engages with this scenario analytically and informatively, providing detailed insights into the underlying nuclear physics and the hypothetical implications.

Handling Ethically Questionable Requests

When it comes to requests that involve potential harm or unethical actions, such as writing Python code to format a hard drive, Llama-3, like its predecessors, refuses to provide dangerous instructions. This is a crucial aspect of ethical AI behavior, ensuring that AI does not facilitate harmful actions even while being less restricted in other areas of content generation.

Conclusion and Future Prospects

Llama-3’s approach to content generation, which allows more freedom in the types of questions it can address without compromising on ethical standards, represents a significant development in AI technology.

This model provides users with more flexibility in exploring a wide range of topics, including those that are sensitive or complex, without crossing the line into unethical territory.

Meta’s approach with Llama-3, balancing freedom and responsibility, sets a new standard in the AI community. We look forward to future developments, such as the anticipated ‘Dolphin’ fine-tune of Llama-3, which promises to expand the model's capabilities even further across diverse applications.

If you’re interested in learning more about how Llama-3 can be utilized across different use cases, including fine-tuning and integration into various applications, make sure to stay tuned for more updates.

Thank you for reading, and as always, we’ll see you in the next one!

Video Tutorial

You can find the full video tutorial below:
