Blog

-

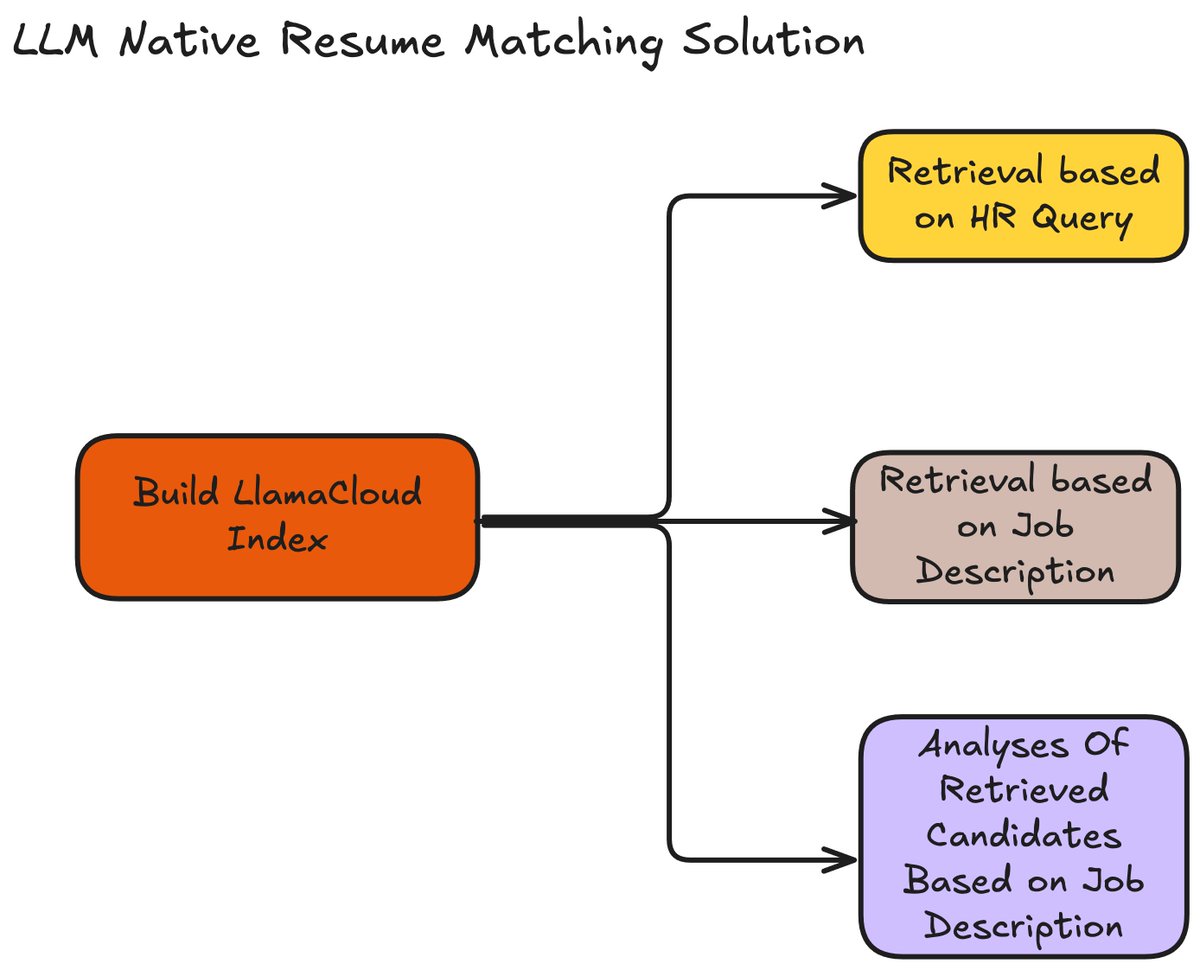

LLM-Native Resume Matching Solution with LlamaParse

Traditional resume screening often depends on manual filtering and matching criteria, making it a slow and tedious process for recruiters. Thanks to @ravithejads, we now have an LLM-native solution that simplifies and speeds up the entire process. This complete end-to-end flow is powered by LlamaParse, LlamaCloud, and…

-

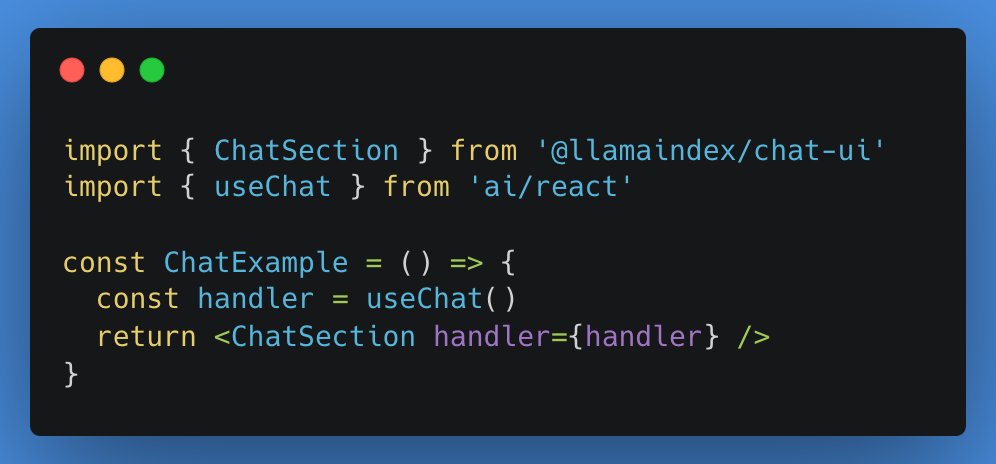

LlamaIndex Chat-UI

Build a chat UI for your LLM app in minutes with LlamaIndex chat-ui! @llamaindex/chat-ui is a React component library that provides ready-to-use UI elements for building chat interfaces in LLM (Large Language Model) applications. This package is designed to streamline the development of chat-based user interfaces…

-

Llama 4

Ahmad Al-Dahle shared a glimpse into Meta’s massive AI project: training Llama 4 on a cluster of more than 100,000 H100 GPUs. This scale is pushing AI boundaries and advancing both product capabilities and open-source contributions. In his words: “Great to visit one of our data centers where we’re training Llama 4 models on a cluster bigger than 100K H100’s!…”

-

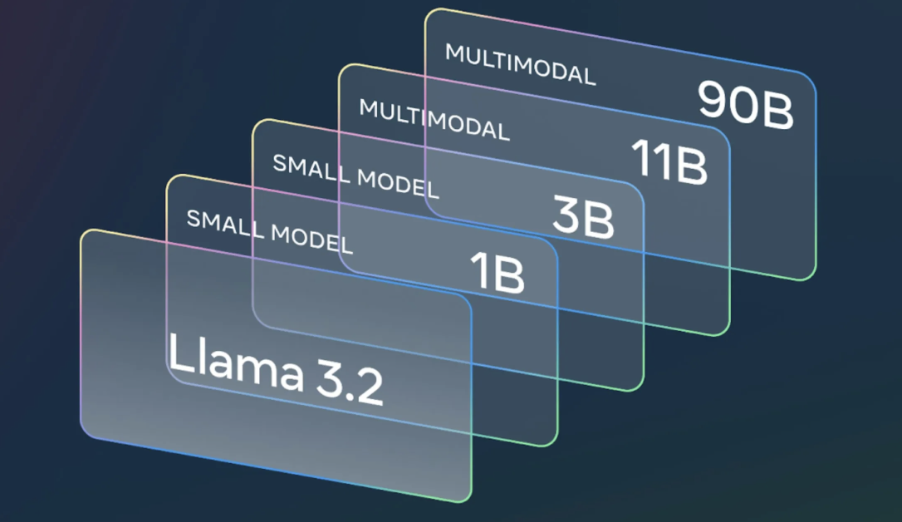

Llama 3.2

The two largest models in the Llama 3.2 collection, the 11B and 90B, are designed for image reasoning tasks such as document-level comprehension, including interpreting charts and graphs, image captioning, and visual grounding tasks like identifying objects in images based on natural language prompts. For instance, someone could ask which month in the previous year…

-

WordLlama

WordLlama is a utility for NLP and word embedding that repurposes components from large language models (LLMs) to generate efficient and compact word representations, similar to GloVe, Word2Vec, or FastText. It starts by extracting the token embedding codebook from a state-of-the-art LLM (e.g., LLaMA3 70B) and trains a small, context-free model within a general-purpose embedding…

-

How to Run Llama 3.1 Locally

Llama 3.1 is the latest large language model (LLM) developed by Meta AI, following in the footsteps of popular models like ChatGPT. This article will guide you through what Llama 3.1 is, why you might want to use it, how to run it locally on Windows, and some of its potential applications. Let’s dive in…

-

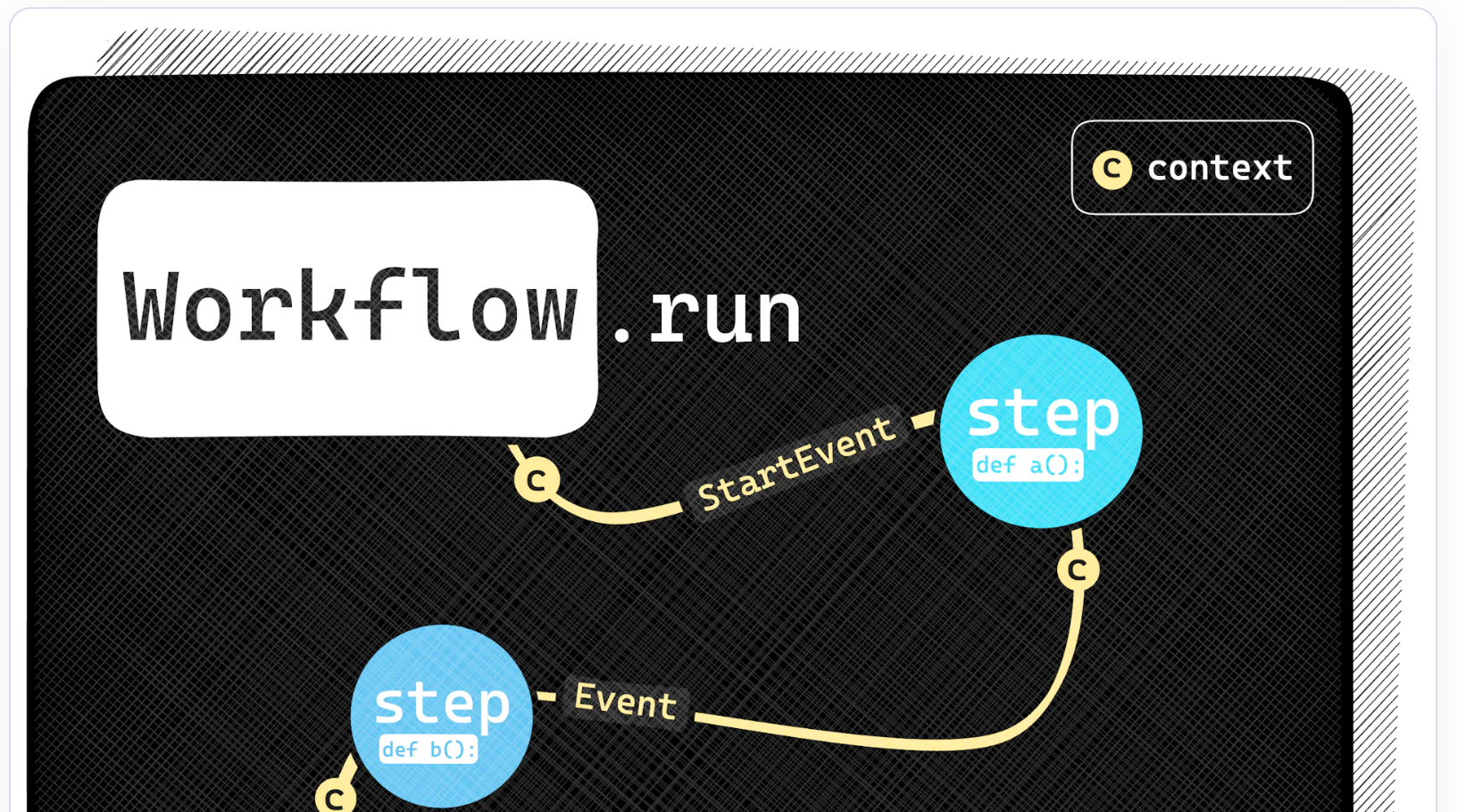

LlamaIndex Workflows

Today, the LlamaIndex team introduced Workflows, a new event-driven way of building multi-agent applications. By modeling each agent as a component that subscribes to and emits events, you can build complex orchestration in a readable, Pythonic manner that leverages batching, async, and streaming. Limitations of the Graph/Pipeline-Based Approach: The path to this innovation wasn’t immediate. Earlier…

-

LlamaCloud

Access control over data is a big requirement for any enterprise building LLM applications. LlamaCloud makes it easy to set this up. LlamaCloud lets you natively index ACLs through our data connectors. For instance, we directly load in the user/org-level permissions as metadata in SharePoint. It’s also easy to inject custom metadata through source…