Meta introduces Purple Llama, a project for open trust and safety tools. It aims to help developers use generative AI responsibly. The project aligns with best practices from Meta’s Responsible Use Guide. Meta’s first release includes CyberSec Eval, a cybersecurity benchmark for LLMs.

Meta also introduces Llama Guard, a safety classifier for filtering LLM inputs and outputs. Llama Guard is designed for easy deployment. Meta plans to collaborate with the AI Alliance and major tech companies. Partners include AMD, AWS, Google Cloud, and others. The goal is to enhance and share these tools with the open-source community.

Introduction

Generative AI is revolutionizing technology. It enables AI conversations, realistic images, and document summaries. Over 100 million Llama model downloads fuel this innovation. Open models are key to this progress.

Collaboration on safety builds trust among developers. This wave of innovation needs responsible AI research and contributions. No single organization can solve AI’s challenges alone. The goal is to level the playing field for trust and safety in AI development.

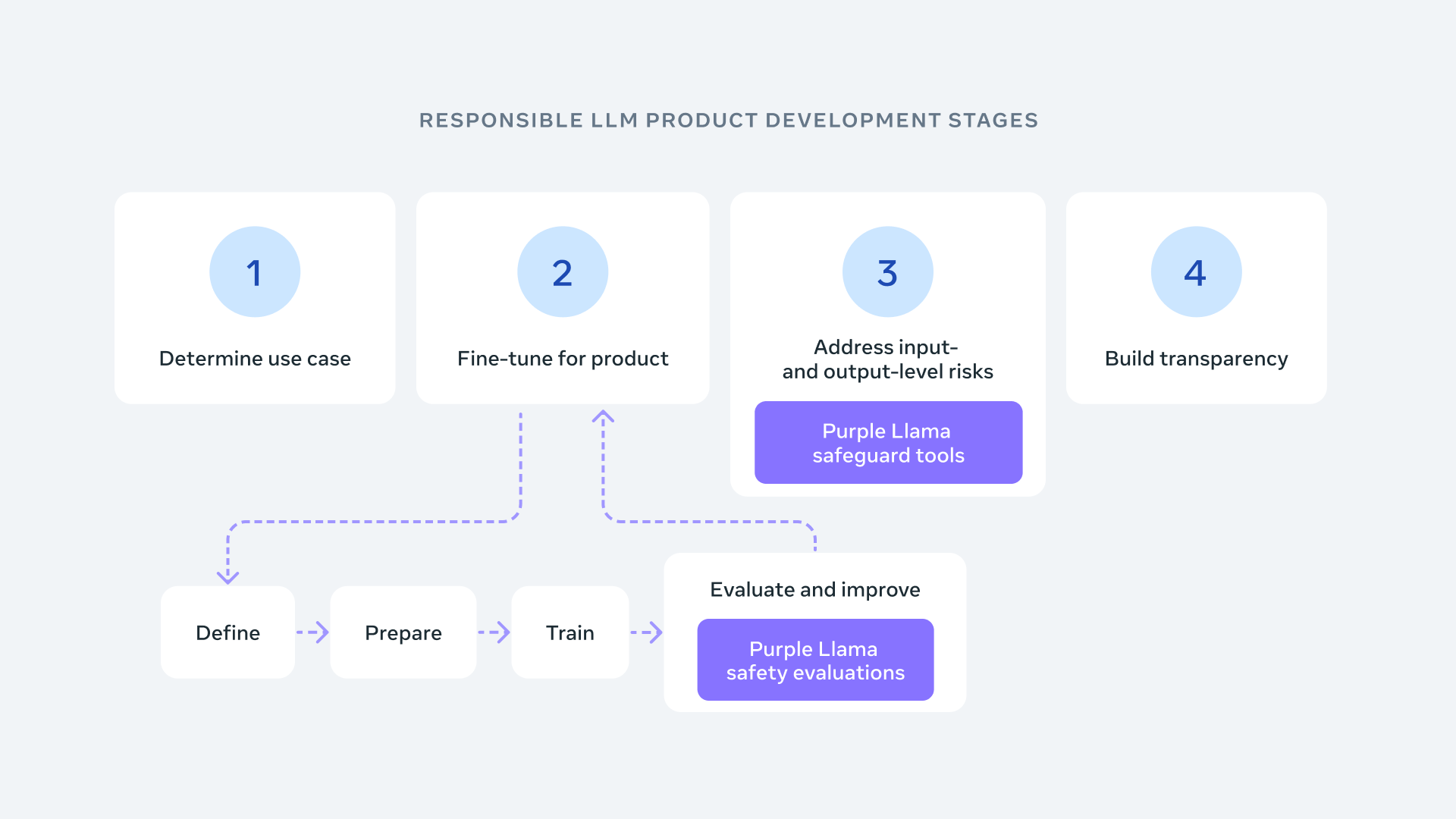

Meta announces Purple Llama, a comprehensive project. It will gather tools and evaluations for responsible AI building. The first release focuses on cybersecurity and input/output safety. More tools will follow soon.

Purple Llama’s components will have permissive licenses. This supports both research and commercial use. It’s a step towards community collaboration. The aim is to standardize trust and safety in generative AI development.

Input/Output Safeguards

In the Llama 2 Responsible Use Guide, Meta advises checking and filtering all LLM inputs and outputs against the content guidelines appropriate to each application.
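The recommendation to filter both inputs and outputs can be sketched as a thin wrapper around a model call. This is only an illustration under stated assumptions: `guarded_generate`, `keyword_check`, and `echo_model` are hypothetical names, and the keyword check is a toy stand-in for a real safety classifier such as Llama Guard, not its actual interface.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical refusal message returned whenever a check fails.
REFUSAL = "Sorry, I can't help with that."


@dataclass
class GuardedResult:
    text: str
    blocked_at: Optional[str]  # "input", "output", or None if nothing was blocked


def guarded_generate(
    prompt: str,
    generate: Callable[[str], str],
    is_safe: Callable[[str], bool],
) -> GuardedResult:
    """Check the prompt, call the model, then check the response."""
    if not is_safe(prompt):
        return GuardedResult(REFUSAL, "input")
    response = generate(prompt)
    if not is_safe(response):
        return GuardedResult(REFUSAL, "output")
    return GuardedResult(response, None)


def keyword_check(text: str) -> bool:
    """Toy stand-in for a safety classifier (assumption, not a real API)."""
    banned = ("build a bomb",)
    return not any(term in text.lower() for term in banned)


def echo_model(prompt: str) -> str:
    """Toy stand-in for an LLM call (assumption)."""
    return f"You asked: {prompt}"


result = guarded_generate("What is purple teaming?", echo_model, keyword_check)
print(result.text, result.blocked_at)
```

In a real application, `is_safe` would call a trained classifier and `generate` would call the deployed model. The wrapper keeps the two checks separate so the application can log whether a request was stopped on the way in or on the way out.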

To support this, Meta introduces Llama Guard. It’s an openly available model that performs competitively on common benchmarks. Llama Guard offers developers a pretrained model to help guard against potentially risky outputs.

Meta commits to open, transparent science and is sharing Llama Guard’s methodology and performance details. The model, trained on a mix of public datasets, detects common types of potentially risky content. It’s designed to serve a range of developer use cases.

The goal is to let developers tailor Llama Guard for specific uses. This will ease the adoption of best practices. It aims to enhance the open AI ecosystem.
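One way to picture this kind of tailoring is a per-application policy layered over a shared classifier. The sketch below rests on assumptions: the category labels, `toy_classifier`, and `make_filter` are hypothetical and do not reflect Llama Guard’s actual taxonomy or API. It only shows how two applications could apply different rules to the same classifier output.

```python
from typing import Callable, Iterable, Set

# Hypothetical classifier type: maps text to the set of risk categories it triggers.
Classifier = Callable[[str], Set[str]]


def make_filter(classify: Classifier, blocked_categories: Iterable[str]) -> Callable[[str], bool]:
    """Build an allow/deny function for one application's content policy."""
    blocked = frozenset(blocked_categories)

    def allow(text: str) -> bool:
        # Allow the text only if none of its categories are blocked for this app.
        return blocked.isdisjoint(classify(text))

    return allow


def toy_classifier(text: str) -> Set[str]:
    """Toy stand-in for a trained classifier; labels are illustrative only."""
    lowered = text.lower()
    labels = set()
    if "fight" in lowered:
        labels.add("violence")
    if "diagnos" in lowered:
        labels.add("medical_advice")
    return labels


# Two hypothetical applications with different content guidelines.
kids_app = make_filter(toy_classifier, {"violence", "medical_advice"})
clinic_app = make_filter(toy_classifier, {"violence"})

question = "How do I diagnose a cold?"
print(kids_app(question), clinic_app(question))
```

Keeping the policy separate from the classifier means each application can change its blocked categories without retraining or replacing the underlying model.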

Why Is Llama Purple?

Meta recognizes the need for both offensive and defensive strategies in generative AI. This approach, known as purple teaming, combines attack (red team) and defense (blue team) tactics. It’s a collaborative method to assess and mitigate risks in AI. Therefore, Meta’s commitment to Purple Llama is all-encompassing. This project will address the full spectrum of challenges in generative AI.

To explore Llama 2 further, visit the Llama website. It offers a quick start guide and answers to frequent questions. This resource is ideal for understanding Llama 2 better.

For guidance on using LLMs in product development, consult the best practices in Meta’s Responsible Use Guide. This knowledge is crucial for responsible and effective AI integration.

The upcoming NeurIPS conference will feature demos by Together.AI and Anyscale. These partners will showcase practical applications of Llama 2. Attending these demos can provide valuable hands-on learning.
