This cookbook shows you how to use LlamaParse to parse any document with the multimodal capabilities of Claude Sonnet 3.5.

LlamaParse allows you to plug in external, multimodal model vendors for parsing – we handle the error correction, validation, and scalability/reliability for you.

Setup

Download the data: both the full Llama 2 paper and a single page (page 33) of the PDF.

Swap in data/llama2-p33.pdf for data/llama2.pdf in the code blocks below if you want to save on parsing tokens.

An image of this page is shown below.

import nest_asyncio

nest_asyncio.apply()

!mkdir -p data
!wget "https://arxiv.org/pdf/2307.09288" -O data/llama2.pdf
!wget "https://www.dropbox.com/scl/fi/wpql661uu98vf6e2of2i0/llama2-p33.pdf?rlkey=64weubzkwpmf73y58vbmc8pyi&st=khgx5161&dl=1" -O data/llama2-p33.pdf

--2024-07-11 23:44:38-- https://arxiv.org/pdf/2307.09288 Resolving arxiv.org (arxiv.org)... 151.101.195.42, 151.101.131.42, 151.101.3.42, ... Connecting to arxiv.org (arxiv.org)|151.101.195.42|:443... connected. HTTP request sent, awaiting response... 200 OK Length: 13661300 (13M) [application/pdf] Saving to: ‘data/llama2.pdf’ data/llama2.pdf 100%[===================>] 13.03M 69.3MB/s in 0.2s 2024-07-11 23:44:38 (69.3 MB/s) - ‘data/llama2.pdf’ saved [13661300/13661300]

Initialize LlamaParse

Initialize LlamaParse in multimodal mode, and specify the vendor.

NOTE: You can optionally specify your own Anthropic API key. If you do, you will be charged our base LlamaParse price of 0.3c per page. If you don't, you will be charged 6c per page, as we will make the calls to Claude for you.
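To make the two per-page rates in the note concrete, here is a minimal sketch of the arithmetic. The function name and the 100-page document are hypothetical and chosen only for illustration. The estimate covers LlamaParse charges only, so with your own key it excludes whatever Anthropic bills that key directly.

```python
from decimal import Decimal

# Per-page LlamaParse rates quoted in the note above, in US cents.
RATE_OWN_KEY_CENTS = Decimal("0.3")  # you supply the Anthropic API key
RATE_MANAGED_CENTS = Decimal("6")    # LlamaParse makes the Claude calls for you


def estimate_cost_usd(num_pages: int, own_anthropic_key: bool) -> Decimal:
    """Estimate the LlamaParse charge in US dollars for num_pages pages."""
    if num_pages < 0:
        raise ValueError("num_pages must be non-negative")
    rate = RATE_OWN_KEY_CENTS if own_anthropic_key else RATE_MANAGED_CENTS
    # Convert cents to dollars.
    return (rate * num_pages) / Decimal(100)


# Hypothetical 100-page document, chosen so the arithmetic is easy to check.
with_key = estimate_cost_usd(100, own_anthropic_key=True)
without_key = estimate_cost_usd(100, own_anthropic_key=False)
print(f"own key: ${with_key}, managed: ${without_key}")
```

Under these rates the managed option costs 20 times as much per page, which is one reason to test on the single-page PDF first.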

from llama_index.core.schema import TextNode
from typing import List
import json


def get_text_nodes(json_list: List[dict]):
    text_nodes = []
    for idx, page in enumerate(json_list):
        text_node = TextNode(text=page["md"], metadata={"page": page["page"]})
        text_nodes.append(text_node)
    return text_nodes


def save_jsonl(data_list, filename):
    """Save a list of dictionaries as JSON Lines."""
    with open(filename, "w") as file:
        for item in data_list:
            json.dump(item, file)
            file.write("\n")


def load_jsonl(filename):
    """Load a list of dictionaries from JSON Lines."""
    data_list = []
    with open(filename, "r") as file:
        for line in file:
            data_list.append(json.loads(line))
    return data_list
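The JSON Lines helpers can be sanity-checked without calling the parser. The sketch below redefines them so that it runs on its own, and round-trips two made-up page records through a temporary file. The records are hypothetical stand-ins that only mimic the `page` and `md` keys read by `get_text_nodes`.

```python
import json
import os
import tempfile


def save_jsonl(data_list, filename):
    """Write one JSON object per line."""
    with open(filename, "w") as file:
        for item in data_list:
            json.dump(item, file)
            file.write("\n")


def load_jsonl(filename):
    """Read one JSON object per line back into a list."""
    with open(filename, "r") as file:
        return [json.loads(line) for line in file]


# Hypothetical page records; real ones come from the parser's JSON result.
pages = [
    {"page": 1, "md": "# Title\n\nFirst page text."},
    {"page": 2, "md": "| a | b |\n|---|---|\n| 1 | 2 |"},
]

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "pages.jsonl")
    save_jsonl(pages, path)
    restored = load_jsonl(path)

# Newlines inside the markdown are escaped by json.dump, so each record
# still occupies exactly one line in the file and survives the round trip.
print(restored == pages)
```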

from llama_parse import LlamaParse

parser = LlamaParse(
    result_type="markdown",
    use_vendor_multimodal_model=True,
    vendor_multimodal_model_name="anthropic-sonnet-3.5",
    # invalidate_cache=True
)

json_objs = parser.get_json_result("./data/llama2.pdf")
# json_objs = parser.get_json_result("./data/llama2-p33.pdf")
json_list = json_objs[0]["pages"]
docs = get_text_nodes(json_list)

Started parsing the file under job_id 811a29d8-8bcd-4100-bee3-6a83fbde1697

# Optional: Save
save_jsonl([d.dict() for d in docs], "docs.jsonl")

# Optional: Load

from llama_index.core import Document

docs_dicts = load_jsonl("docs.jsonl")

docs = [Document.parse_obj(d) for d in docs_dicts]

Setup GPT-4o baseline

For comparison, we will also parse the document using GPT-4o (3c per page).

from llama_parse import LlamaParse

parser_gpt4o = LlamaParse(
    result_type="markdown",
    use_vendor_multimodal_model=True,
    # Same keyword as in the Sonnet parser above.
    vendor_multimodal_model_name="openai-gpt4o",
    # invalidate_cache=True
)

json_objs_gpt4o = parser_gpt4o.get_json_result("./data/llama2.pdf")
# json_objs_gpt4o = parser_gpt4o.get_json_result("./data/llama2-p33.pdf")
json_list_gpt4o = json_objs_gpt4o[0]["pages"]
docs_gpt4o = get_text_nodes(json_list_gpt4o)

Started parsing the file under job_id 04c69ecc-e45d-4ad9-ba72-3045af38268b

# Optional: Save
save_jsonl([d.dict() for d in docs_gpt4o], "docs_gpt4o.jsonl")

# Optional: Load

from llama_index.core import Document

docs_gpt4o_dicts = load_jsonl("docs_gpt4o.jsonl")

docs_gpt4o = [Document.parse_obj(d) for d in docs_gpt4o_dicts]

View Results

Let’s visualize the results along with the original document page.

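Sonnet extracts complex visual elements such as graphs in far more detail than GPT-4o: it recovers the values plotted in Figure 21, whereas the GPT-4o output leaves the corresponding table cells empty.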

NOTE: If you're using llama2-p33, use docs[0] (and docs_gpt4o[0]) instead of index 32.
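A hard-coded index breaks as soon as you swap between the full paper and the single-page file. As a hedged alternative, the sketch below selects the page to display from the `page` value that `get_text_nodes` stores in the metadata. The helper name `pick_demo_page` and the sample records are hypothetical, and plain dictionaries stand in for the parsed pages so the example runs without any parsing call.

```python
from typing import Dict, List


def pick_demo_page(pages: List[Dict], page_number: int = 33) -> Dict:
    """Return the record to display for the demo.

    A single-page parse has only one record, so return it directly.
    Otherwise look the page up by its 1-based "page" field.
    """
    if len(pages) == 1:
        return pages[0]
    for record in pages:
        if record.get("page") == page_number:
            return record
    raise LookupError(f"no record with page == {page_number}")


# Hypothetical stand-ins for the parsed page records (40 pages is arbitrary).
full_paper = [{"page": n, "md": f"content of page {n}"} for n in range(1, 41)]
single_page = [{"page": 1, "md": "content of the single-page PDF"}]

print(pick_demo_page(full_paper)["page"])
print(pick_demo_page(single_page)["md"])
```

With the real nodes, the same lookup would read `node.metadata["page"]` instead of `record["page"]`.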

# using Sonnet-3.5
print(docs[32].get_content(metadata_mode="all"))

page: 33

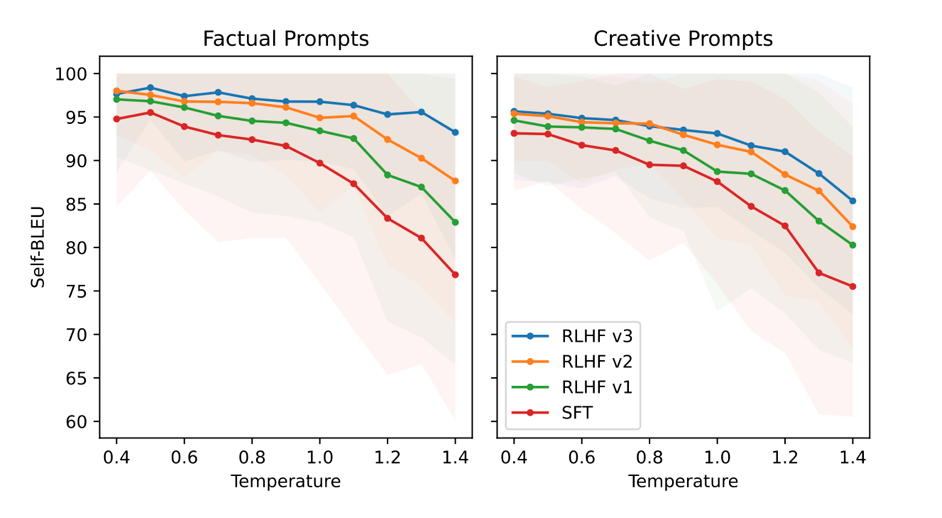

| Temperature | RLHF v3 | RLHF v2 | RLHF v1 | SFT |
|-------------|---------|---------|---------|-----|
| 0.4 | 98 | 98 | 97 | 95 |
| 0.6 | 97 | 97 | 95 | 94 |
| 0.8 | 97 | 96 | 94 | 92 |
| 1.0 | 96 | 94 | 92 | 89 |
| 1.2 | 95 | 92 | 88 | 83 |
| 1.4 | 94 | 89 | 83 | 77 |

Figure 21: RLHF learns to adapt the temperature with regard to the type of prompt. Lower Self-BLEU corresponds to more diversity: RLHF eliminates diversity in responses to factual prompts but retains more diversity when generating responses to creative prompts. We prompt each model with a diverse set of 10 creative and 10 factual instructions and sample 25 responses. This is repeated for the temperatures T ∈ {k/10 | k ∈ N : 1 ≤ k ≤ 15}. For each of the 25 responses we compute the Self-BLEU metric and report the mean and standard deviation against the temperature.

| Date: 01/01/2023 | Year: 2023 | Year: 852 |
|------------------|------------|-----------|
| Cutting knowledge: 01/01/1940 | | |
| Who won the 2nd World War? | Is the Earth flat or round? | Is the Earth flat or round? |
| I'm not sure what you're referring to, as my knowledge stopped in 1940. | The Earth is round. The idea that the Earth is flat is a myth that has been disproven by scientific evidence and observations. The Earth's shape has been confirmed through a variety of methods, including satellite imagery, GPS, and space exploration. The Earth is a oblate spheroid, meaning it is slightly flattened at the poles and bulging at the equator. | In 852, the Earth was believed to be flat by many people. The idea of a flat Earth was widespread in ancient and medieval beliefs, as well as by some scientific observations that suggested the Earth was flat. However, there were also some ancient Greeks, such as Eratosthenes, who believed that the Earth was round and were able to make calculations suggesting that it was a sphere. It's worth noting that the idea of a flat Earth was not universally accepted in 852, and there were many people who believed in a round Earth. Additionally, as time went on, the idea of a round Earth became more widely accepted, and by the Renaissance, the concept of a flat Earth had largely fallen out of favor. |

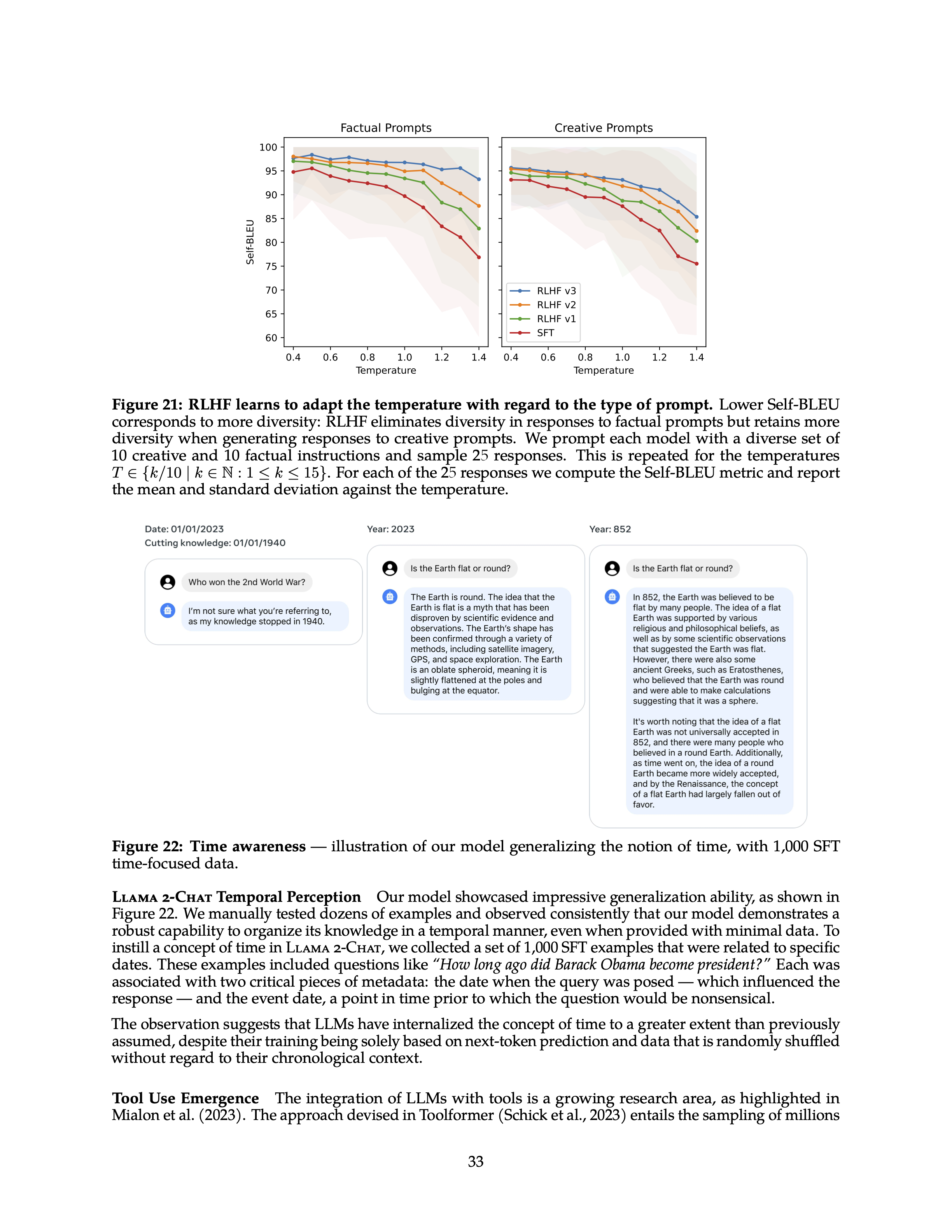

Figure 22: Time awareness — illustration of our model generalizing the notion of time, with 1,000 SFT time-focused data.

LLAMA 2-CHAT Temporal Perception Our model showcased impressive generalization ability, as shown in Figure 22. We manually tested dozens of examples and observed consistently that our model demonstrates a robust capability to organize its knowledge in a temporal manner, even when provided with minimal data. To instill a concept of time in LLAMA 2-CHAT, we collected a set of 1,000 SFT examples that were related to specific dates. These examples included questions like "How long ago did Barack Obama become president?" Each was associated with two critical pieces of metadata: the date when the query was posed — which influenced the response — and the event date, a point in time prior to which the question would be nonsensical.

The observation suggests that LLMs have internalized the concept of time to a greater extent than previously assumed, despite their training being solely based on next-token prediction and data that is randomly shuffled without regard to their chronological context.

Tool Use Emergence The integration of LLMs with tools is a growing research area, as highlighted in Mialon et al. (2023). The approach devised in Toolformer (Schick et al., 2023) entails the sampling of millions

33

# using GPT-4o
print(docs_gpt4o[32].get_content(metadata_mode="all"))

page: 33

# Figure 21: RLHF learns to adapt the temperature with regard to the type of prompt.

Lower Self-BLEU corresponds to more diversity: RLHF eliminates diversity in responses to factual prompts but retains more diversity when generating responses to creative prompts. We prompt each model with a diverse set of 10 creative and 10 factual instructions and sample 25 responses. This is repeated for the temperatures \( T \in \{k/10 | k \in \{1:1:15\}\). For each of the 25 responses we compute the Self-BLEU metric and report the mean and standard deviation against the temperature.

| Temperature | Factual Prompts | Creative Prompts |
|-------------|-----------------|------------------|
| 0.4 | | |
| 0.6 | | |
| 0.8 | | |
| 1.0 | | |
| 1.2 | | |
| 1.4 | | |

| Model | RLHF v3 | RLHF v2 | RLHF v1 | SFT |
|--------|---------|---------|---------|-----|
| Self-BLEU | | | | |

# Figure 22: Time awareness

Illustration of our model generalizing the notion of time, with 1,000 SFT time-focused data.

## Llama 2-Chat Temporal Perception

Our model showcased impressive generalization ability, as shown in Figure 22. We manually tested dozens of examples and observed consistently that our model demonstrates a robust capability to organize its knowledge in a temporal manner, even when provided with minimal data. To instill a concept of time in Llama 2-Chat, we collected a set of 1,000 SFT examples that were related to specific dates. These examples included questions like "How long ago did Barack Obama become president?" Each was associated with two critical pieces of metadata: the date when the query was posed — which influenced the response — and the event date, a point in time prior to which the question would be nonsensical.

The observation suggests that LLMs have internalized the concept of time to a greater extent than previously assumed, despite their training being solely based on next-token prediction and data that is randomly shuffled without regard to their chronological context.

## Tool Use Emergence

The integration of LLMs with tools is a growing research area, as highlighted in Mialon et al. (2023). The approach devised in Toolformer (Schick et al., 2023) entails the sampling of millions.

---

### Example Prompts and Responses

| Date: 01/01/2023 | Year: 2023 | Year: 852 |
|------------------|------------|-----------|
| **Who won the 2nd World War?** | **Is the Earth flat or round?** | **Is the Earth flat or round?** |
| I'm not sure what you're referring to, as my knowledge stopped in 1940. | The Earth is round. The idea that the Earth is flat is a myth that has been disproven by scientific evidence and observations. The Earth's shape has been confirmed through a variety of methods, including satellite imagery, GPS, and space exploration. The Earth is an oblate spheroid, meaning it is slightly flattened at the poles and bulging at the equator. | In 852, the Earth was believed to be flat by many people. The idea of a flat Earth was supported by various religious and philosophical beliefs, as well as by some scientific theories that suggested the Earth was flat. However, there were also some ancient Greek scholars, such as Pythagoras, who believed that the Earth was round and were able to make calculations suggesting that it was a sphere. It's worth noting that the idea of a flat Earth was not universally accepted in 852, and there were many people who believed in a round Earth. Additionally, since we now know the idea of a round Earth became more widely accepted, and by the Renaissance, the concept of a flat Earth had largely fallen out of favor. |

---

Page 33

Setup RAG Pipeline

Better parsing translates into better RAG answers. Let's set up a RAG pipeline over each parsed version of the paper.

We'll use GPT-4o from OpenAI for the actual text synthesis step in both pipelines.

from llama_index.core import Settings
from llama_index.llms.openai import OpenAI
from llama_index.embeddings.openai import OpenAIEmbedding

Settings.llm = OpenAI(model="gpt-4o")
Settings.embed_model = OpenAIEmbedding(model="text-embedding-3-large")

# from llama_index.core import SummaryIndex
from llama_index.core import VectorStoreIndex
from llama_index.llms.openai import OpenAI

index = VectorStoreIndex(docs)
query_engine = index.as_query_engine(similarity_top_k=5)

index_gpt4o = VectorStoreIndex(docs_gpt4o)
query_engine_gpt4o = index_gpt4o.as_query_engine(similarity_top_k=5)
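The `similarity_top_k=5` argument asks each query engine to retrieve the five nodes most similar to the query before synthesis. As a toy illustration of that general idea (not LlamaIndex's actual implementation), the sketch below ranks hand-made two-dimensional vectors by cosine similarity and keeps the top k. The vectors and function names are invented for the example, whereas real embeddings come from the configured embedding model.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(
    query_vec: Sequence[float], doc_vecs: List[Sequence[float]], k: int
) -> List[Tuple[int, float]]:
    """Return (index, score) for the k vectors most similar to the query."""
    scored = [(i, cosine_similarity(query_vec, v)) for i, v in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Invented toy "embeddings"; index 0 points almost the same way as the query.
doc_vecs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
query_vec = [1.0, 0.1]

for idx, score in top_k(query_vec, doc_vecs, k=2):
    print(idx, round(score, 3))
```

A larger k raises the chance that the page containing the figure is retrieved, at the cost of passing more text to the synthesis model.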

query = "Tell me more about all the values for each line in the 'RLHF learns to adapt the temperature with regard to the type of prompt' graph "

response = query_engine.query(query)
response_gpt4o = query_engine_gpt4o.query(query)

print(response)

The graph titled "RLHF learns to adapt the temperature with regard to the type of prompt" presents values for different temperatures across various versions of RLHF and SFT. The values are as follows:

- **Temperature 0.4:**
  - RLHF v3: 98
  - RLHF v2: 98
  - RLHF v1: 97
  - SFT: 95
- **Temperature 0.6:**
  - RLHF v3: 97
  - RLHF v2: 97
  - RLHF v1: 95
  - SFT: 94
- **Temperature 0.8:**
  - RLHF v3: 97
  - RLHF v2: 96
  - RLHF v1: 94
  - SFT: 92
- **Temperature 1.0:**
  - RLHF v3: 96
  - RLHF v2: 94
  - RLHF v1: 92
  - SFT: 89
- **Temperature 1.2:**
  - RLHF v3: 95
  - RLHF v2: 92
  - RLHF v1: 88
  - SFT: 83
- **Temperature 1.4:**
  - RLHF v3: 94
  - RLHF v2: 89
  - RLHF v1: 83
  - SFT: 77

These values indicate how the Self-BLEU metric, which measures diversity, changes with temperature for different versions of RLHF and SFT. Lower Self-BLEU corresponds to more diversity in the responses.

print(response.source_nodes[4].get_content())

| Temperature | RLHF v3 | RLHF v2 | RLHF v1 | SFT |
|-------------|---------|---------|---------|-----|
| 0.4 | 98 | 98 | 97 | 95 |
| 0.6 | 97 | 97 | 95 | 94 |
| 0.8 | 97 | 96 | 94 | 92 |
| 1.0 | 96 | 94 | 92 | 89 |
| 1.2 | 95 | 92 | 88 | 83 |
| 1.4 | 94 | 89 | 83 | 77 |

Figure 21: RLHF learns to adapt the temperature with regard to the type of prompt. Lower Self-BLEU corresponds to more diversity: RLHF eliminates diversity in responses to factual prompts but retains more diversity when generating responses to creative prompts. We prompt each model with a diverse set of 10 creative and 10 factual instructions and sample 25 responses. This is repeated for the temperatures T ∈ {k/10 | k ∈ N : 1 ≤ k ≤ 15}. For each of the 25 responses we compute the Self-BLEU metric and report the mean and standard deviation against the temperature.

| Date: 01/01/2023 | Year: 2023 | Year: 852 |
|------------------|------------|-----------|
| Cutting knowledge: 01/01/1940 | | |
| Who won the 2nd World War? | Is the Earth flat or round? | Is the Earth flat or round? |
| I'm not sure what you're referring to, as my knowledge stopped in 1940. | The Earth is round. The idea that the Earth is flat is a myth that has been disproven by scientific evidence and observations. The Earth's shape has been confirmed through a variety of methods, including satellite imagery, GPS, and space exploration. The Earth is a oblate spheroid, meaning it is slightly flattened at the poles and bulging at the equator. | In 852, the Earth was believed to be flat by many people. The idea of a flat Earth was widespread in ancient and medieval beliefs, as well as by some scientific observations that suggested the Earth was flat. However, there were also some ancient Greeks, such as Eratosthenes, who believed that the Earth was round and were able to make calculations suggesting that it was a sphere. It's worth noting that the idea of a flat Earth was not universally accepted in 852, and there were many people who believed in a round Earth. Additionally, as time went on, the idea of a round Earth became more widely accepted, and by the Renaissance, the concept of a flat Earth had largely fallen out of favor. |

Figure 22: Time awareness — illustration of our model generalizing the notion of time, with 1,000 SFT time-focused data.

LLAMA 2-CHAT Temporal Perception Our model showcased impressive generalization ability, as shown in Figure 22. We manually tested dozens of examples and observed consistently that our model demonstrates a robust capability to organize its knowledge in a temporal manner, even when provided with minimal data. To instill a concept of time in LLAMA 2-CHAT, we collected a set of 1,000 SFT examples that were related to specific dates. These examples included questions like "How long ago did Barack Obama become president?" Each was associated with two critical pieces of metadata: the date when the query was posed — which influenced the response — and the event date, a point in time prior to which the question would be nonsensical.

The observation suggests that LLMs have internalized the concept of time to a greater extent than previously assumed, despite their training being solely based on next-token prediction and data that is randomly shuffled without regard to their chronological context.

Tool Use Emergence The integration of LLMs with tools is a growing research area, as highlighted in Mialon et al. (2023). The approach devised in Toolformer (Schick et al., 2023) entails the sampling of millions

33
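The index `4` used above happens to be where the page-33 node landed among the five retrieved nodes in this run, and another run or another query may rank it differently. A more robust approach, sketched below, scans the retrieved nodes for a given page number. It assumes each retrieved item exposes `.node.metadata` and `.score`, as LlamaIndex's scored nodes are understood to do. The `SimpleNamespace` objects and their page numbers and scores are invented stand-ins so the example runs on its own.

```python
from types import SimpleNamespace
from typing import List, Tuple


def sources_for_page(source_nodes, page_number: int) -> List[Tuple[int, float]]:
    """Return (rank, score) for every retrieved node from the given page."""
    matches = []
    for rank, item in enumerate(source_nodes):
        if item.node.metadata.get("page") == page_number:
            matches.append((rank, item.score))
    return matches


# Invented stand-ins mimicking `.node.metadata` and `.score`; the page
# numbers and scores below are not taken from a real retrieval run.
fake_source_nodes = [
    SimpleNamespace(node=SimpleNamespace(metadata={"page": p}), score=s)
    for p, s in [(12, 0.61), (47, 0.58), (9, 0.55), (60, 0.52), (33, 0.5)]
]

print(sources_for_page(fake_source_nodes, 33))
```

With a real response, the call would be `sources_for_page(response.source_nodes, 33)`, and an empty list would indicate that the page was not retrieved at all.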

print(response_gpt4o)

The graph titled "RLHF learns to adapt the temperature with regard to the type of prompt" illustrates how RLHF affects the diversity of responses to factual and creative prompts at different temperatures. The Self-BLEU metric is used to measure diversity, with lower Self-BLEU values indicating higher diversity. The graph includes the following values for each temperature:

- **Temperature 0.4**: Values for factual and creative prompts are not provided.
- **Temperature 0.6**: Values for factual and creative prompts are not provided.
- **Temperature 0.8**: Values for factual and creative prompts are not provided.
- **Temperature 1.0**: Values for factual and creative prompts are not provided.
- **Temperature 1.2**: Values for factual and creative prompts are not provided.
- **Temperature 1.4**: Values for factual and creative prompts are not provided.

The graph also compares different versions of the model (RLHF v1, RLHF v2, RLHF v3, and SFT) using the Self-BLEU metric, but specific values for each version are not provided. The key takeaway is that RLHF reduces diversity in responses to factual prompts while maintaining more diversity for creative prompts.

print(response_gpt4o.source_nodes[4].get_content())

# Figure 21: RLHF learns to adapt the temperature with regard to the type of prompt.

Lower Self-BLEU corresponds to more diversity: RLHF eliminates diversity in responses to factual prompts but retains more diversity when generating responses to creative prompts. We prompt each model with a diverse set of 10 creative and 10 factual instructions and sample 25 responses. This is repeated for the temperatures \( T \in \{k/10 | k \in \{1:1:15\}\). For each of the 25 responses we compute the Self-BLEU metric and report the mean and standard deviation against the temperature.

| Temperature | Factual Prompts | Creative Prompts |
|-------------|-----------------|------------------|
| 0.4 | | |
| 0.6 | | |
| 0.8 | | |
| 1.0 | | |
| 1.2 | | |
| 1.4 | | |

| Model | RLHF v3 | RLHF v2 | RLHF v1 | SFT |
|--------|---------|---------|---------|-----|
| Self-BLEU | | | | |

# Figure 22: Time awareness

Illustration of our model generalizing the notion of time, with 1,000 SFT time-focused data.

## Llama 2-Chat Temporal Perception

Our model showcased impressive generalization ability, as shown in Figure 22. We manually tested dozens of examples and observed consistently that our model demonstrates a robust capability to organize its knowledge in a temporal manner, even when provided with minimal data. To instill a concept of time in Llama 2-Chat, we collected a set of 1,000 SFT examples that were related to specific dates. These examples included questions like "How long ago did Barack Obama become president?" Each was associated with two critical pieces of metadata: the date when the query was posed — which influenced the response — and the event date, a point in time prior to which the question would be nonsensical.

The observation suggests that LLMs have internalized the concept of time to a greater extent than previously assumed, despite their training being solely based on next-token prediction and data that is randomly shuffled without regard to their chronological context.

## Tool Use Emergence

The integration of LLMs with tools is a growing research area, as highlighted in Mialon et al. (2023). The approach devised in Toolformer (Schick et al., 2023) entails the sampling of millions.

---

### Example Prompts and Responses

| Date: 01/01/2023 | Year: 2023 | Year: 852 |
|------------------|------------|-----------|
| **Who won the 2nd World War?** | **Is the Earth flat or round?** | **Is the Earth flat or round?** |
| I'm not sure what you're referring to, as my knowledge stopped in 1940. | The Earth is round. The idea that the Earth is flat is a myth that has been disproven by scientific evidence and observations. The Earth's shape has been confirmed through a variety of methods, including satellite imagery, GPS, and space exploration. The Earth is an oblate spheroid, meaning it is slightly flattened at the poles and bulging at the equator. | In 852, the Earth was believed to be flat by many people. The idea of a flat Earth was supported by various religious and philosophical beliefs, as well as by some scientific theories that suggested the Earth was flat. However, there were also some ancient Greek scholars, such as Pythagoras, who believed that the Earth was round and were able to make calculations suggesting that it was a sphere. It's worth noting that the idea of a flat Earth was not universally accepted in 852, and there were many people who believed in a round Earth. Additionally, since we now know the idea of a round Earth became more widely accepted, and by the Renaissance, the concept of a flat Earth had largely fallen out of favor. |

---
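The empty table cells in the GPT-4o parse are what leave its query engine with no values to report. One crude way to flag such pages automatically is to count empty cells in the parsed markdown tables, as in the sketch below. The function name is hypothetical, the two sample strings are shortened excerpts of the tables shown above, and the check is a heuristic rather than a measure of parsing quality, since a faithful parse can legitimately contain blank cells.

```python
def count_empty_table_cells(markdown: str) -> int:
    """Count empty cells in pipe-delimited markdown table rows."""
    empty = 0
    for line in markdown.splitlines():
        stripped = line.strip()
        # Only consider rows that start and end with a pipe.
        if len(stripped) < 2 or not (stripped.startswith("|") and stripped.endswith("|")):
            continue
        cells = [cell.strip() for cell in stripped[1:-1].split("|")]
        # Skip separator rows such as |---|:--:|.
        if all(cell and set(cell) <= set("-:") for cell in cells):
            continue
        empty += sum(1 for cell in cells if cell == "")
    return empty


# Shortened excerpts of the two parses of the same figure.
sonnet_md = "| Temperature | RLHF v3 |\n|---|---|\n| 0.4 | 98 |\n| 0.6 | 97 |"
gpt4o_md = (
    "| Temperature | Factual Prompts | Creative Prompts |\n"
    "|---|---|---|\n"
    "| 0.4 | | |\n"
    "| 0.6 | | |"
)

print(count_empty_table_cells(sonnet_md), count_empty_table_cells(gpt4o_md))
```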