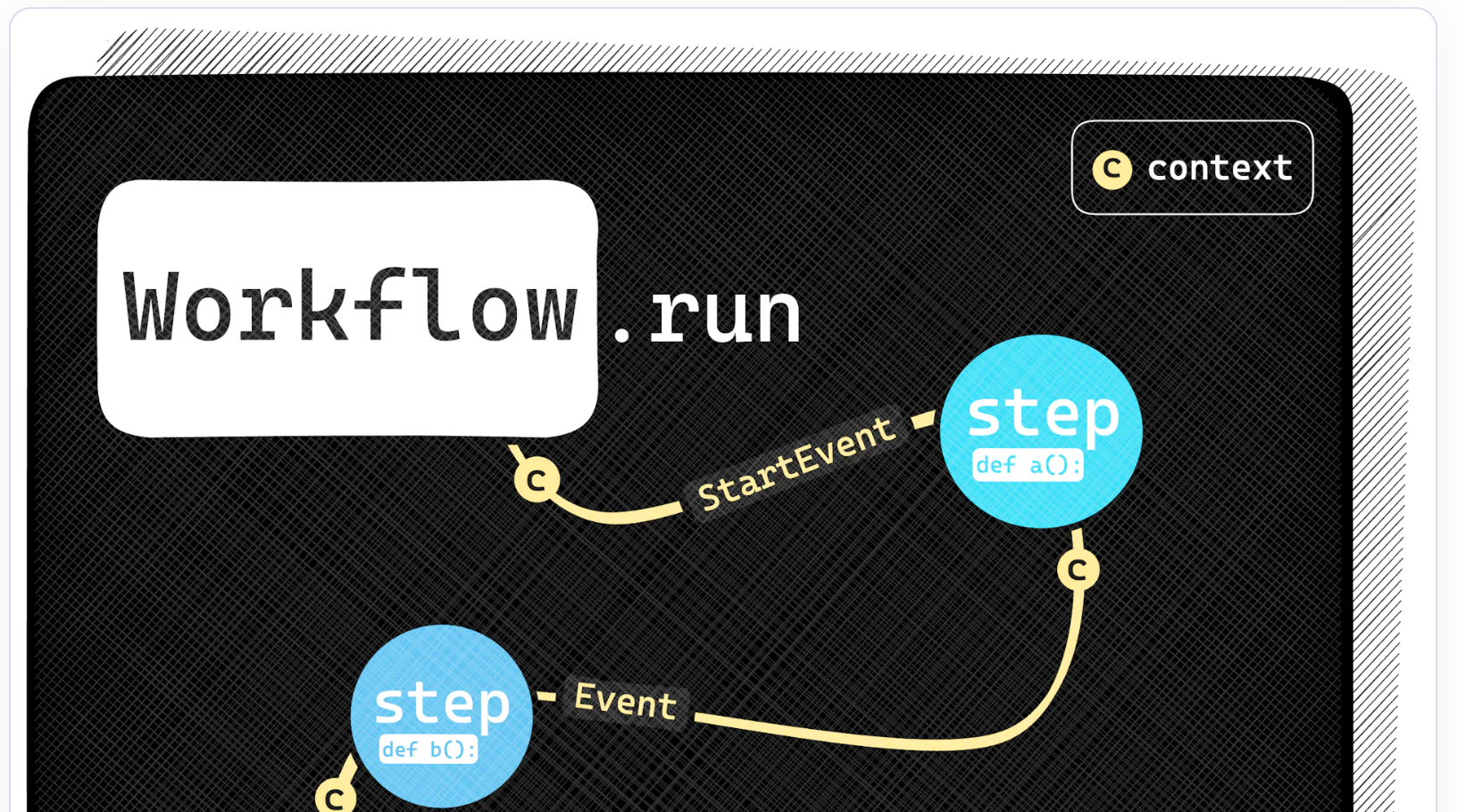

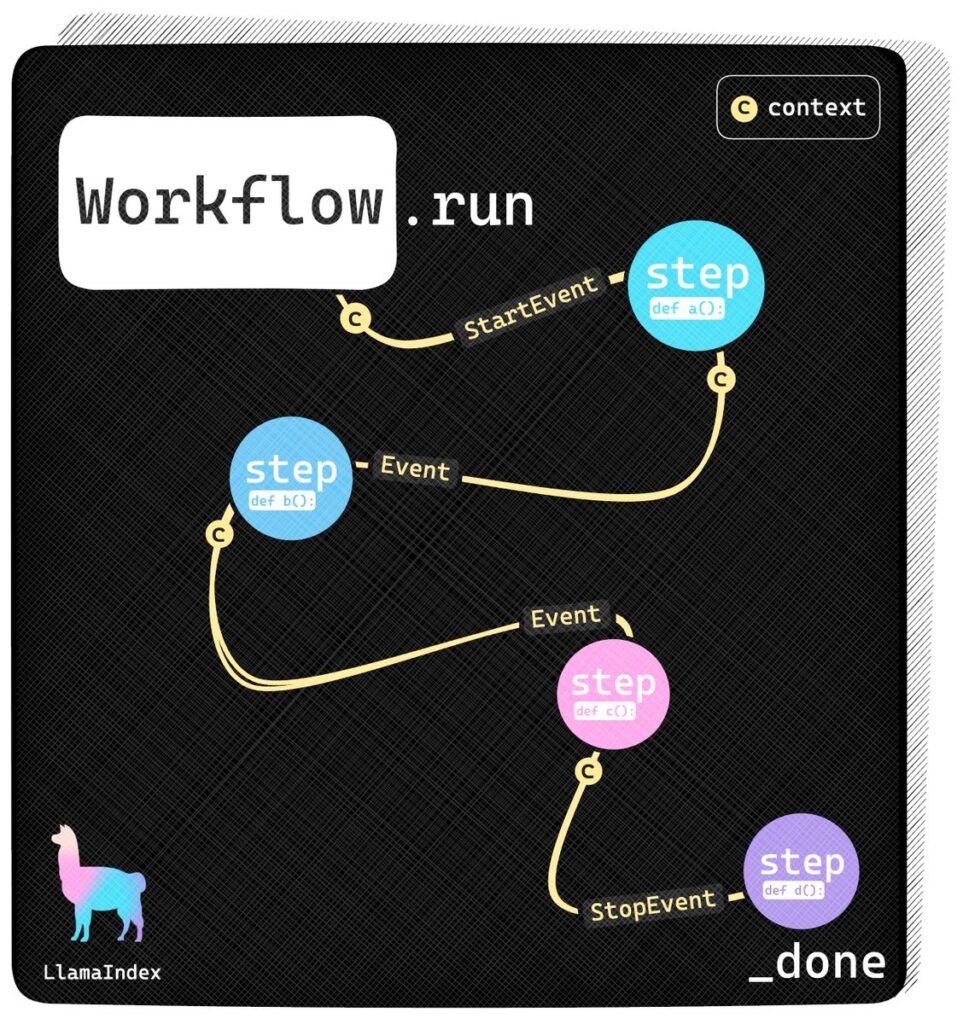

Today, the LlamaIndex team introduced Workflows, a new event-driven way of building multi-agent applications. By modeling each agent as a component that subscribes to and emits events, you can build complex orchestration in a readable, Pythonic manner that leverages batching, async, and streaming.
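
To make the subscribe-and-emit idea concrete, here is a minimal, dependency-free sketch in plain Python. It is not the LlamaIndex Workflows API: `MiniWorkflow`, `subscribe`, `researcher`, `writer`, and the event classes are hypothetical names chosen for illustration. Each "agent" is an async function registered for one event type, and the runner keeps routing whatever event the previous agent returned until a `StopEvent` appears.

```python
import asyncio
from dataclasses import dataclass
from typing import Any, Awaitable, Callable, Dict, Type


@dataclass
class Event:
    """Base class for all events exchanged between agents (hypothetical)."""


@dataclass
class StartEvent(Event):
    query: str


@dataclass
class ResearchDone(Event):
    notes: str


@dataclass
class StopEvent(Event):
    result: str


# An agent is an async function that consumes one event and emits the next.
Handler = Callable[[Any], Awaitable[Event]]


class MiniWorkflow:
    """Toy router: each event type has exactly one subscribed agent."""

    def __init__(self) -> None:
        self.handlers: Dict[Type[Event], Handler] = {}

    def subscribe(self, event_type: Type[Event]) -> Callable[[Handler], Handler]:
        """Decorator that registers an agent for `event_type`."""
        def register(fn: Handler) -> Handler:
            self.handlers[event_type] = fn
            return fn
        return register

    async def run(self, event: Event) -> str:
        # Route each emitted event to its subscriber until a StopEvent appears.
        while not isinstance(event, StopEvent):
            handler = self.handlers[type(event)]
            event = await handler(event)
        return event.result


wf = MiniWorkflow()


@wf.subscribe(StartEvent)
async def researcher(ev: StartEvent) -> ResearchDone:
    # A real agent would call an LLM or a retriever here.
    return ResearchDone(notes=f"notes about {ev.query}")


@wf.subscribe(ResearchDone)
async def writer(ev: ResearchDone) -> StopEvent:
    return StopEvent(result=f"report based on {ev.notes}")


if __name__ == "__main__":
    print(asyncio.run(wf.run(StartEvent(query="event-driven agents"))))
```

Adding a new agent means defining a new event type and subscribing a handler to it; no existing wiring has to change.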

Limitations of the Graph/Pipeline-Based Approach

We didn't arrive at this design right away. Earlier this year, we launched our Query Pipeline abstraction, which approached LLM orchestration through directed acyclic graphs (DAGs). As we worked to improve it, however, several issues became apparent:

- Orchestration logic was baked into the edges of the graph, which made the code cumbersome and hard to read.

- Once loops were added, the number of edge cases grew too large, making the logic hard to reason about.

- When things went wrong, pipelines were difficult to debug.

Transitioning to Event-Driven Architecture

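In an event-driven architecture, components subscribe to events, and you write ordinary Python code to handle them; a handler can in turn emit new events for other components to pick up. Because the framework is fully asynchronous, independent handlers can run concurrently, which keeps complex orchestration simple to express.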
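
The sketch below illustrates subscription and async concurrency with a toy publish/subscribe bus built only on Python's standard `asyncio`. `EventBus`, the `"document_loaded"` topic, and both handlers are hypothetical, and topic strings stand in for typed events. Two handlers subscribe to the same event, and publishing it runs them concurrently with `asyncio.gather`, so the two simulated 0.1 s calls should finish in roughly 0.1 s total rather than 0.2 s.

```python
import asyncio
from collections import defaultdict
from typing import Awaitable, Callable, DefaultDict, List

# A handler receives the event payload and returns some result.
Handler = Callable[[dict], Awaitable[str]]


class EventBus:
    """Toy publish/subscribe bus; topic strings stand in for event types."""

    def __init__(self) -> None:
        self.subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self.subscribers[topic].append(handler)

    async def publish(self, topic: str, payload: dict) -> List[str]:
        # All subscribers to the topic run concurrently. Results come back in
        # subscription order because asyncio.gather preserves argument order.
        handlers = self.subscribers[topic]
        return list(await asyncio.gather(*(h(payload) for h in handlers)))


async def summarize(payload: dict) -> str:
    await asyncio.sleep(0.1)  # simulate a slow LLM call
    return f"summary of {payload['doc']}"


async def extract_keywords(payload: dict) -> str:
    await asyncio.sleep(0.1)  # simulate another slow call
    return f"keywords of {payload['doc']}"


async def main() -> List[str]:
    bus = EventBus()
    bus.subscribe("document_loaded", summarize)
    bus.subscribe("document_loaded", extract_keywords)
    return await bus.publish("document_loaded", {"doc": "report.pdf"})


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Publishing to a topic with no subscribers simply returns an empty list, so producers never need to know who, if anyone, is listening.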

Capabilities of Workflows

With Workflows, you can orchestrate anything from advanced Retrieval-Augmented Generation (RAG) pipelines, with steps such as query rewriting, reranking, and CRAG (Corrective RAG), to multi-agent systems.
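
As an illustration under heavy assumptions, the sketch below expresses a CRAG-style correction loop as event handlers: grade the retrieved passages, rerank the relevant ones, and if none look relevant, rewrite the query and retrieve again. The corpus, the word-overlap `score` function, the `SYNONYMS` rewrite table, and the event classes are hypothetical stand-ins for a real retriever, an LLM grader, and an LLM query rewriter. The published CRAG method can also fall back to web search, which is omitted here.

```python
import asyncio
from dataclasses import dataclass
from typing import List, Union

# Hypothetical toy corpus standing in for a vector index.
CORPUS = [
    "Workflows are event-driven and built from async steps.",
    "Query pipelines model orchestration as a DAG.",
    "Rerankers reorder retrieved passages by relevance.",
]

# Hypothetical rewrite table standing in for an LLM query rewriter.
SYNONYMS = {"agents": "workflows", "graph": "dag"}
MAX_REWRITES = 2


@dataclass
class QueryEvent:
    query: str
    attempt: int = 0


@dataclass
class RetrievedEvent:
    query: str
    attempt: int
    passages: List[str]


@dataclass
class StopEvent:
    answer: str


def score(query: str, passage: str) -> int:
    """Toy relevance score: number of shared lowercase words."""
    q = set(query.lower().split())
    p = set(passage.lower().replace(".", "").split())
    return len(q & p)


async def retrieve(ev: QueryEvent) -> RetrievedEvent:
    # Stand-in retriever: returns every passage as a candidate.
    return RetrievedEvent(ev.query, ev.attempt, list(CORPUS))


async def grade_and_rerank(ev: RetrievedEvent) -> Union[QueryEvent, StopEvent]:
    # Grade: keep only passages sharing at least one word with the query.
    relevant = [p for p in ev.passages if score(ev.query, p) > 0]
    if relevant:
        # Rerank: highest overlap first (the sort is stable for ties).
        relevant.sort(key=lambda p: score(ev.query, p), reverse=True)
        return StopEvent(answer=relevant[0])
    if ev.attempt >= MAX_REWRITES:
        return StopEvent(answer="no relevant context found")
    # Correction: rewrite the query and loop back to retrieval.
    rewritten = " ".join(SYNONYMS.get(w, w) for w in ev.query.lower().split())
    return QueryEvent(rewritten, ev.attempt + 1)


async def run(query: str) -> str:
    event: Union[QueryEvent, RetrievedEvent, StopEvent] = QueryEvent(query)
    # Dispatch on event type until a StopEvent is emitted.
    while not isinstance(event, StopEvent):
        if isinstance(event, QueryEvent):
            event = await retrieve(event)
        else:
            event = await grade_and_rerank(event)
    return event.answer


if __name__ == "__main__":
    print(asyncio.run(run("how do agents work")))
```

Calling `run("how do agents work")` finds nothing relevant on the first pass, rewrites the query to `"how do workflows work"`, and returns the first corpus passage on the second pass. The retry is just another `QueryEvent` flowing back to `retrieve`, rather than a back-edge bolted onto a DAG.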

What’s Next for Workflows

Workflows is still in beta, but we're working hard to make it the default way to orchestrate within LlamaIndex. The next step is a native integration with llama-agents, which will let you turn your multi-agent systems into services. Stay tuned for more updates.

For more information, check out the following resources: