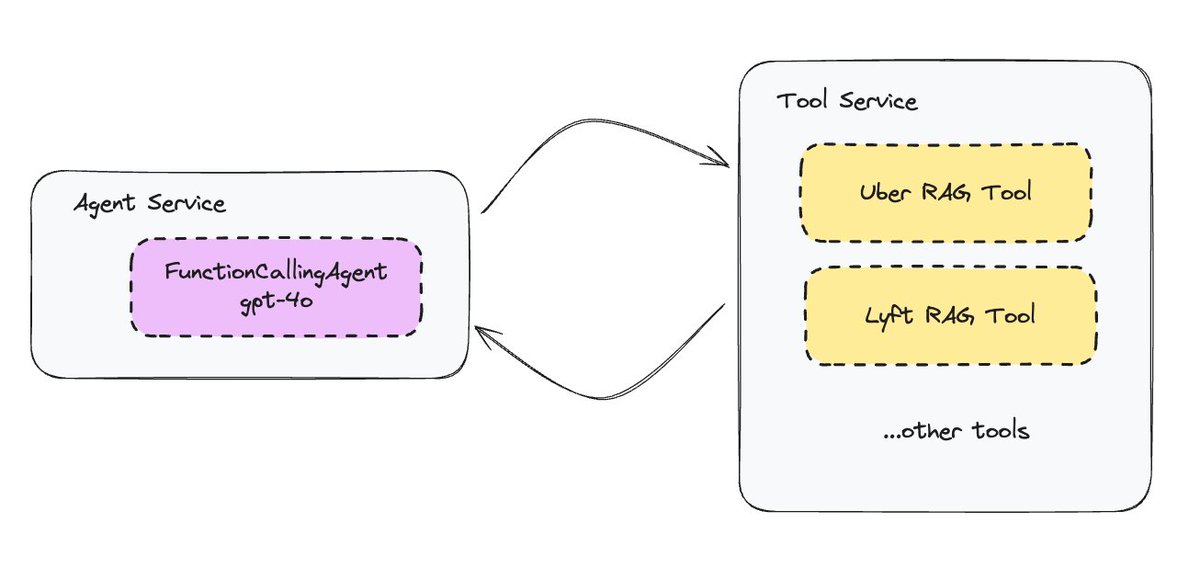

Let’s take a common pattern, agents interacting with tools, and turn both sides into microservices.

llama-agents lets you set up both an agent service, which takes in user input and reasons about the next task to solve, and a tool service, which can execute any variety of tools and exposes a high-level API. The two run as standalone servers and communicate with each other through a message queue.
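To make the pattern concrete, here is a minimal sketch of an agent service and a tool service exchanging messages through queues. It uses only Python's standard `asyncio` module and is not the llama-agents API: `TOOLS`, `tool_service`, `agent_service`, and `run_demo` are hypothetical names invented for illustration, and the "reasoning" step is a hard-coded rule standing in for an LLM.

```python
import asyncio

# Hypothetical tool registry: plain functions the tool service can execute.
TOOLS = {
    "add": lambda a, b: a + b,
    "upper": lambda text: text.upper(),
}


async def tool_service(requests: asyncio.Queue, results: asyncio.Queue) -> None:
    """Consume tool-call messages and publish one result message per call."""
    while True:
        msg = await requests.get()
        if msg is None:  # sentinel value: shut the service down
            break
        output = TOOLS[msg["tool"]](*msg["args"])
        await results.put({"id": msg["id"], "output": output})


async def agent_service(
    user_input: str, requests: asyncio.Queue, results: asyncio.Queue
) -> str:
    """Stand-in for an agent: picks a tool with a fixed rule, not an LLM."""
    # A real agent would ask an LLM which tool to call; here the choice is fixed.
    await requests.put({"id": 1, "tool": "upper", "args": [user_input]})
    reply = await results.get()
    return f"tool said: {reply['output']}"


async def run_demo(user_input: str) -> str:
    """Start the tool service, run one agent turn, then stop the tool service."""
    requests: asyncio.Queue = asyncio.Queue()
    results: asyncio.Queue = asyncio.Queue()
    worker = asyncio.create_task(tool_service(requests, results))
    answer = await agent_service(user_input, requests, results)
    await requests.put(None)  # ask the tool service to exit
    await worker
    return answer
```

Calling `asyncio.run(run_demo("hello"))` runs one round trip. The agent never calls a tool function directly; it only publishes a request and waits for a reply, which is what allows the two sides to live in separate processes or servers.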

What Is Llama-Agents?

Llama-agents is an async-first framework designed for building, iterating, and deploying multi-agent systems. It supports multi-agent communication, distributed tool execution, human-in-the-loop processes, and more.

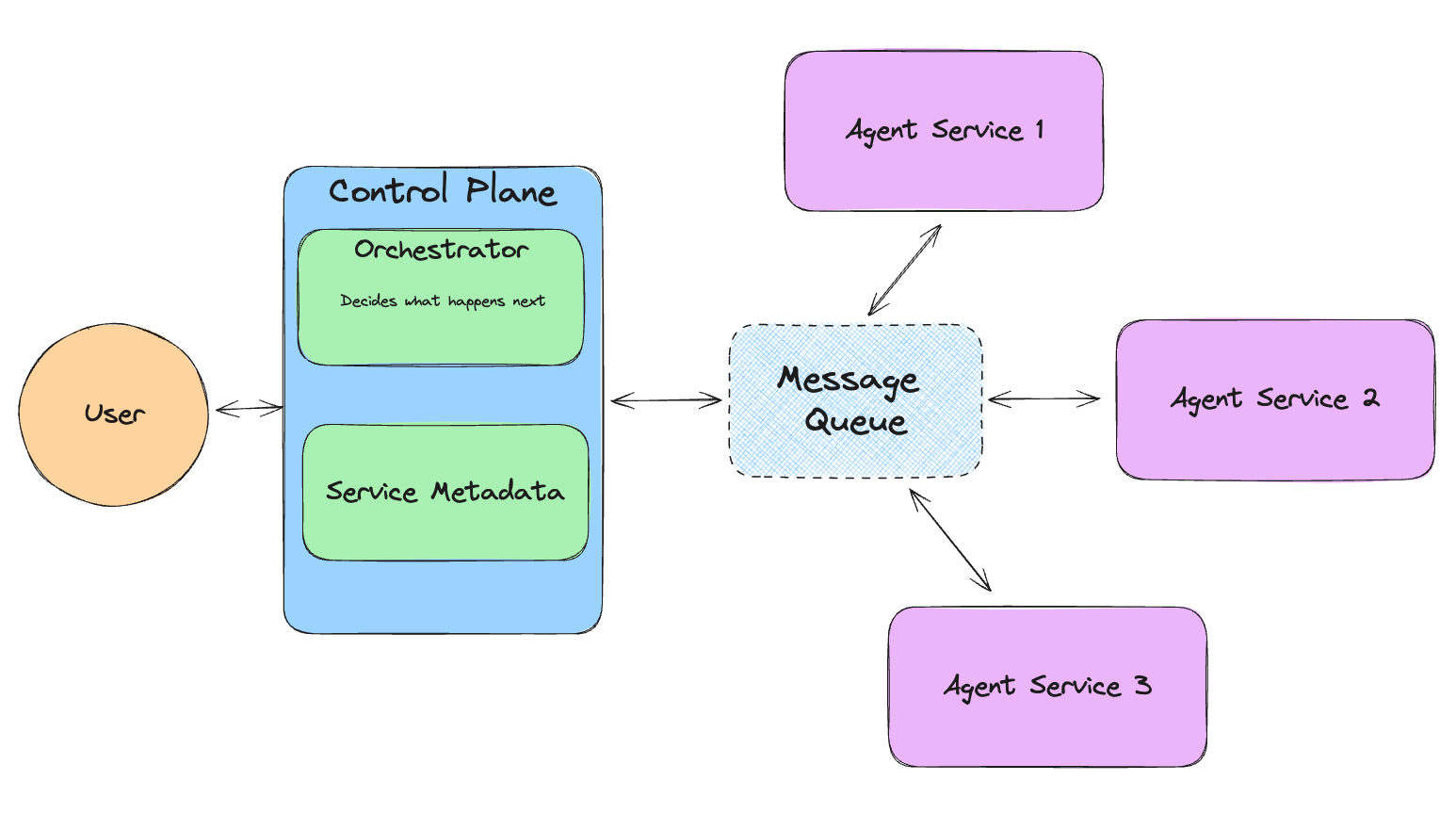

In llama-agents, each agent operates as a service, continuously processing incoming tasks by pulling messages from, and publishing messages to, a message queue.

At the core of a llama-agents system is the control plane. The control plane tracks ongoing tasks, manages the network’s services, and uses an orchestrator to assign the next step of a task to the appropriate service.

The overall system layout is illustrated below.

Components of a Llama-Agents System

Llama-agents consists of several key components that make up the overall system:

- Message Queue: The message queue is the shared communication channel for all services and the control plane. It provides methods for publishing messages to named queues and delivers those messages to the registered consumers.

- Control Plane: The control plane serves as the central gateway to the llama-agents system. It tracks current tasks and manages the services registered in the system. It also includes the orchestrator.

- Orchestrator: This module handles incoming tasks, decides which service to send them to, and manages the handling of results from services. An orchestrator can be agentic (with an LLM making decisions), explicit (with a query pipeline defining a flow), a mix of both, or something completely custom.

- Services: Services are where the actual work takes place. A service accepts an incoming task and context, processes it, and publishes a result. A tool service is a special type of service used to offload the computation of agent tools. Agents can be equipped with a meta-tool that calls the tool service.
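The way these four components fit together can be sketched in plain Python. This is a toy model under stated assumptions, not the llama-agents implementation: every class and function name below (`ToyMessageQueue`, `ToyControlPlane`, `keyword_orchestrator`, `make_service`) is hypothetical, delivery is synchronous for brevity, and the orchestrator is an explicit keyword rule rather than an LLM.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Message:
    queue: str    # name of the destination queue
    task_id: str
    payload: str


Handler = Callable[[Message], None]


class ToyMessageQueue:
    """Delivers each published message to the consumers of its named queue."""

    def __init__(self) -> None:
        self._consumers: Dict[str, List[Handler]] = defaultdict(list)

    def register_consumer(self, queue: str, handler: Handler) -> None:
        self._consumers[queue].append(handler)

    def publish(self, message: Message) -> None:
        for handler in self._consumers[message.queue]:
            handler(message)


def keyword_orchestrator(text: str, services: Dict[str, str]) -> str:
    """Explicit routing: pick the first service whose name appears in the task."""
    for name in services:
        if name in text:
            return name
    return next(iter(services))  # fall back to the first registered service


class ToyControlPlane:
    """Tracks tasks and registered services; asks the orchestrator where to route."""

    def __init__(self, mq: ToyMessageQueue, orchestrator) -> None:
        self.mq = mq
        self.orchestrator = orchestrator
        self.services: Dict[str, str] = {}  # service name -> description
        self.tasks: Dict[str, str] = {}     # task id -> status or final result
        mq.register_consumer("control_plane", self._on_result)

    def register_service(self, name: str, description: str, handler: Handler) -> None:
        self.services[name] = description
        self.mq.register_consumer(name, handler)

    def submit(self, task_id: str, text: str) -> None:
        self.tasks[task_id] = "running"
        target = self.orchestrator(text, self.services)
        self.mq.publish(Message(target, task_id, text))

    def _on_result(self, message: Message) -> None:
        self.tasks[message.task_id] = message.payload


def make_service(mq: ToyMessageQueue, work: Callable[[str], str]) -> Handler:
    """A service: accept a task, process it, publish the result to the control plane."""
    def handler(message: Message) -> None:
        result = work(message.payload)
        mq.publish(Message("control_plane", message.task_id, result))
    return handler


def run_demo() -> Dict[str, str]:
    mq = ToyMessageQueue()
    control_plane = ToyControlPlane(mq, keyword_orchestrator)
    control_plane.register_service("shout", "upper-cases text", make_service(mq, str.upper))
    control_plane.register_service("reverse", "reverses text", make_service(mq, lambda s: s[::-1]))
    control_plane.submit("t1", "reverse abc")
    control_plane.submit("t2", "shout hi")
    return control_plane.tasks
```

In this sketch the services never talk to each other directly. Each one only consumes from its own named queue and publishes back to the `control_plane` queue, so swapping `keyword_orchestrator` for an LLM-driven router would not require touching the services.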

Low-Level API in Llama-Agents

So far, you’ve seen how to define components and launch them. However, in most production use cases, you will need to manually launch services and define your own consumers. Here’s a quick guide on how to do exactly that!
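As a rough illustration of what "manually launching services and defining your own consumers" involves, the sketch below starts a service loop and a custom consumer as separate background tasks. It is written against Python's standard `asyncio` only and does not use or mirror the llama-agents API: `ResultCollector`, `echo_service`, `consume`, and `main` are hypothetical names chosen for this example.

```python
import asyncio
from typing import List


class ResultCollector:
    """Hypothetical custom consumer: stores every result message it receives."""

    def __init__(self) -> None:
        self.received: List[str] = []

    async def process_message(self, message: str) -> None:
        self.received.append(message)


async def echo_service(inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
    """Long-running service loop: pull a task, process it, publish the result."""
    while True:
        task = await inbox.get()
        await outbox.put(f"done: {task}")
        inbox.task_done()


async def consume(outbox: asyncio.Queue, consumer: ResultCollector) -> None:
    """Deliver each published result to the custom consumer."""
    while True:
        message = await outbox.get()
        await consumer.process_message(message)
        outbox.task_done()


async def main(tasks: List[str]) -> List[str]:
    inbox: asyncio.Queue = asyncio.Queue()
    outbox: asyncio.Queue = asyncio.Queue()
    collector = ResultCollector()
    # "Manually launch" each piece as its own background task.
    background = [
        asyncio.create_task(echo_service(inbox, outbox)),
        asyncio.create_task(consume(outbox, collector)),
    ]
    for task in tasks:
        await inbox.put(task)
    await inbox.join()   # every task has been processed by the service
    await outbox.join()  # every result has been delivered to the consumer
    for job in background:
        job.cancel()     # stop the otherwise endless loops
    await asyncio.gather(*background, return_exceptions=True)
    return collector.received
```

Running `asyncio.run(main(["a", "b"]))` returns the messages the consumer collected. The design point is that the consumer is an ordinary object with a single message-handling method, so the same loop could hand results to a logger, a web socket, or a human reviewer instead.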
