Excitement surrounds the release of two new open-source models designed specifically for tool use: Llama-3-Groq-70B-Tool-Use and Llama-3-Groq-8B-Tool-Use, built with Meta Llama-3. Developed in collaboration with Glaive, these models mark a significant advancement in open-source AI capabilities for tool use and function calling.

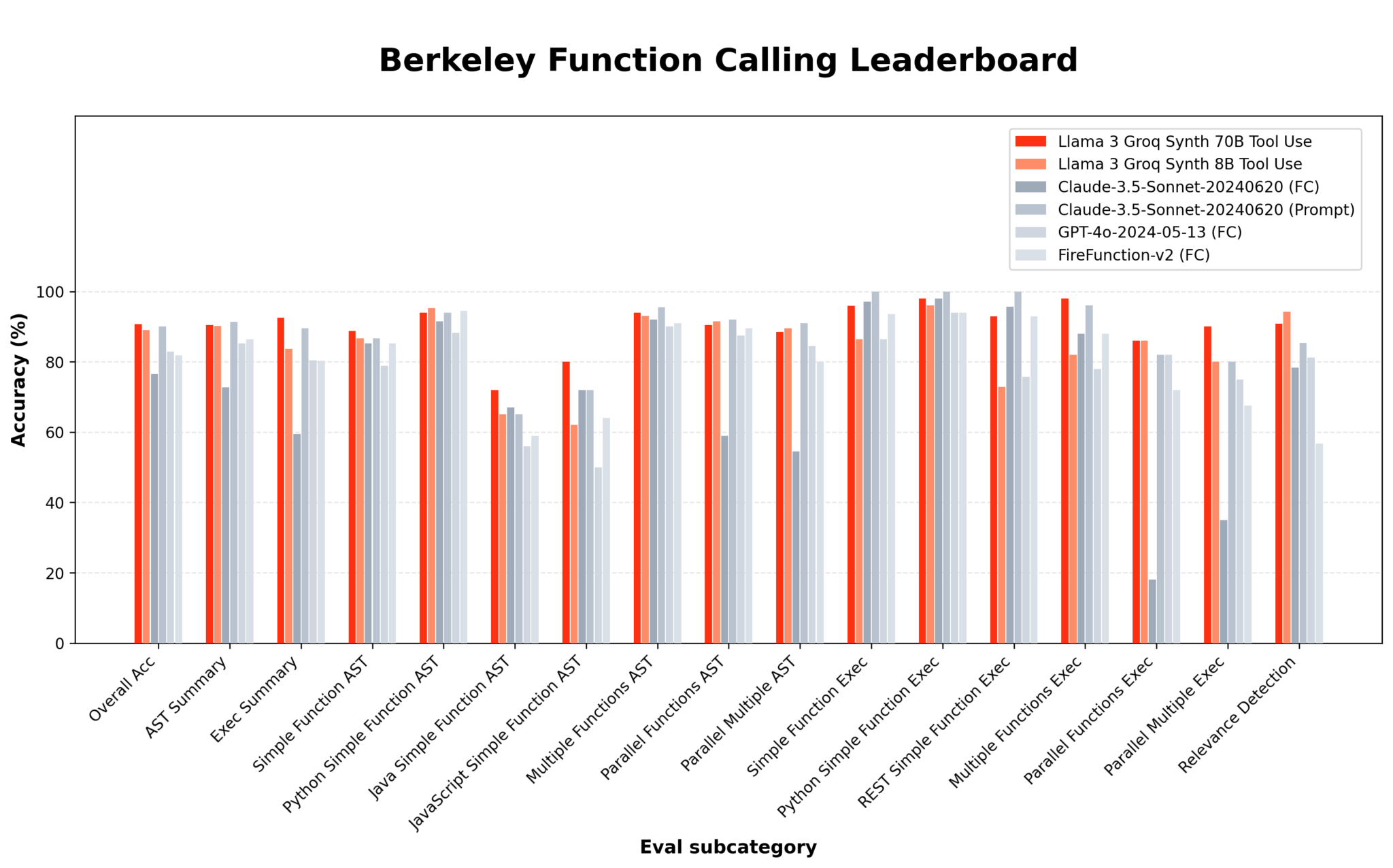

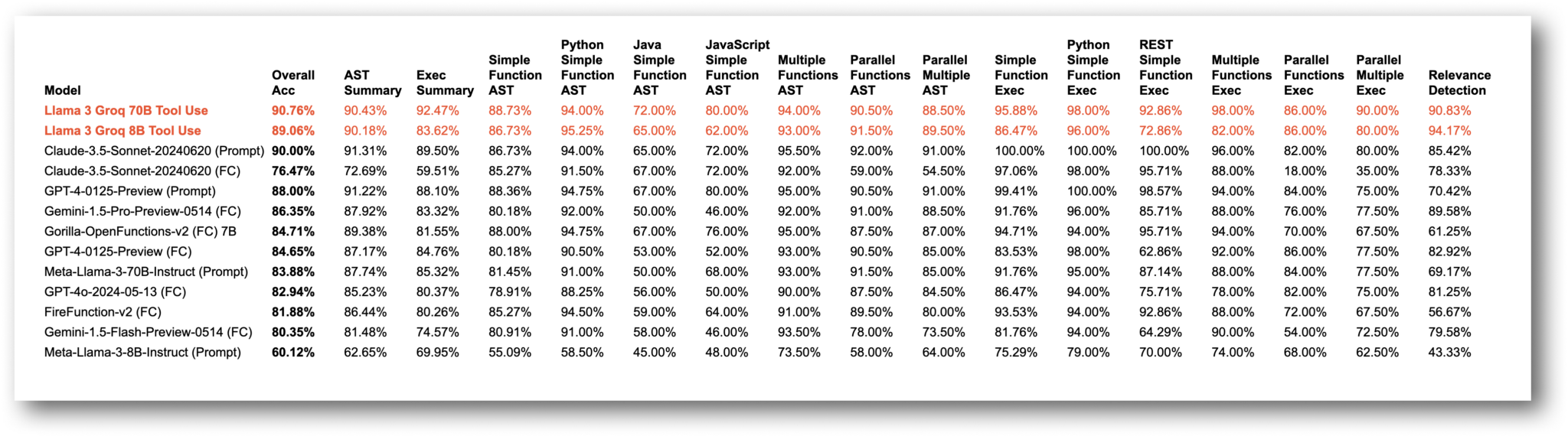

Llama-3-Groq-70B-Tool-Use has distinguished itself as the top performer on the Berkeley Function Calling Leaderboard (BFCL), surpassing all other open-source and proprietary models.

Model Details

Availability: Both models are now available on the GroqCloud™ Developer Hub and on Hugging Face.

Licensing: These models are released with the same permissive license as the original Llama-3 models.

Training Approach: The models were fine-tuned using a combination of full fine-tuning and Direct Preference Optimization (DPO) to achieve state-of-the-art tool use performance. No user data was used in training; the models were trained only on ethically generated data.

Benchmark Results

The models have set new benchmarks for large language models with tool use capabilities:

- Llama-3-Groq-70B-Tool-Use: Achieved 90.76% overall accuracy (#1 on BFCL at the time of publishing).

- Llama-3-Groq-8B-Tool-Use: Achieved 89.06% overall accuracy (#3 on BFCL at the time of publishing).

Benchmark results were obtained using the open-source evaluation repository ShishirPatil/gorilla on commit 7bef000.

Overfitting Analysis

A thorough contamination analysis using the LMSYS method revealed low contamination rates for the synthetic data used in fine-tuning: 5.6% for the SFT data and 1.3% for the DPO data relative to test set data in BFCL, indicating minimal to no overfitting on the evaluation benchmark.

General Benchmark Performance

A carefully selected learning schedule was employed to minimize the impact on general-purpose performance.

Specialized Models & Routing

While the Llama-3 Groq Tool Use models excel at function calling and tool use tasks, a hybrid approach is recommended, combining these specialized models with general-purpose language models. This strategy leverages the strengths of both model types to optimize performance across a wide range of tasks.

Recommended approach:

- Query Analysis: Implement a routing system that analyzes incoming user queries to determine their nature and requirements.

- Model Selection: Route requests based on the query analysis:

  - For function calling, API interactions, or structured data manipulation, use the Llama-3 Groq Tool Use models.

  - For general knowledge, open-ended conversations, or tasks not specifically related to tool use, use a general-purpose language model like the unmodified Llama-3 70B.

This routing strategy ensures that each query is handled by the most suitable model, maximizing the overall performance and capabilities of the AI system. It allows harnessing the specialized tool use abilities of the Llama-3 Groq models while maintaining the flexibility and broad knowledge base of general-purpose models.
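The routing approach described above can be sketched as a small rule-based router. This is only an illustration under stated assumptions, not the routing system used by Groq: the keyword list, the `Request` class, and the function names are hypothetical, and `llama3-70b-8192` is assumed to be the model ID of the unmodified Llama-3 70B. A production router might use a classifier or a small LLM in place of the keyword check.

```python
import re
from dataclasses import dataclass, field

# Model ID taken from this post.
TOOL_USE_MODEL = "llama3-groq-70b-8192-tool-use-preview"
# Assumed ID for the unmodified Llama-3 70B; adjust to your deployment.
GENERAL_MODEL = "llama3-70b-8192"

# Hypothetical hints that a query is asking for an action or structured lookup.
TOOL_HINTS = re.compile(r"\b(call|api|fetch|look up|schedule|convert)\b")


@dataclass
class Request:
    """A user query plus the tool definitions the application can offer."""
    query: str
    tools: list = field(default_factory=list)


def needs_tool_use(request: Request) -> bool:
    """Query analysis: a request is tool-related only if tools are
    available and the wording suggests an action or lookup."""
    if not request.tools:
        return False
    return TOOL_HINTS.search(request.query.lower()) is not None


def select_model(request: Request) -> str:
    """Model selection: pick the specialized or general-purpose model."""
    return TOOL_USE_MODEL if needs_tool_use(request) else GENERAL_MODEL
```

With a weather tool registered, a query such as "Look up the weather in Paris" would be routed to the tool use model, while "Tell me about the history of Paris" would go to the general-purpose model.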

Conclusion

The Llama-3 Groq Tool Use models represent a significant step forward in open-source AI for tool use. With state-of-the-art performance and a permissive license, these models are expected to enable developers and researchers to push the boundaries of AI applications in various domains.

The community is invited to explore, utilize, and build upon these models. Feedback and contributions are crucial for continuing to advance the field of AI together.

As the community continues to innovate and experiment with open-source models, even higher BFCL scores are expected, eventually saturating the benchmark and closing out the first inning of tool use for LLMs.

Start Building

Both Llama-3-Groq-70B-Tool-Use and Llama-3-Groq-8B-Tool-Use are available for preview access through the Groq API with the following model IDs:

- llama3-groq-70b-8192-tool-use-preview

- llama3-groq-8b-8192-tool-use-preview
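As a rough starting point, the sketch below builds a tool use request body for one of these model IDs. It assumes the Groq API accepts an OpenAI-style chat completions payload with `messages`, `tools`, and `tool_choice` fields; the `get_weather` tool and the `build_tool_use_request` helper are hypothetical and exist only for illustration. Check the GroqCloud documentation for the authoritative request format.

```python
import json

# Model ID listed in this post.
MODEL_ID = "llama3-groq-70b-8192-tool-use-preview"


def build_tool_use_request(user_message: str, model: str = MODEL_ID) -> dict:
    """Build a chat completions request body that offers one tool.

    Assumes an OpenAI-style schema; `get_weather` is a made-up tool.
    """
    weather_tool = {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {
                    "city": {"type": "string", "description": "City name"},
                },
                "required": ["city"],
            },
        },
    }
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "tools": [weather_tool],
        # Let the model decide whether to call the tool.
        "tool_choice": "auto",
    }


if __name__ == "__main__":
    request = build_tool_use_request("What is the weather in Toronto?")
    print(json.dumps(request, indent=2))
```

The resulting dictionary can be serialized to JSON and sent with whichever HTTP client or SDK you already use. If the model chooses to call the tool, the application is responsible for executing it and returning the result in a follow-up message.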

Get started in the GroqCloud Dev Hub today!
