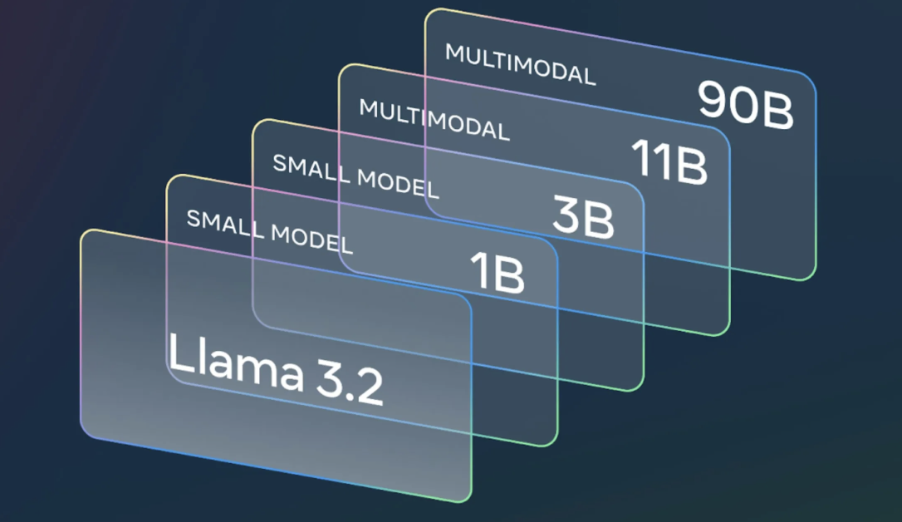

The two largest models in the Llama 3.2 collection, 11B and 90B, are designed for image reasoning tasks: document-level understanding (including interpreting charts and graphs), image captioning, and visual grounding, such as locating objects in an image from a natural-language description. For instance, a small-business owner could ask which month last year had the best sales, and Llama 3.2 could reason over a sales chart and quickly provide the answer. In another scenario, the model might read a map to determine where a hike becomes steeper or how long a specific trail is. These models also bridge vision and language: they can extract key details from an image, interpret the scene, and generate captions that convey the story behind it.

The smaller, lightweight models, 1B and 3B, excel at multilingual text generation and tool integration. These models enable developers to create personalized, on-device applications that prioritize privacy by keeping data local. For example, an app could summarize recent messages, identify action items, and use tool-calling features to schedule follow-up meetings by sending calendar invites directly.
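The tool-calling workflow described above can be sketched as a small dispatcher that parses a structured call emitted by the model and runs the matching local function. This is a minimal sketch under stated assumptions: it assumes the model has been prompted to reply with a JSON object of the form `{"name": ..., "parameters": {...}}` (the actual format depends on the prompt template in use), and `schedule_meeting` and `TOOLS` are hypothetical names. The tool only builds an invite record; a real app would call the platform's calendar API instead.

```python
import json
from typing import Any, Callable, Dict, List


def schedule_meeting(title: str, attendees: List[str], start: str) -> Dict[str, Any]:
    """Hypothetical tool: build the calendar invite the app would send.

    A real app would call the platform's calendar API here; this sketch
    only returns a record describing the invite.
    """
    return {"title": title, "attendees": attendees, "start": start, "status": "sent"}


# Hypothetical registry mapping tool names (as the model emits them) to local functions.
TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {
    "schedule_meeting": schedule_meeting,
}


def dispatch_tool_call(model_output: str) -> Dict[str, Any]:
    """Parse an assumed JSON tool call from the model and run the matching tool."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    # The model's parameters become keyword arguments of the local function.
    return TOOLS[name](**call.get("parameters", {}))


if __name__ == "__main__":
    # What the model might emit after summarizing recent messages.
    output = json.dumps({
        "name": "schedule_meeting",
        "parameters": {
            "title": "Follow-up on Q3 plan",
            "attendees": ["alice@example.com", "bob@example.com"],
            "start": "2024-10-01T10:00",
        },
    })
    print(dispatch_tool_call(output))
```

Keeping the registry explicit means the app only ever executes functions it has deliberately exposed, regardless of what name the model produces.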

Running these models locally offers two key benefits. First, responses can feel almost instantaneous, because all processing happens on the device. Second, sensitive information such as messages and calendar data never has to leave the device, which protects privacy. Local execution also gives the app explicit control over which tasks stay on the device and which are handed off to larger cloud-based models.
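One way an app might make that on-device versus cloud decision is a simple rule-based router. This is a hypothetical policy for illustration only: the `Task` fields, the `route` function, and the character-length threshold are assumptions, not part of Llama 3.2 or any Meta API.

```python
from dataclasses import dataclass

# Hypothetical threshold: prompts longer than this (in characters) are
# treated as too heavy for the small on-device model.
MAX_LOCAL_PROMPT_CHARS = 4000


@dataclass
class Task:
    prompt: str
    touches_private_data: bool  # e.g. messages or calendar entries


def route(task: Task) -> str:
    """Return "device" or "cloud" under a simple privacy-first policy."""
    # Privacy first: anything involving personal data stays on the device.
    if task.touches_private_data:
        return "device"
    # Long, non-sensitive prompts may go to a larger cloud-based model.
    if len(task.prompt) > MAX_LOCAL_PROMPT_CHARS:
        return "cloud"
    return "device"


if __name__ == "__main__":
    print(route(Task("Summarize my last 10 messages", touches_private_data=True)))  # device
    print(route(Task("x" * 5000, touches_private_data=False)))  # cloud
```

Checking privacy before workload size means personal data is never sent off the device, even when a task would otherwise qualify for the cloud.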

Llama 3.2 Model Evaluations

Our assessments indicate that the Llama 3.2 vision models are competitive with leading foundation models, such as Claude 3 Haiku and GPT-4o mini, on image recognition and a range of visual understanding tasks. The 3B model outperforms Gemma 2 2.6B and Phi-3.5-mini on tasks such as instruction following, summarization, prompt rewriting, and tool use, while the 1B model performs comparably to Gemma.

We conducted evaluations across more than 150 benchmark datasets covering a variety of languages. For the vision LLMs, we specifically assessed their performance on benchmarks focused on image understanding and visual reasoning tasks.

Visit Hugging Face: https://huggingface.co/meta-llama
