In this article, we will delve into LLama-2: what it is, how it is trained, its comparison to GPT variants and ChatGPT, as well as its architecture and code. We aim to offer a comprehensive understanding of this technological advancement.

Introduction to LLama-2

LLama-2 is a suite of pre-trained language models, while LLama2 Chat is a chatbot fine-tuned from those models using reinforcement learning from human feedback (RLHF). The pairing mirrors OpenAI’s lineup: GPT-3 is a family of pre-trained language models, and ChatGPT is a chatbot fine-tuned with a similar RLHF process.

In other words, LLama-2 plays the same role as GPT-3, and LLama2 Chat plays the same role as ChatGPT.

Understanding the Basics

Pre-Trained Language Models

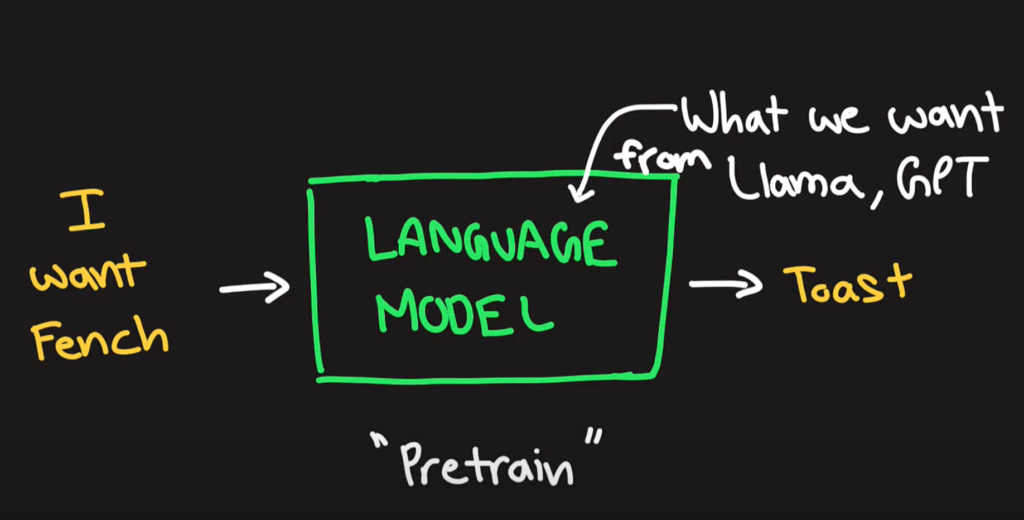

Language models predict the next word in a sequence based on the preceding ones.

To achieve this, LLama-2 and GPT models are trained on a very large text corpus (about 2 trillion tokens in the case of LLama-2), and their parameters are adjusted so that the observed next word becomes more likely. This process is known as pre-training; it prepares the architecture to perform language modeling effectively.
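To make next-word prediction concrete, here is a minimal sketch that uses plain bigram counts instead of a neural network. The tiny corpus and the function names `train_bigram` and `predict_next` are assumptions made up for illustration; a model like LLama-2 learns the same kind of conditional distribution, but with a Transformer and vastly more text.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent next word and its estimated probability."""
    followers = counts[word]
    total = sum(followers.values())
    if total == 0:
        return None, 0.0  # word never seen as a context
    best, freq = followers.most_common(1)[0]
    return best, freq / total

# Toy corpus (illustrative assumption, not real training data).
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
print(predict_next(model, "sat"))  # ('on', 1.0)
```

"Training" here is just counting; pre-training a neural language model replaces the count table with parameters that are adjusted by gradient descent, but the prediction target is the same.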

Fine-Tuned Chatbots

For chatbots like LLama2 Chat and ChatGPT, the goal extends to answering input questions accurately.

This is still training, but because it starts from the pre-trained model and targets a specific task, it is referred to as fine-tuning: the model’s ability to answer questions is refined using example question-answer pairs.
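As a rough illustration of what a fine-tuning example might look like, the sketch below joins a question and its answer into one training sequence and marks which positions count toward the loss. The whitespace tokenization, the "Question:"/"Answer:" template, and the name `build_sft_example` are all assumptions for illustration; they are not the actual prompt format or tokenizer used for LLama2 Chat.

```python
def build_sft_example(question, answer):
    """Join a question and answer into one sequence plus a loss mask.

    Whitespace tokenization and the plain template are illustrative
    assumptions, not the real chat format.
    """
    prompt_tokens = f"Question: {question} Answer:".split()
    answer_tokens = answer.split()
    tokens = prompt_tokens + answer_tokens
    # 0 = ignore this position in the loss, 1 = train on it.
    loss_mask = [0] * len(prompt_tokens) + [1] * len(answer_tokens)
    return tokens, loss_mask

tokens, mask = build_sft_example("What is the capital of France?", "Paris.")
for tok, m in zip(tokens, mask):
    print(m, tok)
```

Masking the prompt means the model is only penalized for how well it predicts the answer, which is one common way to focus fine-tuning on response quality.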

Reinforcement Learning From Human Feedback

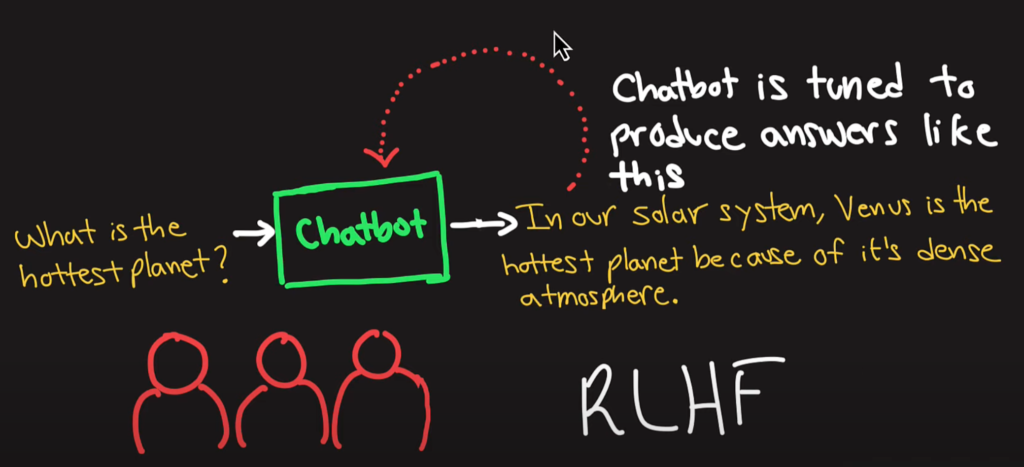

Upon being trained to answer questions, chatbots can generate multiple responses to the same query. Human reviewers then evaluate these responses to determine the most appropriate one.

This assessment acts as feedback for the chatbot, encouraging it to produce more answers like the ones reviewers preferred. In practice, the human preferences are typically used to train a reward model, which then scores new responses during training. This entire process is called reinforcement learning from human feedback (RLHF).
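One common way to turn such human comparisons into a training signal is to fit a reward model with a pairwise ranking loss. The sketch below assumes scalar reward scores are already available for the preferred and the rejected response; the function name and the sample scores are hypothetical, and this is not claimed to be the exact objective used for LLama2 Chat.

```python
import math

def pairwise_ranking_loss(reward_chosen, reward_rejected):
    """Compute -log(sigmoid(reward_chosen - reward_rejected)).

    The loss is small when the human-preferred response scores higher
    than the rejected one, and large when the order is reversed.
    """
    diff = reward_chosen - reward_rejected
    # Numerically stable form of log(1 + exp(-diff)).
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))

# Hypothetical reward scores for two responses to the same question.
print(pairwise_ranking_loss(3.0, 1.0))  # reward model agrees with the reviewer
print(pairwise_ranking_loss(1.0, 3.0))  # reward model disagrees: larger loss
```

Minimizing this loss pushes the reward model to score preferred responses above rejected ones; the chatbot is then optimized to produce responses that the reward model rates highly.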

Comprehensive Training Overview

The training journey begins with an untrained model, which learns language modeling from a very large number of examples. This results in LLama-2, a pre-trained language model. Further training on question-answering refines it into LLama2 Chat, a model able to generate multiple plausible responses to a single question. Reinforcement learning from human feedback then steers the model toward the responses people prefer, culminating in a chatbot that is intended to be safe, helpful, and non-toxic.

Model Architecture and Code

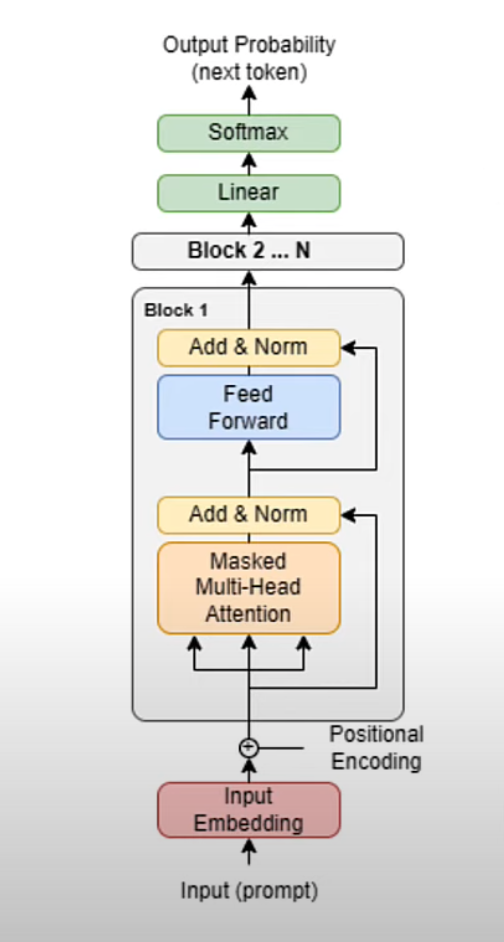

LLama-2 employs a decoder-only Transformer architecture, streamlined for efficiency. The diagram above is an approximation: it closely resembles the actual structure, with minor differences in the order and placement of the normalization layers.
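Two ingredients of a decoder-only Transformer can be sketched in a few lines of plain Python: causal attention, where each position may only attend to itself and earlier positions, and a normalization layer. The sketch below uses RMS normalization, which is the kind of normalization the LLama models are known to use; the function names and the toy inputs are assumptions for illustration, and this is not the reference implementation.

```python
import math

def causal_attention_weights(scores):
    """Row-wise softmax over a square score matrix with future positions masked."""
    n = len(scores)
    weights = []
    for i in range(n):
        # Position i may only attend to positions 0..i.
        visible = scores[i][: i + 1]
        m = max(visible)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in visible]
        total = sum(exps)
        weights.append([e / total for e in exps] + [0.0] * (n - i - 1))
    return weights

def rms_norm(x, gain=None, eps=1e-6):
    """Divide a vector by its root mean square, then apply an optional gain."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    gain = gain or [1.0] * len(x)
    return [g * v / rms for g, v in zip(gain, x)]

# Toy attention scores: all equal, so visible positions share weight evenly.
scores = [[0.0, 0.0, 0.0] for _ in range(3)]
for row in causal_attention_weights(scores):
    print([round(w, 3) for w in row])
# [1.0, 0.0, 0.0]
# [0.5, 0.5, 0.0]
# [0.333, 0.333, 0.333]
```

Each row sums to 1 and has zeros to the right of the diagonal, which is what prevents a decoder-only model from looking at future tokens while it predicts the next one.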

Code Insight

The Facebook Research repository on GitHub contains the LLama-2 model code. Despite the model’s scale, the core Transformer block and the forward pass fit within a few hundred lines of code, which makes the architecture easy to read end to end.

Comparing LLama and GPT Architectures

Several distinctions set LLama apart from GPT models:

- Open Source vs. Proprietary: LLama’s code and model weights are openly available, so its inner workings can be inspected, unlike the closed approach taken with GPT-3 and ChatGPT.

- Training Data: LLama is trained on publicly available data rather than proprietary datasets, which makes its data sources easier to understand.

- Resource Efficiency: LLama demands fewer resources for fine-tuning, presenting a more accessible option for developers.

- Model Size and Performance: Despite its smaller size, LLama is reported to perform on par with, or better than, GPT-3 on many benchmarks, thanks to its extensive training data.

Getting Started with Coding

For those interested in using LLama models, the Hugging Face Hub hosts pre-trained models along with fine-tuning instructions. Additional tools like QLoRA reduce the memory needed for fine-tuning, making model development more accessible.
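Much of the saving in parameter-efficient fine-tuning comes from low-rank adapters (LoRA, the idea that QLoRA builds on): the original weight matrix stays frozen and only two small matrices are trained. The sketch below is a plain-Python illustration under stated assumptions: the 4096 by 4096 layer size, rank 8, and all function names are chosen for illustration and are not taken from any library.

```python
def lora_param_counts(d_in, d_out, rank):
    """Trainable parameters: full fine-tuning vs. a rank-`rank` adapter."""
    full = d_in * d_out
    adapter = rank * (d_in + d_out)  # A is rank x d_in, B is d_out x rank
    return full, adapter

def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]

def lora_forward(W, A, B, x, alpha, rank):
    """y = W x + (alpha / rank) * B (A x); W is frozen, only A and B train."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]

full, adapter = lora_param_counts(4096, 4096, 8)
print(full, adapter, f"{adapter / full:.2%}")  # 16777216 65536 0.39%
```

With B initialized to zeros, `lora_forward` returns exactly the frozen layer's output, so fine-tuning starts from the pre-trained behavior and only gradually departs from it.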

Conclusion

We have explored the intricacies of LLama-2 and LLama2 Chat, from their foundational training processes to their sophisticated architecture and code. By comparing LLama to GPT variants, we’ve highlighted its advantages in openness, efficiency, and performance. With resources readily available for developers to dive into, LLama-2 stands as a testament to the ongoing evolution in the field of AI language models.

Thank you for joining this exploration of LLama-2. We hope this article has illuminated the model’s capabilities and potential applications.